Test data quality during CI/CD development

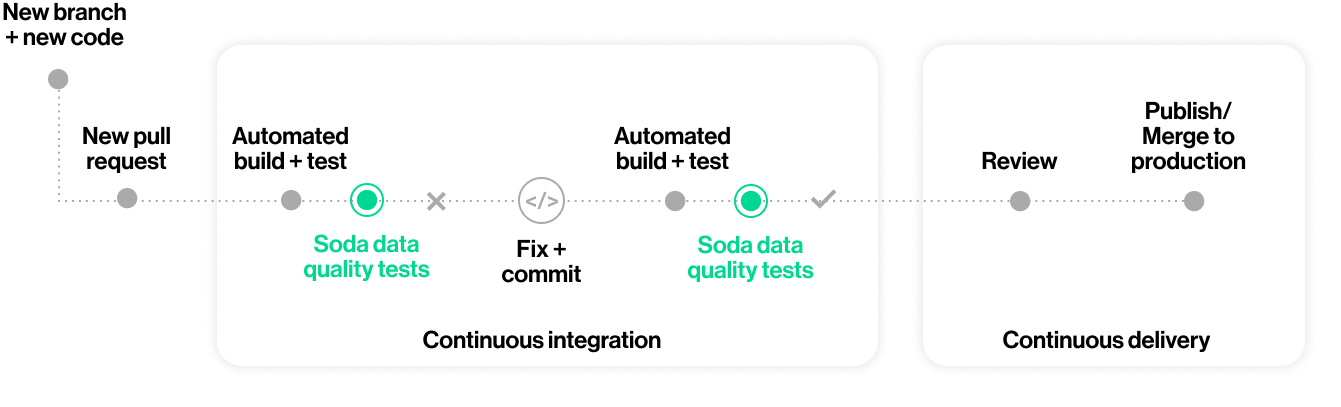

Follow this guide to set up and run automated Soda scans for data quality during CI/CD development using GitHub Actions.

Use this guide to install and set up Soda to test the quality of your data during your development lifecycle. Catch data quality issues in a GitHub pull request before merging data management changes, such as transformations, into production.

Not quite ready for this big gulp of Soda? 🥤Try taking a sip, first.

About this guide

The instructions below offer Data Engineers an example of how to use the Soda Library Action to execute SodaCL checks for data quality on data in a Snowflake data source.

For context, the example assumes that a team of people uses GitHub to collaborate on managing data ingestion and transformation with dbt. In the same repo, team members collaborate to write tests for data quality in SodaCL checks YAML files. With each new pull request in the repository, or each commit to an existing one, that adds a transformation or changes a dbt model, the GitHub Action in the Workflow executes a Soda scan for data quality and presents the results of the scan in a comment in the pull request and in Soda Cloud.

Where the scan results indicate an issue with data quality, Soda notifies the team in Slack so that they can investigate and address any issues before merging the PR into production.

Borrow from this guide to connect to your own data source, add the GitHub Action for Soda to a Workflow, and execute your own relevant tests for data quality to prevent issues in production.

Add the GitHub Action for Soda to a Workflow

In a browser, navigate to cloud.soda.io/signup to create a new Soda account, which is free for a 45-day trial. If you already have a Soda account, log in.

Navigate to your avatar > Profile, then access the API keys tab. Click the plus icon to generate new API keys. Copy and paste the API key values to a temporary, secure place in your local environment.

Why do I need a Soda Cloud account?

To validate your account license or free trial, the Soda Library Docker image that the GitHub Action uses to execute scans must communicate with a Soda Cloud account via API keys. Create new API keys in your Soda Cloud account, then use them to configure the connection between the Soda Library Docker image and your account later in this procedure.

In the GitHub repository in which you wish to include data quality scans in a Workflow, create a folder named `soda` for the configuration files that Soda requires as input to run a scan.

In this folder, create two files:

a `configuration.yml` file to store the connection configuration Soda needs to connect to your data source and your Soda Cloud account.

a `checks.yml` file to store the SodaCL checks you wish to execute to test for data quality; see next section.

Follow the instructions to add connection configuration details for both your data source and your Soda Cloud account to the `configuration.yml` file, as per the example below.
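A minimal sketch of what such a `configuration.yml` might look like for a Snowflake data source and a Soda Cloud account. The data source name `my_datasource`, the database, warehouse, schema, and role values, and the environment variable names are placeholders chosen for illustration, not values from this guide; check the Soda connection reference for your data source for the full set of supported keys.

```yaml
# soda/configuration.yml
# Placeholder values throughout: replace them with your own details.
# The ${...} references are resolved from environment variables at scan time,
# so no credentials are stored in the repository.
data_source my_datasource:
  type: snowflake
  username: ${SNOWFLAKE_USERNAME}
  password: ${SNOWFLAKE_PASSWORD}
  account: ${SNOWFLAKE_ACCOUNT}
  database: MY_DATABASE      # hypothetical database name
  warehouse: MY_WAREHOUSE    # hypothetical warehouse name
  schema: PUBLIC
  role: PUBLIC

# Connection to Soda Cloud using the API keys generated earlier
soda_cloud:
  host: cloud.soda.io
  api_key_id: ${SODA_CLOUD_API_KEY}
  api_key_secret: ${SODA_CLOUD_API_SECRET}
```

Referencing environment variables rather than literal values keeps this file safe to commit; the workflow that runs the scan is then responsible for supplying those variables.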

In the `.github/workflows` folder in your GitHub repository, open an existing Workflow or create a new workflow file.

In your browser, navigate to the GitHub Marketplace to access the Soda Library Action. Click Use latest version to copy the code snippet for the Action.

Paste the snippet into your new or existing workflow as an independent step, then add the required action inputs and environment variable as in the following example.
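A sketch of how the Soda step might sit in a workflow file, assuming the configuration files live in the `soda` folder created earlier. The workflow file name, the action reference (`@main`), the `soda_library_version` value, the data source name `my_datasource`, and the secret names are assumptions for illustration; prefer the exact snippet and version you copied from the Marketplace, and confirm the input names against the action's README.

```yaml
# .github/workflows/soda-scan.yml (hypothetical file name)
name: Scan for data quality

# Run on each new pull request and each commit to an existing one
on: pull_request

jobs:
  soda_scan:
    runs-on: ubuntu-latest
    name: Run Soda scan
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      # Your existing steps that complete the dbt run belong here,
      # before the Soda scan step.

      - name: Perform Soda scan
        # Assumption: replace with the reference copied from the Marketplace
        uses: sodadata/soda-github-action@main
        env:
          # Values come from GitHub encrypted secrets, not from the repo
          SODA_CLOUD_API_KEY: ${{ secrets.SODA_CLOUD_API_KEY }}
          SODA_CLOUD_API_SECRET: ${{ secrets.SODA_CLOUD_API_SECRET }}
          SNOWFLAKE_USERNAME: ${{ secrets.SNOWFLAKE_USERNAME }}
          SNOWFLAKE_PASSWORD: ${{ secrets.SNOWFLAKE_PASSWORD }}
          SNOWFLAKE_ACCOUNT: ${{ secrets.SNOWFLAKE_ACCOUNT }}
        with:
          soda_library_version: v1.0.4   # assumed version; pin the one you need
          data_source: my_datasource     # must match the name in configuration.yml
          configuration: ./soda/configuration.yml
          checks: ./soda/checks.yml
```

The environment variable names under `env` need to match whatever names your `configuration.yml` references, since that is how the credentials reach the scan.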

Be sure to add the Soda Action after the step in the workflow that completes a dbt run that executes your dbt tests.

Best practice dictates that you configure sensitive credentials using GitHub secrets. Read more about GitHub encrypted secrets.

Save the changes to your workflow file.

Write checks for data quality

A check is a test that Soda executes when it scans a dataset in your data source. The checks.yml file stores the checks you write using the Soda Checks Language (SodaCL). You can create multiple checks.yml files to organize your data quality checks and run all, or some of them, at scan time.

In your

sodafolder, open thechecks.ymlfile, then copy and paste the following rather generic checks into the file.

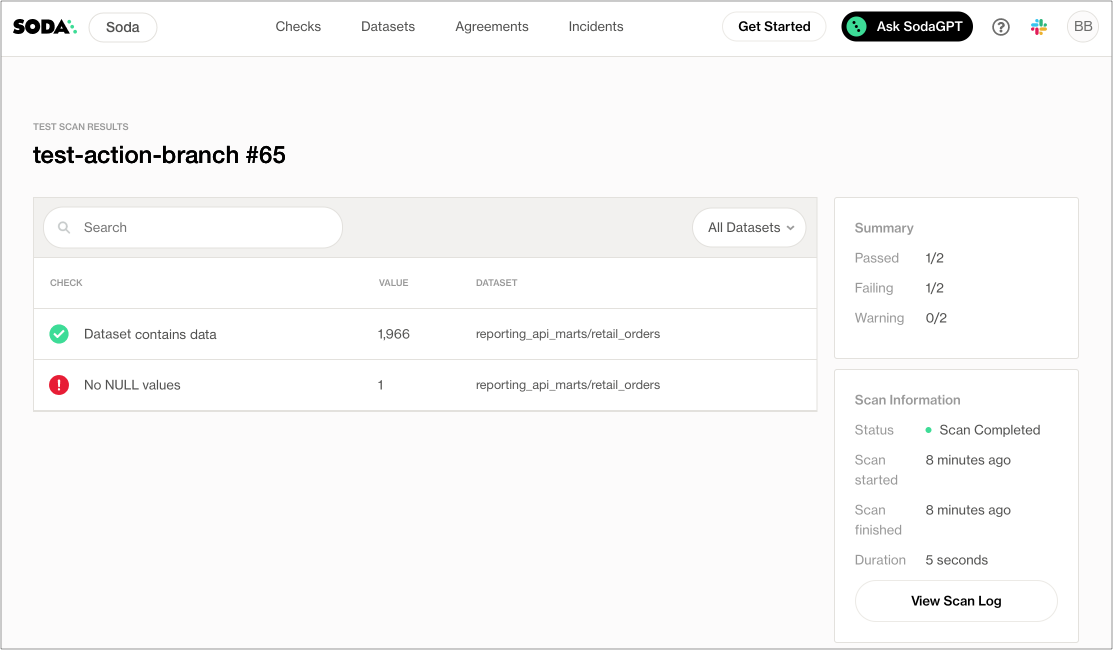

Replace the value of

dataset_namewith the name of a dataset in your data source.Replace the value of

column1with the name of a column in the dataset.yaml checks for dataset_name: # Checks that dataset contains rows - row_count > 0: name: Dataset contains data # Checks that column contains no NULL values - missing_count(column1) = 0: name: No NULL values

Save the

checks.ymlfile.

Trigger a scan and examine the scan results

To trigger the GitHub Action and initiate a Soda scan for data quality, create a new pull request in your repository. Be sure to trigger a Soda scan after the step in your Workflow that completes the dbt run that executed your dbt tests.

What does the GitHub Action do?

To summarize, the action completes the following tasks:

Checks to validate that the required Action input values are set.

Builds a Docker image with a specific Soda Library version for the base image.

Expands the environment variables to pass to the Docker run command as these variables can be configured in the workflow file and contain secrets.

Runs the built image to trigger the Soda scan for data quality.

Converts the Soda Library scan results to a markdown table using the newest hash from the 1.0.0 version.

Creates a pull request comment.

Posts any additional messages to make it clear whether or not the scan failed.

See the public soda-github-action repository for more detail.

For the purposes of this exercise, create a new branch in your GitHub repo, then make a small change to an existing file and commit and push the change to the branch.

Execute a dbt run.

Create a new pull request, then navigate to your GitHub account and review the pull request you just created. Notice that the Soda scan action is queued and perhaps already running against your data to check for quality.

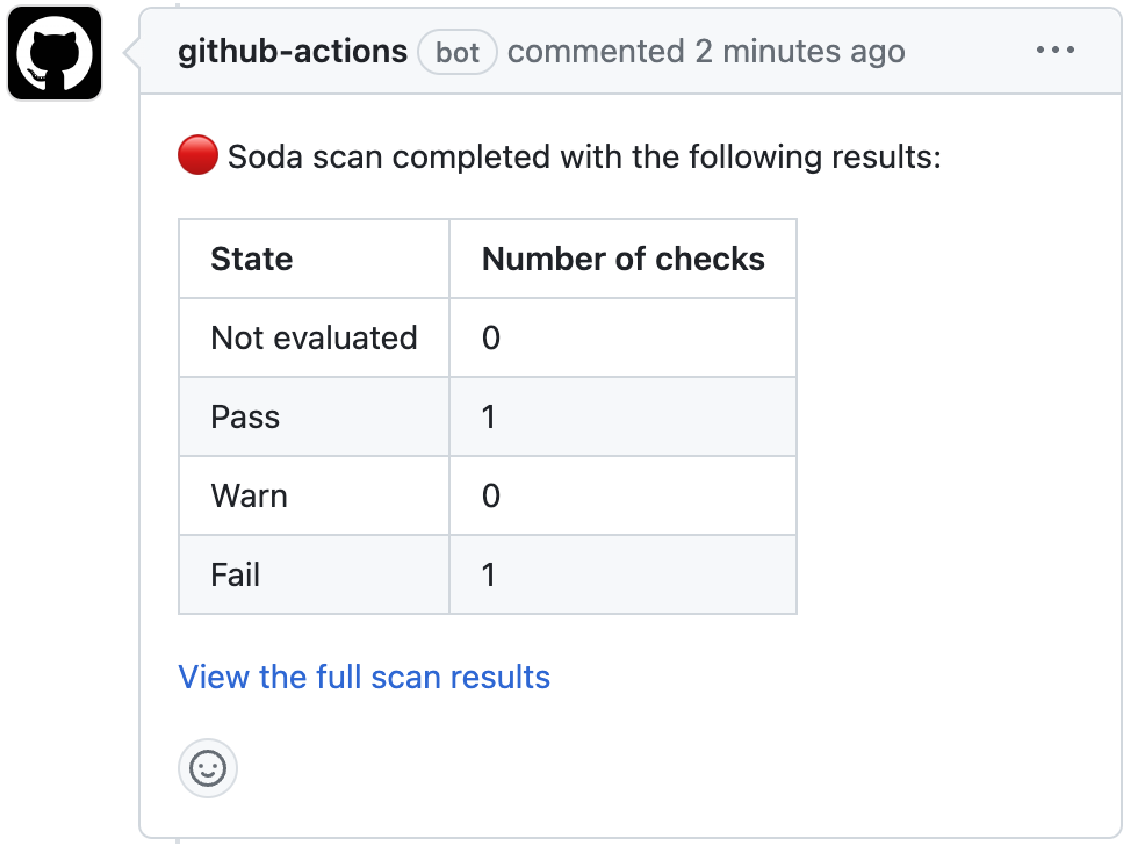

When the job completes, navigate to the pull request's Conversation tab to view the comment the Action posted via the github-actions bot. The table indicates the states and volumes of the check results.

To examine the full scan report and troubleshoot any issues, click the link in the comment to View full scan results, then click View Scan Log. Use Troubleshoot SodaCL for help diagnosing issues.

✨Well done!✨ You've taken the first step towards a future in which you and your colleagues prevent data quality issues from getting into production. Huzzah!

Go further

Get organized in Soda!

Request a demo. Hey, what can Soda do for you?

Need help? Join the Soda community on Slack.
