Test data quality in an Azure Data Factory pipeline

Use this guide to invoke Soda data quality tests in an Azure Data Factory pipeline.

Use this guide as an example of how to set up Soda to run data quality tests on data in an ETL pipeline in Azure Data Factory.

About this guide

This guide offers an example of how to set up and trigger Soda to run data quality scans from an Azure Data Factory (ADF) pipeline.

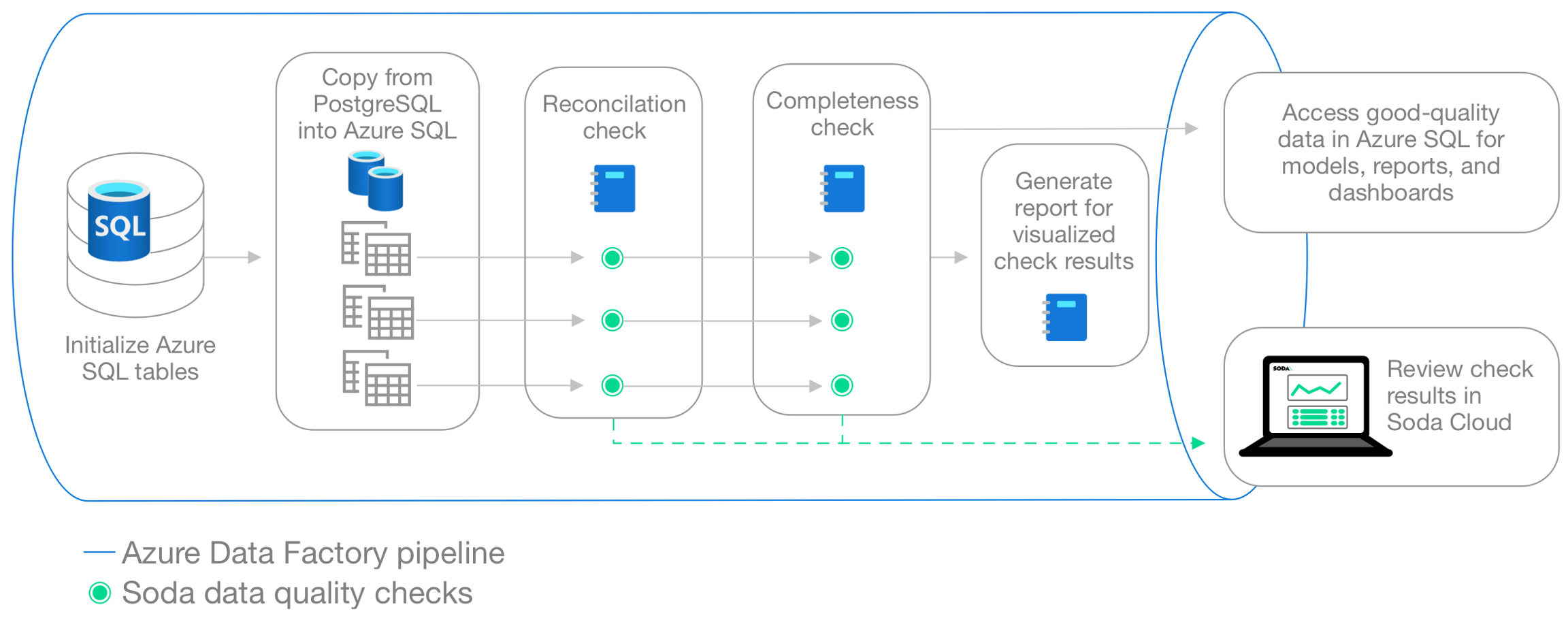

The Data Engineer in this example has copied data from a PostgreSQL data source to an Azure SQL Server data source and uses Soda reconciliation checks in a Synapse notebook to validate that data copied from the source to the target is the same. Next, they create a second notebook to execute Soda checks to validate the completeness of the ingested data. Finally, the Engineer generates a visualized report of the data quality results.

This example uses a programmatic deployment model which invokes the Soda Python library, and uses Soda Cloud to validate a commercial usage license and display visualized data quality test results.

Read more: SodaCL reference

Read more: Soda reconciliation checks

Read more: Choose a flavor of Soda

Prerequisites

The Data Engineer in this example has the following:

permission to configure Azure Cloud resources through the user interface

access to:

an Azure Data Factory pipeline

a Synapse workspace

a dedicated SQL pool in Synapse

a dedicated Apache Spark pool in Synapse

an external source SQL database such as PostgreSQL

an Azure Data Lake Storage account

an Azure Key Vault

The above-listed resources have permissions to interact with each other; for example the Synapse workspace has permission to fetch secrets from the Key Vault.

Python 3.8, 3.9, or 3.10

Pip 21.0 or greater

Python versions Soda supports

Soda officially supports Python versions 3.8, 3.9, and 3.10. Soda is largely functional on Python 3.11 and 3.12, but efforts to fully support those versions are ongoing.

With Python 3.11, some users might encounter issues with dependency constraints. At times, the combination of Python 3.11 and those constraints requires that a dependency be built from source rather than downloaded pre-built.

The same applies to Python 3.12, although anecdotal evidence indicates that 3.12 might not work in all scenarios due to dependency constraints.

Create a Soda Cloud account

To validate your account license or free trial, Soda Library must communicate with a Soda Cloud account via API keys. You create a set of API keys in your Soda Cloud account, then use them to configure the connection to Soda Library.

In a browser, the Data Engineer navigates to cloud.soda.io/signup to create a new Soda account, which is free for a 45-day trial.

They navigate to their avatar > Profile, then access the API keys tab and click the plus icon to generate new API keys.

They copy the API key values and paste them into their Azure Key Vault.

Use Soda to reconcile data

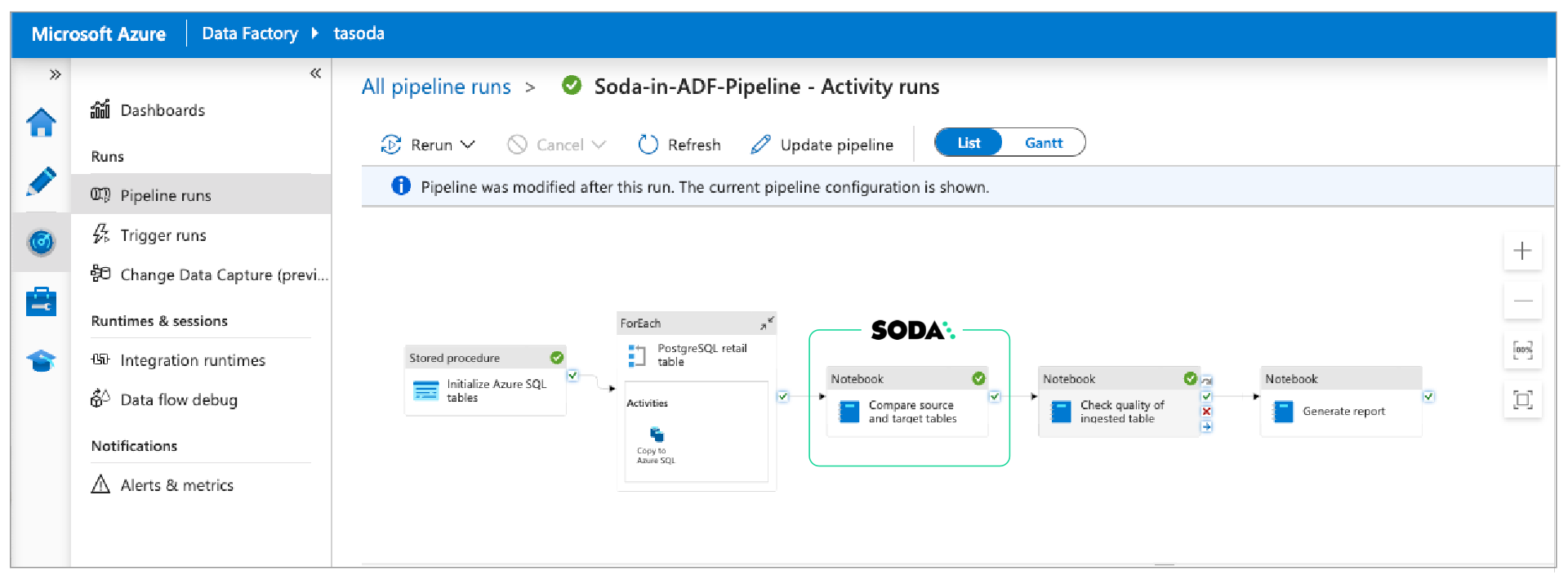

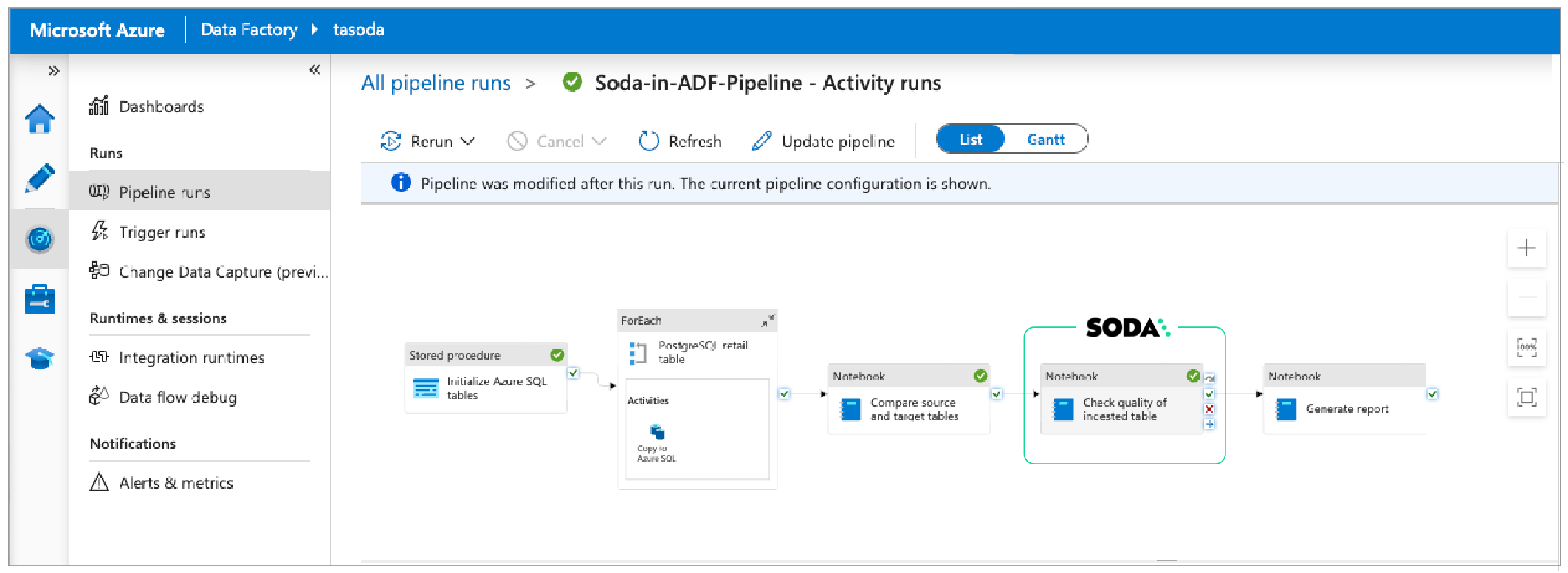

This example executes checks which, after a data migration, validate that the source and target data match. The first ADF Notebook Activity links to a notebook which contains the Soda connection details, the check definitions, and the script that runs a Soda scan to execute the reconciliation checks.

Download the notebook: Soda Synapse Recon notebook

In the ADF pipeline, the Data Engineer adds a Notebook activity for Synapse to the pipeline. In the Settings tab, they name the notebook Reconciliation Checks.

Next, in the Azure Synapse Analytics (Artifacts) tab, they create a new Azure Synapse Analytics linked service that serves to execute the Notebook activity.

In the Settings tab, they choose the Notebook and the base parameters to pass to it.

The Spark Pool that runs the notebook must have the Soda Library packages it needs to run scans of the data. Before creating the notebook in the Synapse workspace, they add a requirements.txt file that lists those packages to the Spark Pool. Access Spark Pool instructions. Because this example runs scans on both the source (PostgreSQL) and target (SQL Server) data sources, it requires two Soda Library packages.
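As a rough sketch, the file for this scenario might look like the following. The package names `soda-postgres` and `soda-sqlserver` are the Soda Library connectors for the two data sources. The index URL line is an assumption about how the Spark Pool resolves Soda's package index, so verify it against your pool's package management settings.

```text
--extra-index-url https://pypi.cloud.soda.io
soda-postgres
soda-sqlserver
```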

They create a new notebook in their Synapse workspace, then add the configuration that enables Soda to connect with the data sources and with Soda Cloud. For the sensitive data source login credentials and Soda Cloud API key values, the example fetches the values from an Azure Key Vault. Read more: [Integrate Soda with a secrets manager](#integrate-with-a-secrets-manager)
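The connection setup described above could be sketched as a small helper that assembles the Soda configuration YAML as a string. This is an illustration, not the contents of the downloadable notebook: the data source names (`postgres_retail`, `azure_sqlserver`), the secret names, and the `get_secret` callable are all hypothetical placeholders.

```python
from typing import Callable


def build_soda_config(get_secret: Callable[[str], str]) -> str:
    """Return a Soda configuration YAML string with secrets filled in.

    `get_secret` is a hypothetical callable that maps a secret name to its
    value, so the helper does not depend on any particular secrets manager.
    All secret names below are placeholders.
    """
    return f"""
data_source postgres_retail:
  type: postgres
  host: {get_secret("postgres-host")}
  port: "5432"
  username: {get_secret("postgres-username")}
  password: {get_secret("postgres-password")}
  database: postgres
  schema: public

data_source azure_sqlserver:
  type: sqlserver
  host: {get_secret("sqlserver-host")}
  port: "1433"
  username: {get_secret("sqlserver-username")}
  password: {get_secret("sqlserver-password")}
  database: {get_secret("sqlserver-database")}
  schema: dbo

soda_cloud:
  host: cloud.soda.io
  api_key_id: {get_secret("soda-api-key-id")}
  api_key_secret: {get_secret("soda-api-key-secret")}
"""
```

In a Synapse notebook, `get_secret` might wrap a Key Vault lookup such as `mssparkutils.credentials.getSecret("<vault name>", name)`. Keeping the lookup behind a callable means the secrets never appear in the notebook source.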

They define the SodaCL reconciliation checks inside another YAML string. The checks include check attributes which they created in Soda Cloud. Once the attributes are added to checks, the Data Engineer can use them to filter check results in Soda Cloud, build custom views (Collections), and stay organized as they monitor data quality in the Soda Cloud user interface.
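A minimal sketch of such a YAML string follows, built by a helper so that the data source and dataset names stay in one place. The reconciliation block name `Migration`, the `retail_customers` dataset in the usage example, and the `pipeline_stage` attribute are hypothetical. Any attribute used here is assumed to already exist in Soda Cloud.

```python
def build_recon_checks(source_datasource: str, target_datasource: str, dataset: str) -> str:
    """Return SodaCL reconciliation checks comparing one dataset across two data sources."""
    return f"""reconciliation Migration:
  label: "Reconcile {dataset} from {source_datasource} to {target_datasource}"
  datasets:
    source:
      dataset: {dataset}
      datasource: {source_datasource}
    target:
      dataset: {dataset}
      datasource: {target_datasource}
  checks:
    # Row counts in source and target must be identical.
    - row_count diff = 0:
        attributes:
          pipeline_stage: Migration
    # Record-level comparison: no rows may differ between source and target.
    - rows diff = 0:
        attributes:
          pipeline_stage: Migration
"""


recon_checks = build_recon_checks("postgres_retail", "azure_sqlserver", "retail_customers")
```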

Finally, they define the script that runs the Soda scan for data quality, executing the reconciliation checks that validate that the source and target data match. If scan.assert_no_checks_fail() raises an AssertionError, indicating that one or more checks failed during the scan, then the Azure Data Factory pipeline halts.
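The scan script might be sketched along these lines. The helper takes the scan object as an argument, which is a design choice made here for illustration and not necessarily how the downloadable notebook is organized. In the notebook, the object would be a `soda.scan.Scan` instance; the scan definition name and data source name are hypothetical.

```python
def run_scan(scan, data_source_name: str, config_yaml: str, checks_yaml: str, scan_name: str) -> None:
    """Configure and execute a Soda scan, raising AssertionError if any check fails.

    `scan` is expected to be a soda.scan.Scan instance. It is passed in so the
    helper can be exercised without Soda Library or a live data source.
    """
    scan.set_data_source_name(data_source_name)
    scan.set_scan_definition_name(scan_name)
    scan.add_configuration_yaml_str(config_yaml)
    scan.add_sodacl_yaml_str(checks_yaml)
    scan.execute()
    print(scan.get_logs_text())
    # An unhandled AssertionError fails the notebook run, which is what stops
    # the pipeline from continuing to the next activity.
    scan.assert_no_checks_fail()


# In the Synapse notebook (requires Soda Library on the Spark Pool):
# from soda.scan import Scan
# run_scan(Scan(), "azure_sqlserver", config_yaml, checks_yaml, "adf_reconciliation")
```

Letting the exception propagate, rather than catching it, is what ties the check results to the pipeline outcome.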

Add post-ingestion checks

Beyond reconciling the copied data, the Data Engineer uses SodaCL checks to gauge the completeness of data. In a new ADF Notebook Activity, they follow the same pattern as the reconciliation check notebook in which they configured connections to Soda Cloud and the data source, defined SodaCL checks, then prepared a script to run the scan and execute the checks.
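For illustration, completeness checks of this kind could be generated as follows. The dataset and column names are hypothetical, and the particular checks (a non-empty dataset and no missing values in required columns) are an assumption about what "completeness" covers, not a copy of the downloadable notebook.

```python
def build_completeness_checks(dataset: str, required_columns: list) -> str:
    """Return SodaCL checks asserting the dataset is non-empty and required columns have no missing values."""
    lines = [f"checks for {dataset}:", "  - row_count > 0"]
    for column in required_columns:
        lines.append(f"  - missing_count({column}) = 0")
    return "\n".join(lines) + "\n"


ingest_checks = build_completeness_checks("retail_customers", ["customer_id", "email"])
```

The resulting string can be passed to a scan in the same way as the reconciliation checks.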

Download the notebook: Soda Synapse Ingest notebook

Generate a data visualization report

The last activity in the pipeline is another Notebook Activity which runs a new Synapse notebook called Report. This notebook loads the data into a dataframe, creates a plot of the data, then saves the plot to an Azure Data Lake Storage location.

Download the notebook: Soda Synapse Report notebook

Review check results in Soda Cloud

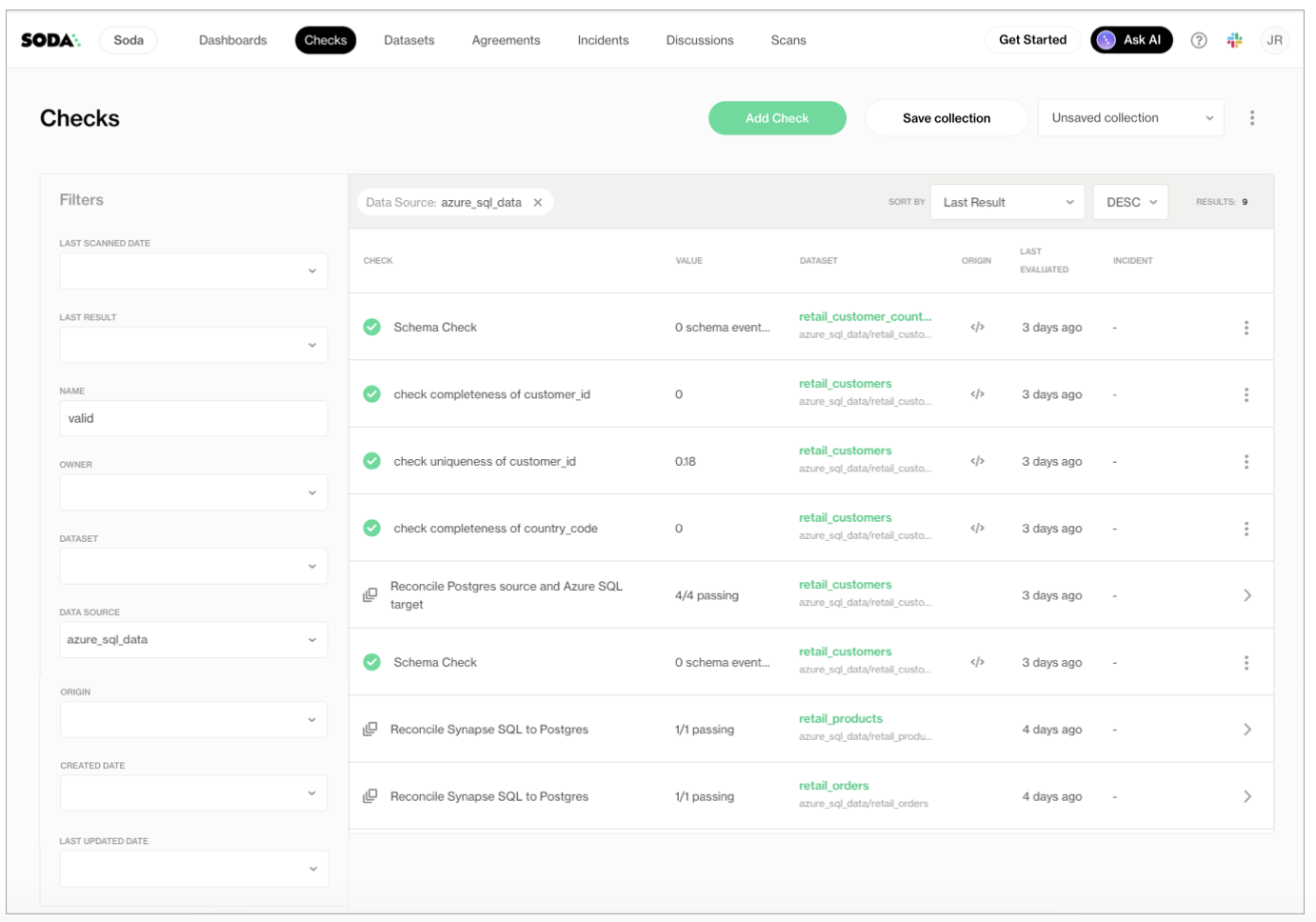

After running the ADF pipeline, the Data Engineer can access their Soda Cloud account to review the check results.

In the Checks page, they apply a filter to narrow the results to display only those associated with the Azure SQL Server data source against which Soda ran the data quality scans. Soda displays the results of the most recent scan.

Go further

Learn more about SodaCL checks and metrics.

Learn more about getting organized in Soda Cloud.

Set notification rules to receive alerts when checks fail.

Need help? Join the Soda community on Slack.
