Build a Sigma dashboard

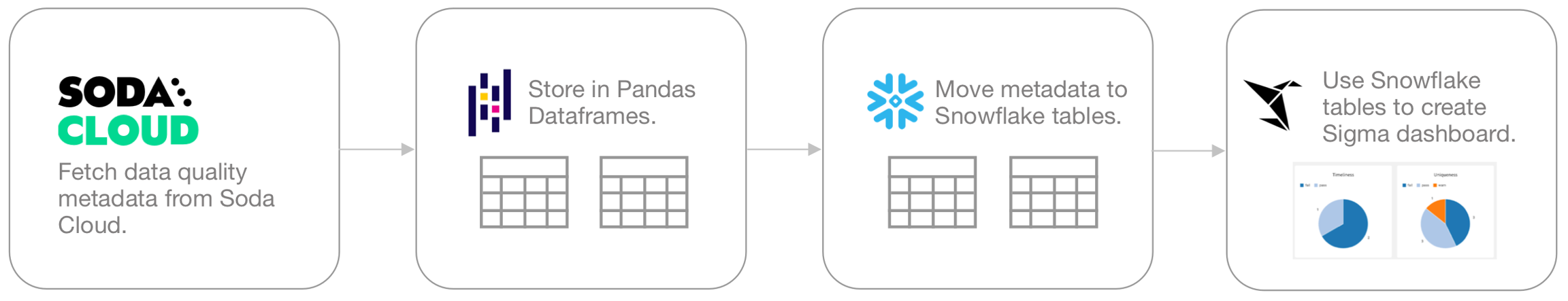

This guide offers a comprehensive example for building a customized data quality reporting dashboard in Sigma. Use the Soda Cloud API to capture metadata from your Soda Cloud account, store it in Snowflake, then access the data in Snowflake to create a Sigma dashboard.

Prerequisites

Python 3.8, 3.9, or 3.10

Pip 21.0 or greater

Access to an account in Sigma

Access to a Snowflake data source

A Soda Cloud account; see Get started

Permission in Soda Cloud to access dataset metadata; see Manage dataset roles

Set up a Python script

Install an HTTP request library and the Snowflake connector.
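A quick way to confirm the installation is to check that Python can find each module. This sketch assumes `requests` as the HTTP library and pandas for the DataFrames used later. The pip command in the comment is one way to install them. The `[pandas]` extra of the Snowflake connector adds its helpers for loading DataFrames.

```python
# Install the libraries first, for example:
#   pip install requests pandas "snowflake-connector-python[pandas]"
import importlib.util


def missing_modules(modules=("requests", "snowflake.connector", "pandas")):
    """Return the names of modules that Python cannot find in this environment."""
    missing = []
    for name in modules:
        try:
            if importlib.util.find_spec(name) is None:
                missing.append(name)
        except ModuleNotFoundError:
            # Raised for dotted names whose parent package (e.g. "snowflake") is absent
            missing.append(name)
    return missing


if __name__ == "__main__":
    not_found = missing_modules()
    print("Missing modules: " + ", ".join(not_found) if not_found else "All modules found.")
```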

In a new Python script, configure the following details to integrate with Soda Cloud. See Generate API keys for detailed instructions.
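Here is a minimal sketch of the Soda Cloud settings. It reads the API key ID and secret from environment variables rather than hard-coding them. Several details are assumptions to confirm against the Soda Cloud API documentation:

- the variable names `SODA_CLOUD_URL`, `SODA_API_KEY_ID`, and `SODA_API_KEY_SECRET`
- the default host
- sending the key pair as HTTP basic authentication

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class SodaCloudConfig:
    url: str
    api_key_id: str
    api_key_secret: str

    @property
    def auth(self):
        # Assumption: API keys are sent as HTTP basic auth (key ID, secret)
        return (self.api_key_id, self.api_key_secret)


def load_soda_config(env=os.environ):
    """Read Soda Cloud settings from hypothetical environment variable names."""
    # EU accounts typically use https://cloud.soda.io, US accounts https://cloud.us.soda.io
    url = env.get("SODA_CLOUD_URL", "https://cloud.soda.io").rstrip("/")
    key_id = env.get("SODA_API_KEY_ID")
    secret = env.get("SODA_API_KEY_SECRET")
    if not key_id or not secret:
        raise ValueError("Set SODA_API_KEY_ID and SODA_API_KEY_SECRET")
    return SodaCloudConfig(url, key_id, secret)
```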

In the same script, define the tables in Snowflake in which to store the Soda dataset information and check results. Give them uppercase names. Snowflake stores unquoted identifiers in uppercase, so uppercase names avoid case-sensitivity mismatches when a table is referenced with or without quotes.
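The sketch below keeps the table names as uppercase, unquoted identifiers. The names `SODA_DATASETS` and `SODA_CHECKS` and the helper `snowflake_table_name` are hypothetical. The validation follows Snowflake's rule for unquoted identifiers: a letter or underscore first, then letters, digits, underscores, or dollar signs.

```python
import re

# Unquoted Snowflake identifiers resolve to uppercase, so SODA_DATASETS,
# soda_datasets, and "SODA_DATASETS" all refer to the same table.
_UNQUOTED_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")


def snowflake_table_name(name):
    """Validate a name as an unquoted identifier and return it in uppercase."""
    if not _UNQUOTED_IDENTIFIER.fullmatch(name):
        raise ValueError(f"Not a valid unquoted Snowflake identifier: {name!r}")
    return name.upper()


# Hypothetical table names; choose your own
DATASETS_TABLE = snowflake_table_name("soda_datasets")
CHECKS_TABLE = snowflake_table_name("soda_checks")
```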

In the same script, configure your Snowflake connection details. This configuration enables your script to securely access your Snowflake data source.
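One way to hold the connection details is a dictionary of keyword arguments for `snowflake.connector.connect`, built from environment variables. The variable names and both helper functions are hypothetical. Password authentication keeps the sketch short; the connector also supports other authentication methods.

```python
import os


def snowflake_params(env=os.environ):
    """Collect keyword arguments for snowflake.connector.connect (hypothetical env names)."""
    # Required settings raise KeyError if missing
    params = {
        "account": env["SNOWFLAKE_ACCOUNT"],
        "user": env["SNOWFLAKE_USER"],
        "password": env["SNOWFLAKE_PASSWORD"],
        "warehouse": env["SNOWFLAKE_WAREHOUSE"],
        "database": env["SNOWFLAKE_DATABASE"],
        "schema": env["SNOWFLAKE_SCHEMA"],
    }
    # Optional role; when omitted, Snowflake uses the user's default role
    if env.get("SNOWFLAKE_ROLE"):
        params["role"] = env["SNOWFLAKE_ROLE"]
    return params


def connect_to_snowflake(env=os.environ):
    """Open a connection; the connector is imported only when actually connecting."""
    import snowflake.connector

    return snowflake.connector.connect(**snowflake_params(env))
```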

In the script, prepare an HTTP GET request to the Soda Cloud API to retrieve dataset information. Direct the request to the Dataset information endpoint, including the authentication API keys to access the data. This script prints an error if the request is unauthorized.

Run the script to ensure that the GET request results in HTTP status code 200, confirming the successful connection to Soda Cloud.
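The request and status check described above might look like the sketch below. It makes two assumptions:

- The Dataset information endpoint is `GET /api/v1/datasets` with a `page` query parameter.
- A 401 or 403 response signals an authorization problem.

Check both against the Soda Cloud API reference. The `http_get` parameter is a hypothetical hook that lets you pass a stub instead of `requests.get`.

```python
# Assumption: the Dataset information endpoint is GET /api/v1/datasets with a
# zero-based "page" query parameter; confirm against the Soda Cloud API reference.
DATASETS_PATH = "/api/v1/datasets"


def get_datasets_page(base_url, auth, page=0, http_get=None):
    """Request one page of dataset information from Soda Cloud."""
    if http_get is None:
        import requests  # imported lazily so a stub can be passed in for testing

        http_get = requests.get
    return http_get(
        f"{base_url}{DATASETS_PATH}", params={"page": page}, auth=auth, timeout=30
    )


def check_connection(response):
    """Return True for HTTP 200; print an error and return False otherwise."""
    if response.status_code in (401, 403):
        print("Unauthorized: check your API keys and dataset permissions in Soda Cloud.")
        return False
    if response.status_code != 200:
        print(f"Unexpected HTTP status code: {response.status_code}")
        return False
    return True
```

With valid keys, calling `check_connection` on the response from `get_datasets_page` should return `True` without printing an error.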

Capture and store metadata

1. With a working connection to Soda Cloud, adjust the API call to extract all dataset information from Soda Cloud, iterating over each page of datasets. Then, create a Pandas DataFrame to hold the retrieved metadata. The adjusted call retrieves the following for each dataset:

- its name
- the time of its last update
- the data source in which it exists
- its health status
- the number of checks and incidents associated with it
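Here is a sketch of the paging loop. It assumes each response carries a `content` list and a `totalPages` count, and that the API answers HTTP 429 when its rate limit is reached. The 30-second pause and the retry limit are arbitrary choices. `pandas.json_normalize` flattens nested objects, such as the data source, into dotted column names.

```python
import time

# Assumptions: JSON responses hold a "content" list and a "totalPages" count,
# and HTTP 429 means the API rate limit was reached.
RATE_LIMIT_PAUSE_SECONDS = 30


def fetch_all_pages(base_url, path, auth, http_get=None, sleep=time.sleep, max_retries=5):
    """Collect the "content" records from every page of a paginated endpoint."""
    if http_get is None:
        import requests

        http_get = requests.get
    records, page, total_pages, retries = [], 0, 1, 0
    while page < total_pages:
        resp = http_get(f"{base_url}{path}", params={"page": page}, auth=auth, timeout=30)
        if resp.status_code == 429 and retries < max_retries:
            retries += 1
            sleep(RATE_LIMIT_PAUSE_SECONDS)  # back off, then retry the same page
            continue
        if resp.status_code != 200:
            raise RuntimeError(f"Page {page} failed with HTTP {resp.status_code}")
        body = resp.json()
        total_pages = body.get("totalPages", 0)
        records.extend(body.get("content", []))
        page, retries = page + 1, 0
    return records


def to_dataframe(records):
    """Flatten nested fields into columns such as "datasource.name"."""
    import pandas as pd

    return pd.json_normalize(records)
```

To build the dataset DataFrame, pass the datasets path (assumed to be `/api/v1/datasets`) to `fetch_all_pages`, then pass the resulting records to `to_dataframe`.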

2. Inspect the information you retrieved with a Pandas command, for example by printing the first rows of the DataFrame; see the example output below.
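For example, printing the first few rows with all columns visible gives a quick view of what the API returned. The `preview` helper is hypothetical. `pd.option_context` changes the display settings only inside the `with` block.

```python
import pandas as pd


def preview(df, n=5):
    """Print the DataFrame's size and its first n rows with every column shown."""
    print(f"{df.shape[0]} rows x {df.shape[1]} columns")
    # Display settings revert automatically when the with block exits
    with pd.option_context("display.max_columns", None, "display.width", 200):
        print(df.head(n))
    return df.head(n)
```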

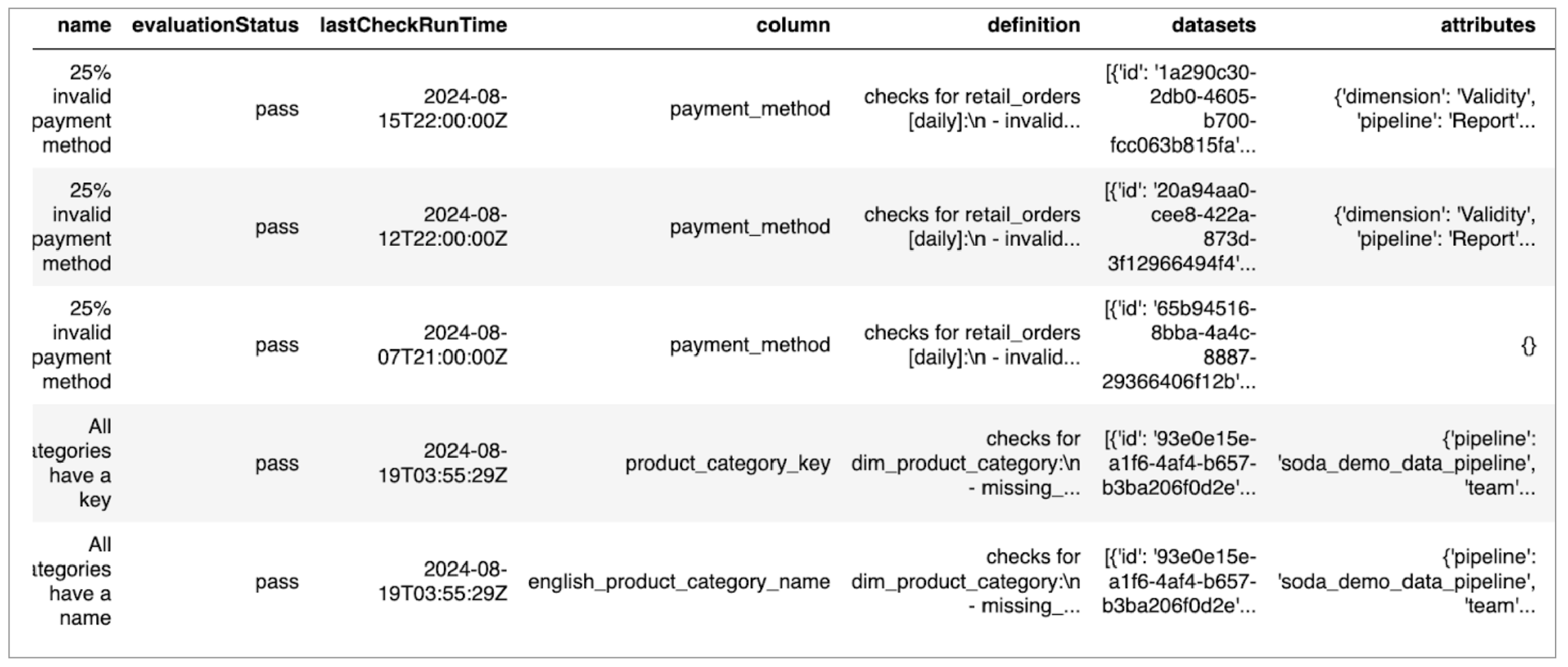

3. Following the same logic, extract all the check-related information from Soda Cloud using the Checks information endpoint.

This call retrieves information about each check in Soda Cloud, including the dataset and column it runs against, the time of its latest evaluation, the result of that evaluation (pass, warn, or fail), and any attributes associated with the check.
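For checks, the paging loop is the same as for datasets, with the Checks information endpoint in place of the dataset one. Check records are more nested, so flattening them helps before you build the DataFrame. This sketch makes assumptions about the response shape:

- The field names `evaluationStatus`, `lastCheckRunTime`, `datasets`, and `attributes` are assumptions.
- The `attr_` column prefix is a hypothetical naming convention.

```python
# Assumed check record shape; the field names below are illustrative and may
# differ from the actual Checks information endpoint response.
def flatten_check(check):
    """Turn one nested check record into a flat row suitable for a DataFrame."""
    datasets = check.get("datasets") or []
    row = {
        "check_name": check.get("name"),
        "dataset": datasets[0].get("name") if datasets else None,
        "column": check.get("column"),
        "result": check.get("evaluationStatus"),
        "last_run": check.get("lastCheckRunTime"),
    }
    # Promote each check attribute (e.g. dimension, weight) to its own column
    for key, value in (check.get("attributes") or {}).items():
        row[f"attr_{key}"] = value
    return row


def flatten_checks(checks):
    """Flatten a list of check records."""
    return [flatten_check(check) for check in checks]
```

Building the DataFrame from `flatten_checks(...)` gives one column per attribute, which makes filtering by dimension or weight straightforward later on.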

4. Again, inspect the output with a Pandas command.
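This sketch covers two summaries worth checking at this point:

- how many checks currently pass, warn, or fail
- how those outcomes spread across a check attribute such as the data quality dimension

The column names `result` and `attr_dimension` are hypothetical; use the names in your own DataFrame.

```python
import pandas as pd


def outcome_counts(checks_df, result_col="result"):
    """Number of checks per latest outcome, e.g. pass, warn, fail."""
    return checks_df[result_col].value_counts()


def outcomes_by(checks_df, group_col, result_col="result"):
    """Cross-tabulate outcomes against another column, such as a check attribute."""
    return pd.crosstab(checks_df[group_col], checks_df[result_col])
```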

5. Finally, move the two sets of metadata into your Snowflake data source. Optionally, to track changes to dataset and check metadata over time, you can store the metadata in incremental tables. You can then set up a flow that regularly updates them with the latest information retrieved from Soda Cloud.
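Here is a sketch of the load step. It uses `write_pandas` from the Snowflake connector's `pandas_tools` module, which the `[pandas]` extra installs.

- **Column names:** the sketch uppercases and cleans them. Snowflake then treats them like unquoted identifiers, even though `write_pandas` quotes them.
- **Incremental loads:** with `incremental=True`, the sketch appends rows instead of replacing the table. Each row is tagged with a hypothetical `SNAPSHOT_AT` column, stored as ISO 8601 text.
- **Connector version:** the `auto_create_table` and `overwrite` options need a reasonably recent version of the connector.

```python
import re
from datetime import datetime, timezone


def snowflake_column_names(columns):
    """Uppercase names and replace characters that unquoted identifiers disallow."""
    cleaned = []
    for name in columns:
        col = re.sub(r"[^A-Za-z0-9_$]", "_", str(name)).upper()
        if not re.match(r"[A-Z_]", col):
            col = "_" + col  # identifiers must start with a letter or underscore
        cleaned.append(col)
    return cleaned


def load_to_snowflake(conn, df, table_name, incremental=False):
    """Write a DataFrame to Snowflake, replacing the table or appending a snapshot."""
    from snowflake.connector.pandas_tools import write_pandas

    df = df.copy()
    df.columns = snowflake_column_names(df.columns)
    if incremental:
        # Tag each load so the dashboard can compare snapshots over time
        df["SNAPSHOT_AT"] = datetime.now(timezone.utc).isoformat()
    success, _nchunks, nrows, _output = write_pandas(
        conn,
        df,
        table_name,
        auto_create_table=True,
        overwrite=not incremental,  # full refresh unless appending snapshots
    )
    return success, nrows
```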

Run the script to populate the tables in Snowflake with the metadata pulled from Soda Cloud.

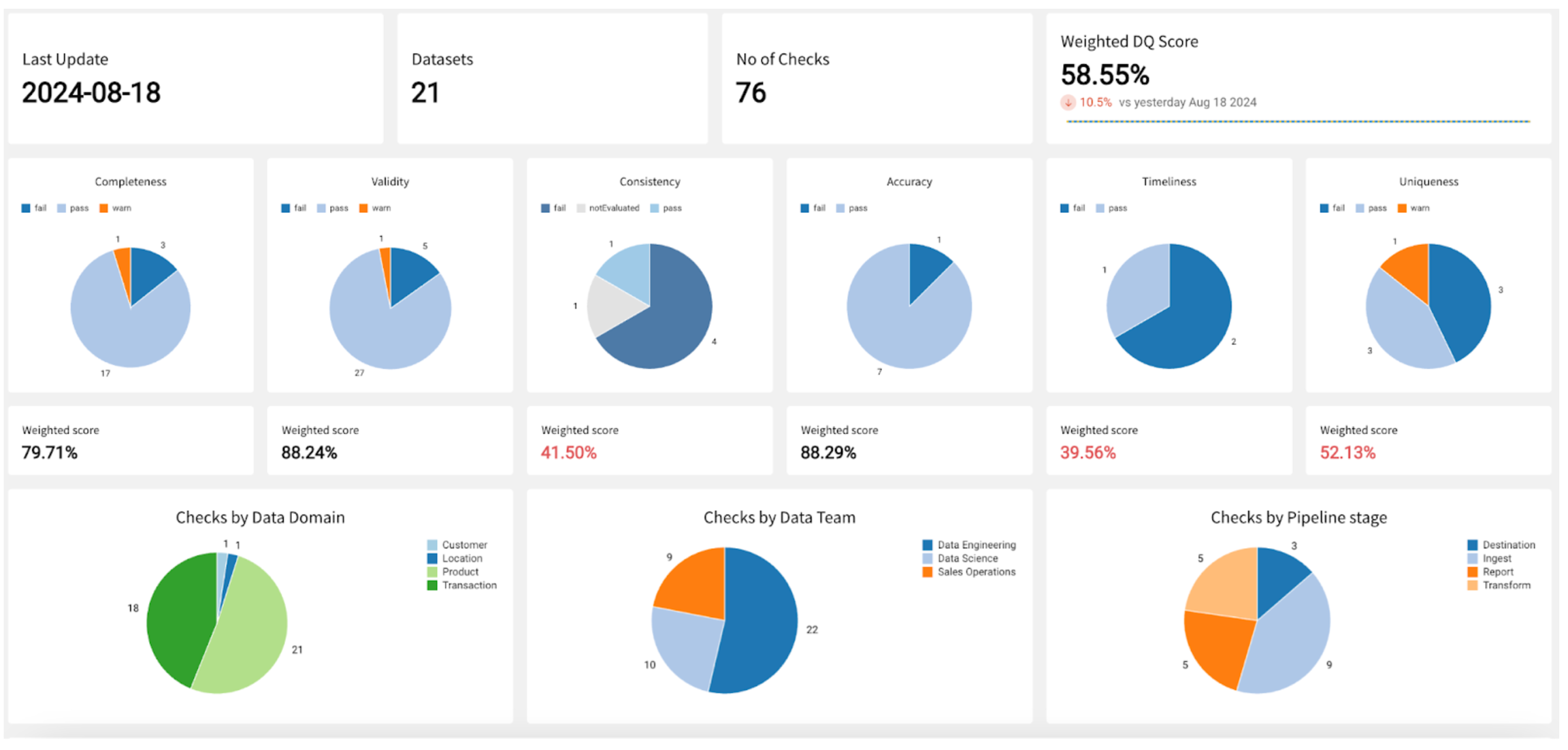

Build a data quality dashboard in Sigma

To build a custom dashboard, this example uses Sigma, a cloud-based analytics and business intelligence platform designed to facilitate data exploration and analysis. You can also build a similar dashboard with another tool, such as Metabase, Lightdash, Looker, Power BI, or Tableau.

This example leverages check attributes, an optional configuration that helps you categorize or segment check results. Attributes let you better filter and organize not only your views in Soda Cloud but also your customized dashboard. Checks in this example use the following attributes:

Data Quality Dimension: Completeness, Validity, Consistency, Accuracy, Timeliness, Uniqueness

Data Domain: Customer, Location, Product, Transaction

Data Team: Data Engineering, Data Science, Sales Operations

Pipeline Stage: Destination, Ingest, Report, Transform

Weight

The Weight attribute is particularly useful. It assigns a numerical level of importance to each check, which you can use to calculate a custom, weighted data quality score.

Follow the Sigma documentation to Connect to Snowflake.

Follow the Sigma documentation to access the metadata you stored in Snowflake, either by Modeling data from database tables or by Creating a dataset by writing custom SQL.

Create a new workbook in Sigma where you can create your visualizations.

The Sigma dashboard below tracks data quality status within an organization. It includes basic KPIs, such as the number of datasets that Soda monitors and the number of checks that Soda regularly executes. It also displays a weighted data quality score, shown here by data quality dimension. The score is based on the custom value in each check's Weight attribute, and the dashboard compares it with previous measurements gathered over time.
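There are several ways to compute a weighted score like this. One sketch, per dimension:

1. Multiply each check's weight by the credit for its latest outcome and sum the results.
2. Divide by the total weight.

That is, score = 100 * sum(weight * credit) / sum(weight). Several choices here are illustrative assumptions, not Soda's scoring method:

- the credit values (pass 1, warn 0.5, fail 0)
- the default weight of 1
- the record keys `dimension`, `weight`, and `outcome`

```python
from collections import defaultdict

# Illustrative credit per outcome; adjust to your own scoring policy
OUTCOME_CREDIT = {"pass": 1.0, "warn": 0.5, "fail": 0.0}


def weighted_scores(checks, group_key="dimension", credit=OUTCOME_CREDIT):
    """Per-group score in percent: 100 * sum(weight * credit) / sum(weight)."""
    earned = defaultdict(float)
    total = defaultdict(float)
    for check in checks:
        outcome = check.get("outcome")
        if outcome not in credit:
            continue  # skip checks without a pass, warn, or fail result
        raw_weight = check.get("weight")
        # Attribute values may arrive as strings; unset weights count as 1
        weight = 1.0 if raw_weight in (None, "") else float(raw_weight)
        group = check.get(group_key) or "Unassigned"
        earned[group] += weight * credit[outcome]
        total[group] += weight
    return {g: round(100 * earned[g] / total[g], 1) for g in total if total[g] > 0}
```

Storing each run's scores with a timestamp provides the history needed to compare the current score with earlier measurements.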

Go further

Access full Soda Cloud API and Soda Cloud Reporting API documentation.

Learn more about check attributes and dataset attributes.

Need help? Join the Soda community on Slack.
