Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Access configuration details to connect Soda to Databricks using a Spark data source.

You can use the Soda Library packages for Apache Spark to connect to Databricks SQL or to use Spark DataFrames on Databricks.

Refer to Connect to Spark for Databricks SQL.

Refer to Use Soda Library with Spark DataFrames on Databricks. 🎥 Watch a video that demonstrates how to add Soda to your Databricks pipeline: https://go.soda.io/soda-databricks-video

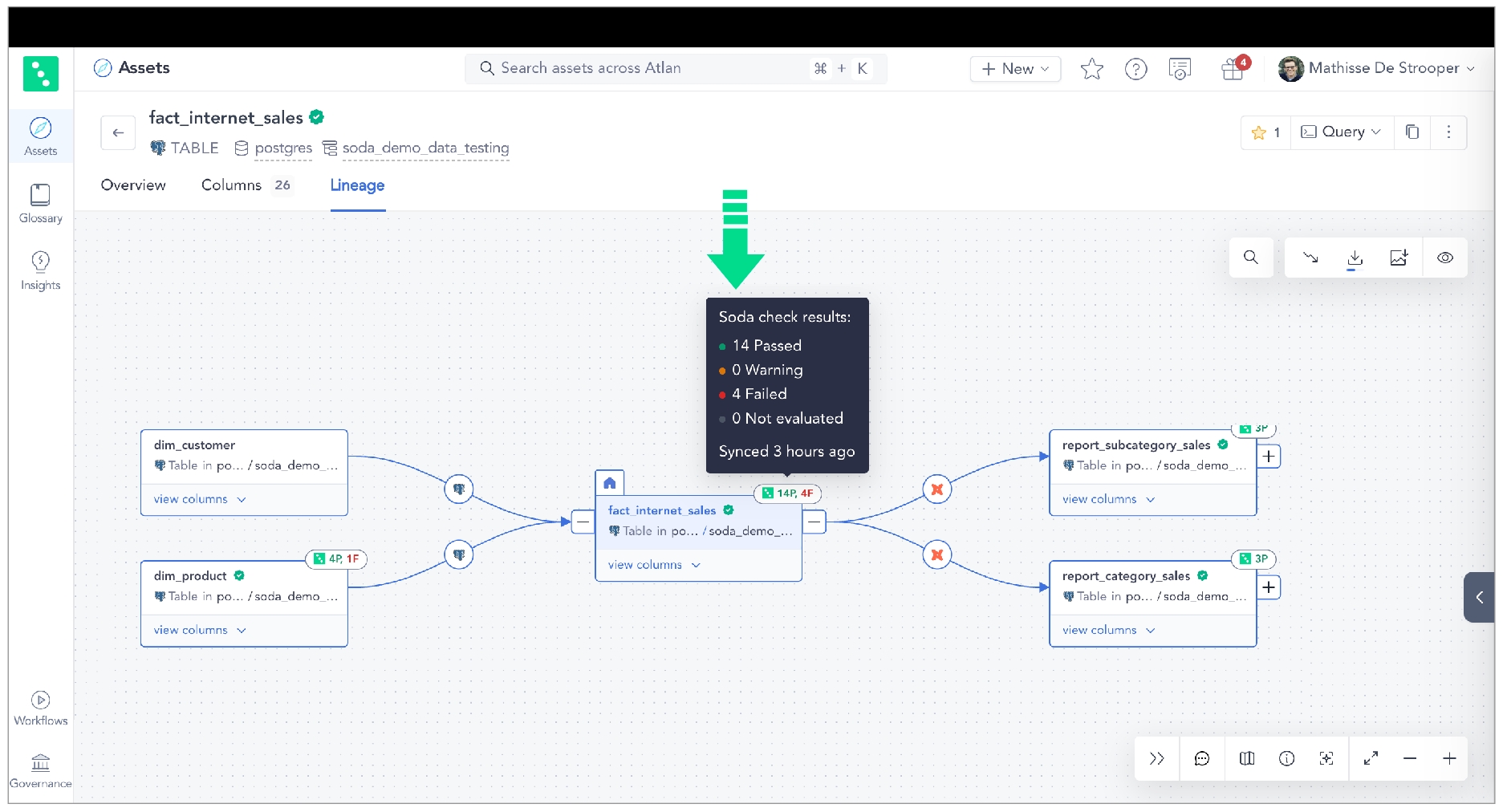

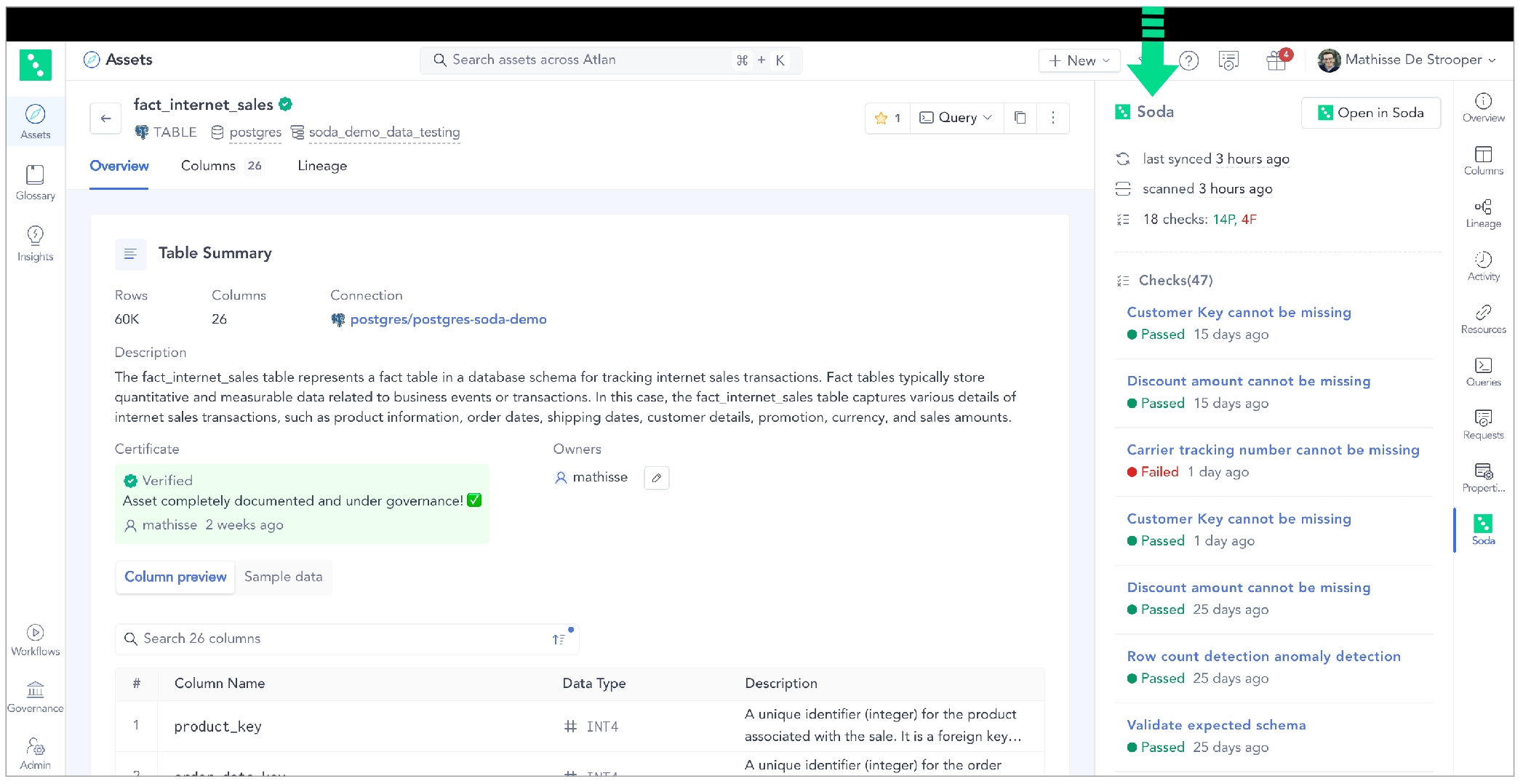

Integrate Soda with Atlan to access details about the quality of your data from right within your data catalog.

Integrate Soda with Atlan to access details about the quality of your data from within the data catalog.

Run data quality checks using Soda and visualize quality metrics and rules within the context of a data source, dataset, or column in Atlan.

Use Soda Cloud to flag poor-quality data in lineage diagrams.

Give your Atlan users the confidence of knowing that the data they are using is sound.

You have completed at least one Soda scan to validate that the data source’s datasets appear in Soda Cloud as expected.

You have an Atlan account with the privileges necessary to allow you to set up a Connection in your Atlan workspace.

Follow the instructions to Generate API keys in Soda to use for authentication in your Atlan connection.

Follow Atlan's documentation to set up the Connection to Soda in your Atlan workspace.

🎥 Watch the Atlan-Soda integration in action!

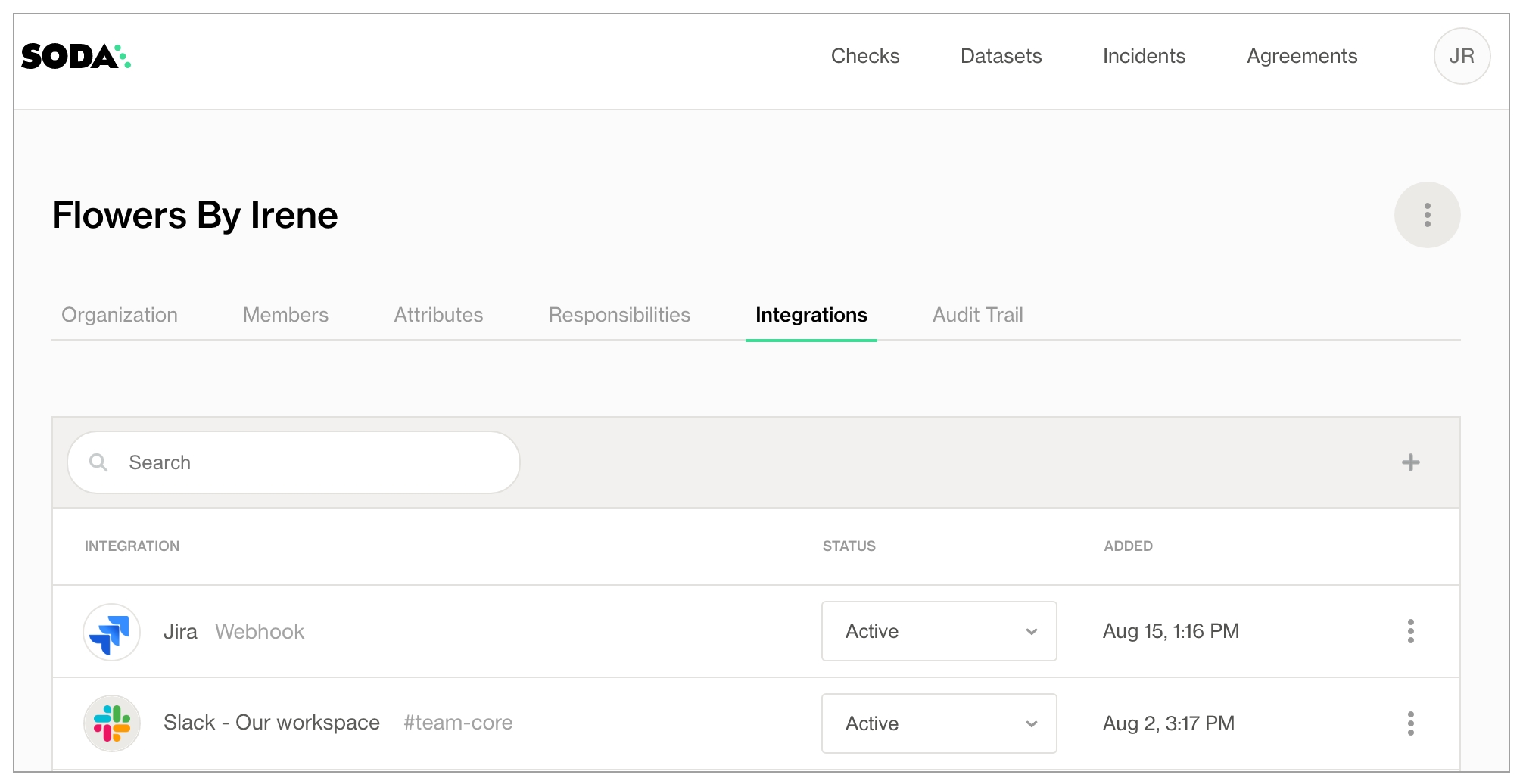

Access a list of all integrations that Soda Cloud supports.

Use a webhook to integrate with Jira, ServiceNow, and other tools your team already uses.

Soda is a data quality platform that provides tools to monitor, test, and improve data quality across all stacks.

Welcome to the Soda documentation hub, your one-stop resource for everything you need to know about Soda’s data quality platform. Dive into our guides, tutorials, reference materials, and integration pages to learn how keep your data quality fresh across your entire stack.

This is the documentation for Soda v3. If you are using Soda v4 or want to learn more about the next iteration of Soda, head to the .

Soda v3 is a checks-based, CLI-driven data quality tool.

Soda v4 has incorporated collaborative data contracts and end-to-end observability features to become a unified data-quality platform for all.

Learn core concepts and best practices:

: Practical Soda usage scenarios

: Define data quality checks

: Execute Soda data scans

: Check results and investigate issues

Extend Soda into your existing tools and workflows:

Detailed command, API, and configuration docs:

Need help or want to contribute?

Join our Slack Community:

Browse GitHub Discussions:

Still have questions? Use the search bar above or reach out through our community channels for additional help.

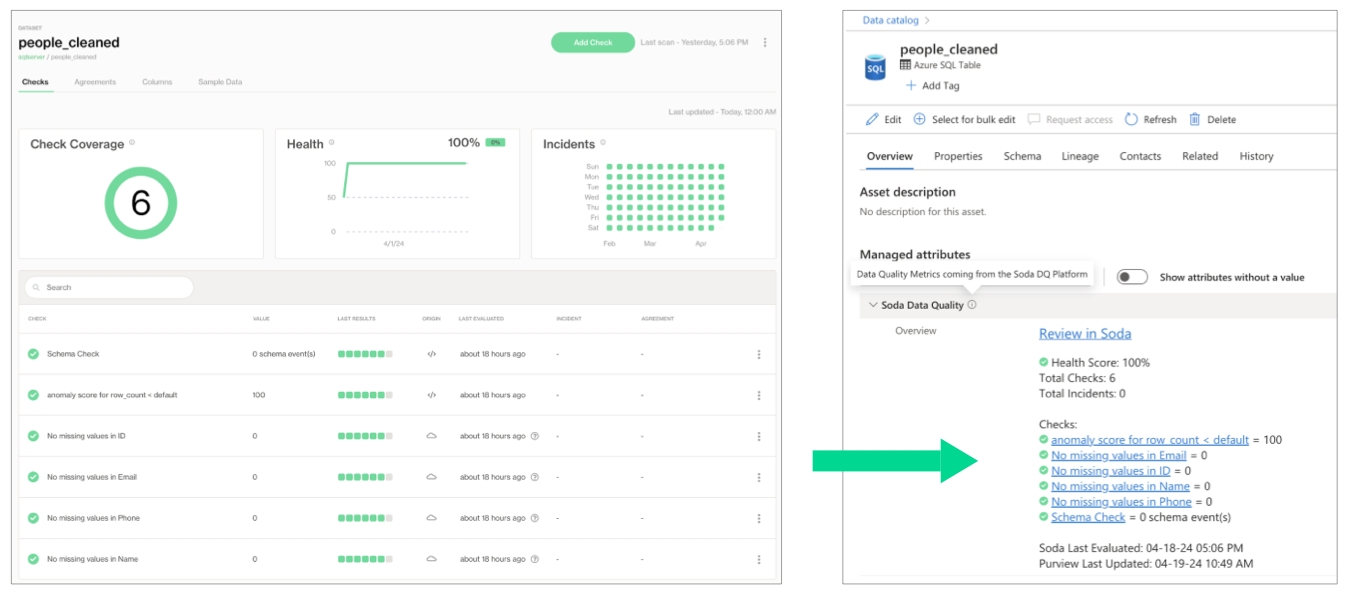

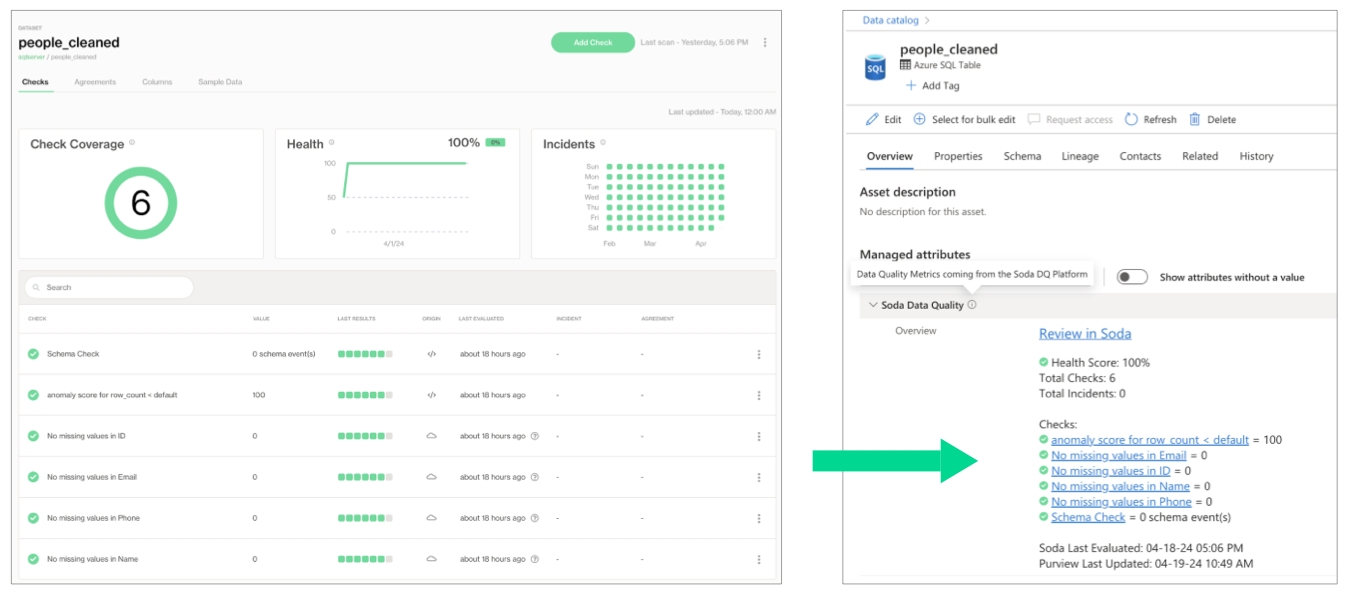

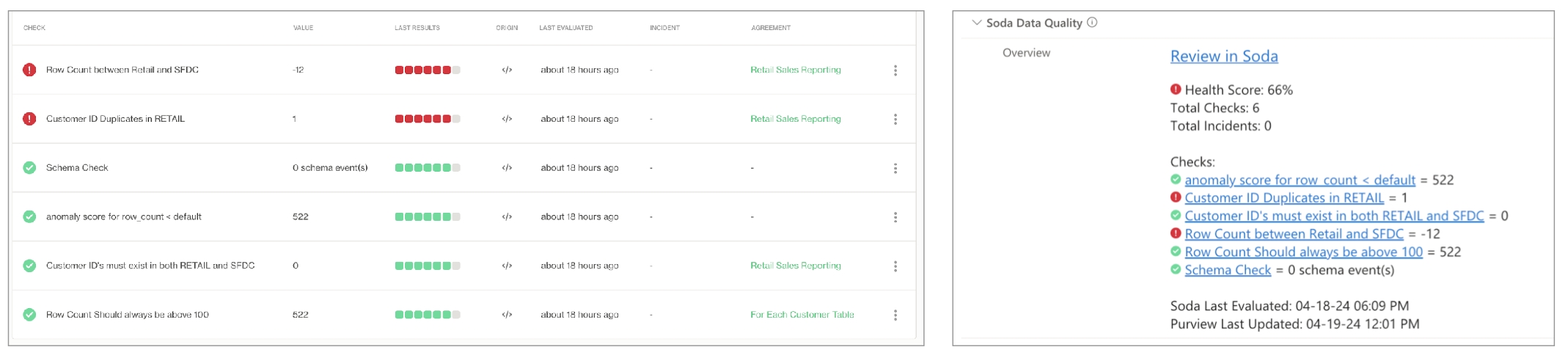

Integrate Soda with Microsoft Purview to access details about the quality of your data from right within your data catalog.

Integrate Soda with Microsoft's Purview data catalog to access details about the quality of your data from within the catalog.

Run data quality checks using Soda and visualize quality metrics and rules within the context of a table in Purview.

Give your Purview-using colleagues the confidence of knowing that the data they are using is sound.

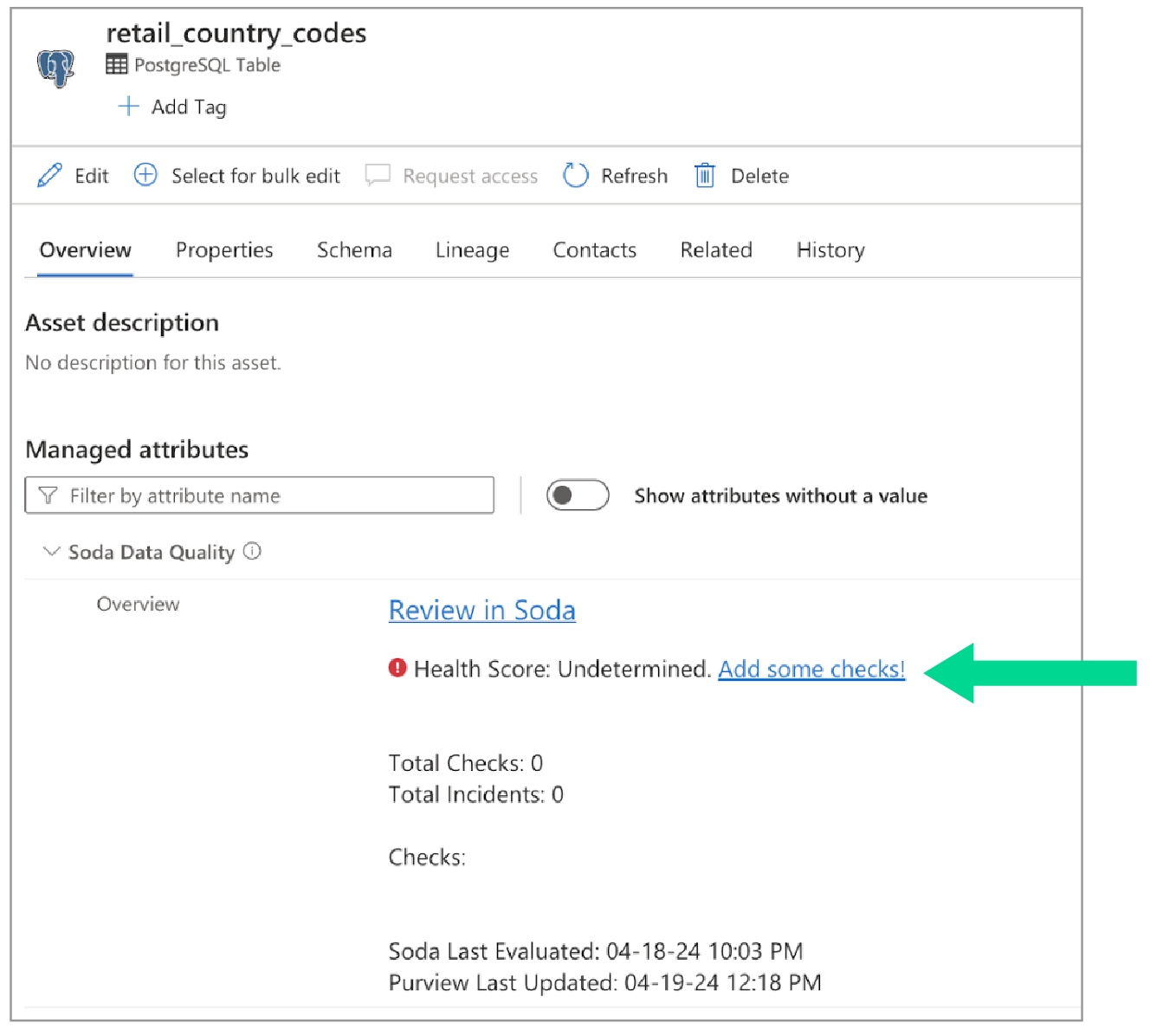

Encourage others to add data quality checks using a link in Purview that connects directly to Soda.

In Purview, you can see all the Soda data quality checks and the value associated with the check's latest measurement, the health score of the dataset, and the timestamp for the most recent update. Each of these checks listed in Purview includes a link that opens a new page in Soda Cloud so you can examine diagnostic and historic information about the check.

Purview displays the latest check results according to the most recent Soda scan for data quality, where color-coded icons indicate the latest result. A gray icon indicates that a check was not evaluated as part of a scan.

If Soda is performing no data quality checks on a dataset, the instructions in Purview invite a catalog user to access soda and create new checks.

You have completed at least one to validate that the data source’s datasets appear in Soda Cloud as expected.

You have a Purview account with the privileges necessary to collect the information Soda needs to complete the integration.

The data source that contains the data you wish to check for data quality is available in Purview.

Sign into your Soda Cloud account and confirm that you see the datasets you expect to see in the data source you wish to test for quality.

In your Soda Cloud account, navigate to your avatar > Profile, then navigate to the API Keys tab. Click the plus icon to generate new API keys.

Copy the following values and paste to a temporary, secure, local location.

API Key ID

API Key Secret

Access for instructions on how to create the following values, then paste to a temporary, secure, local location.

client_id

client_secret

tenant_id

Copy the value of your purview endpoint from the URL (https://XXX.purview.azure.com) and paste to a temporary, secure, local location.

To connect your Soda Cloud account to your Purview Account, contact your Soda Account Executive or email with the details you collected in the previous steps to request Purview integration.

Access configuration details to connect Soda to a Google CloudSQL data source.

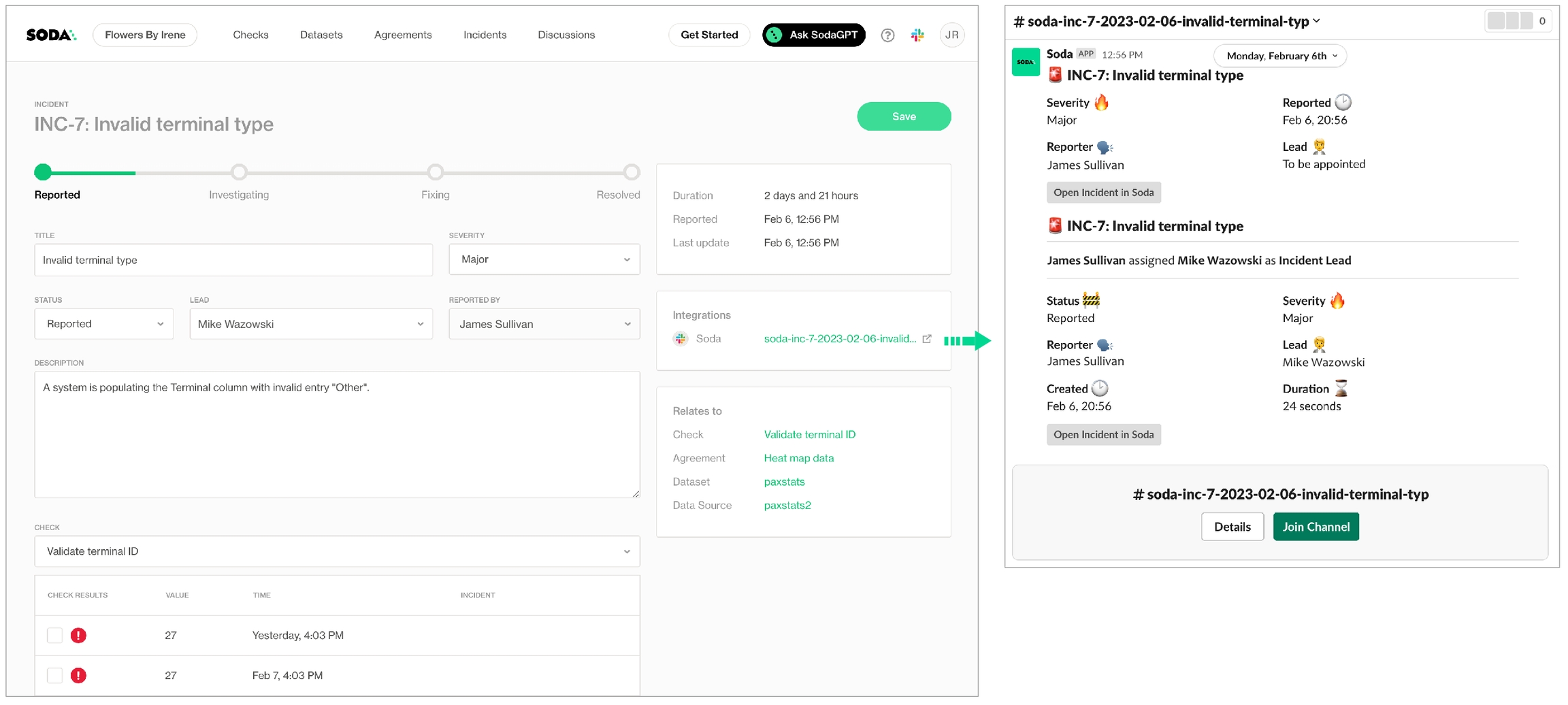

Integrate your Slack workspace in your Soda Cloud account so that Soda Cloud can send Slack notifications to your team when a data issue triggers an alert.

As a user with permission to do so in your Soda Cloud account, you can integrate your Slack workspace in your Soda Cloud account so that Soda Cloud can interact with individuals and channels in the workspace. Use the Slack integration to:

send notifications to Slack when a check result triggers an alert

create a private channel whenever you open new incident to investigate a failed check result

track Soda Discussions wherein your fellow Soda users collaborate on data quality checks

In Soda Cloud, navigate to your avatar > Organization Settings, then navigate to the Integrations tab and click the + icon to add a new integration.

Follow the guided steps to authorize Soda Cloud to connect to your Slack workspace. If necessary, contact your organization’s Slack Administrator to approve the integration with Soda Cloud.

Configuration tab: select the public channels to which Soda can post messages; Soda cannot post to private channels.

Note that Soda caches the response from the Slack API, refreshing it hourly. If you created a new public channel in Slack to use for your integration with Soda, be aware that the new channel may not appear in the Configuration tab in Soda until the hourly Slack API refresh is complete.

You can use this integration to enable Soda Cloud to send alert notifications to a Slack channel to notify your team of warn and fail check results.

With such an integration, Soda Cloud enables users to select a Slack channel as the destination for an alert notification of an individual check or checks that form a part of an agreement, or multiple checks.

To send notifications that apply to multiple checks, see .

You can use this integration to notify your team when a new incident has been created in Soda Cloud. With such an integration, Soda Cloud displays an external link to an incident-specific Slack channel in the Incident Details.

Refer to for more details about using incidents in Soda Cloud.

You can set a default Slack channel that Soda Cloud applies to all alert notifications. If you have not already set the default Slack channel when you initially set up the integration, you can edit it to set the default.

In your Soda Cloud account, go to your avatar > Organization Settings.

Go to the Integrations tab, then click the stacked dots to the right of the Slack integration. Select Edit Integration Settings.

In the Slack Channels dialog, go to the Scope tab.

Set that apply to multiple checks in your account.

Learn more about using Slack to collaborate on resolving .

Access a list of that Soda Cloud supports.

Access reference configuration to connect Soda to a MotherDuck data source.

Install package: soda-duckdb

Refer to MotherDuck instructions for further detail.

data_source quack:

type: duckdb

database: "md:sample_data?motherduck_token=eyJhbGciOxxxxx.eyJzZXxxxxx.l4sxxxxx"

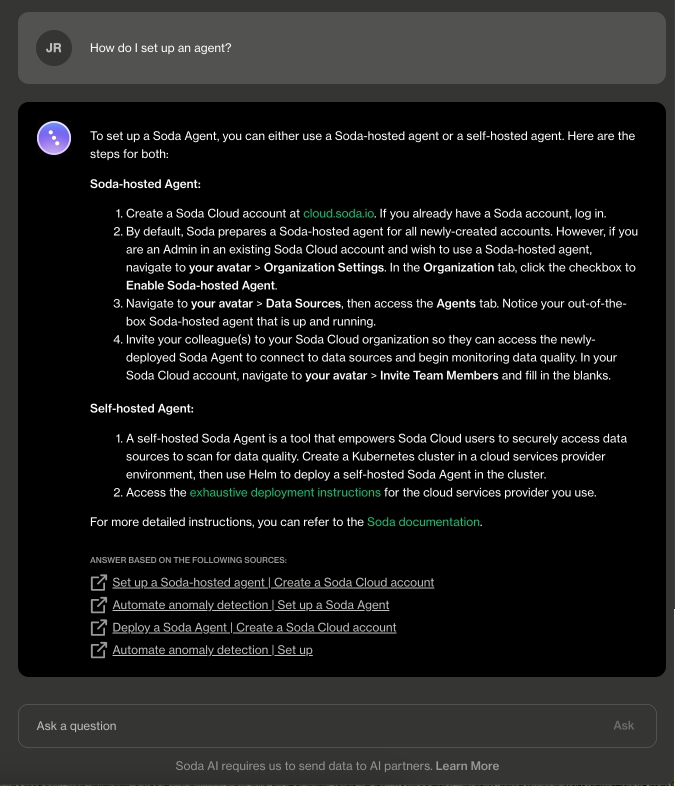

read_only: trueUse Soda's Ask AI assistant to turn natural language into production-ready data quality checks in SodaCL.

Ask AI is an in-product generative AI assistant for data quality testing. Ask AI replaces SodaGPT, the original implementation of a generative AI assistant.

✖️ Requires Soda Core Scientific (included in a Soda Agent) ✖️ Supported in Soda Core ✔️ Supported in Soda Library + Soda Cloud ✔️ Supported in Soda Cloud + Soda Agent

to your Soda Cloud account, click the Ask AI button in the main nav, then provide natural language instructions to the interface to:

receive fully-formed, syntax-correct checks in the

Generate API keys to securely connect Soda Library or a Soda Agent to Soda Cloud, or to access Soda Cloud via API.

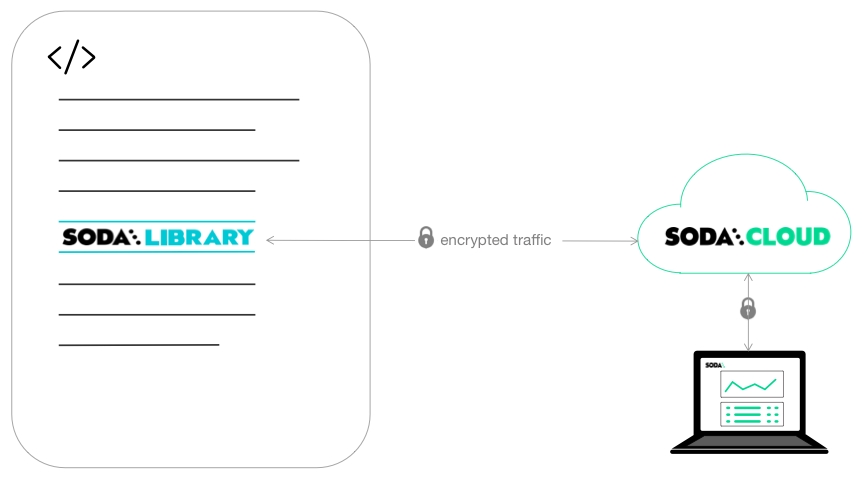

Soda Cloud uses API keys to securely communicate with other entities such as Soda Library and self-hosted Soda Agents, and to provide secure access to Soda Cloud via API.

There are two sets of API keys that you can generate and use with Soda Cloud:

API keys for communicating with Soda Library, the Soda Cloud API or Soda Cloud Reporting API, and the Soda Library Docker image that the GitHub Action for Soda uses

API keys for communicating with a self-hosted Soda Agent

Get started with Soda! Use this curated set of instructions to quickly get data quality tests up and running.

The Soda environment has been updated since this tutorial.

Refer to for updated tutorials.

The roadmap to get started offers a curated experience to help you get from zero to productive with Soda software.

However, if a guided experience is not your style, take a different path!

data_source my_datasource_name:

type: postgres

host: db

port: "5432"

username: simple

password: simple_pass

database: postgres

schema: publicData Testing

(Checks)

CLI-centric checks written in YAML/SodaCL, run via the Python library or Agent.

Still supports YAML/SodaCL checks.

Adds full Data Testing workflows in both CLI and Web UI.

Data Observability

(Monitoring)

Anomaly dashboards provide threshold-based monitoring configured via Soda Cloud.

Metric Monitoring leverages an in-house anomaly detection algorithm to monitor data and metadata metric trends, and provide built-in alerts via Soda Cloud.

Data Contracts

File-based contracts executed via CLI/Git; verification via soda scan.

Collaborative data contracts: file-based and UI-based, executed via CLI/Git or the Soda Cloud UI.

Scope tab: select the Soda features (alert notifications and/or incidents) which can access the Slack integration.

obtain advice about how to resolve an error while using Soda

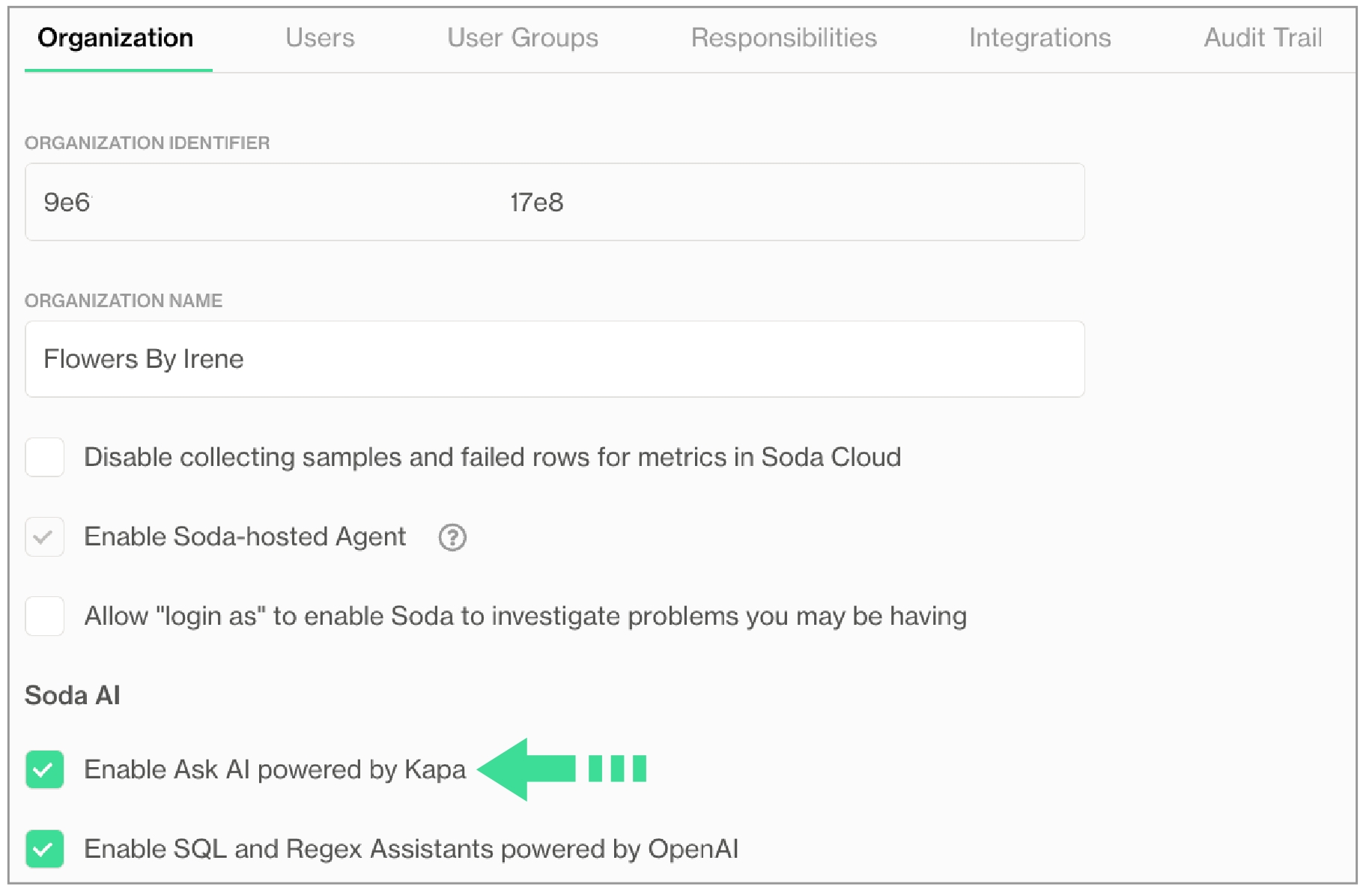

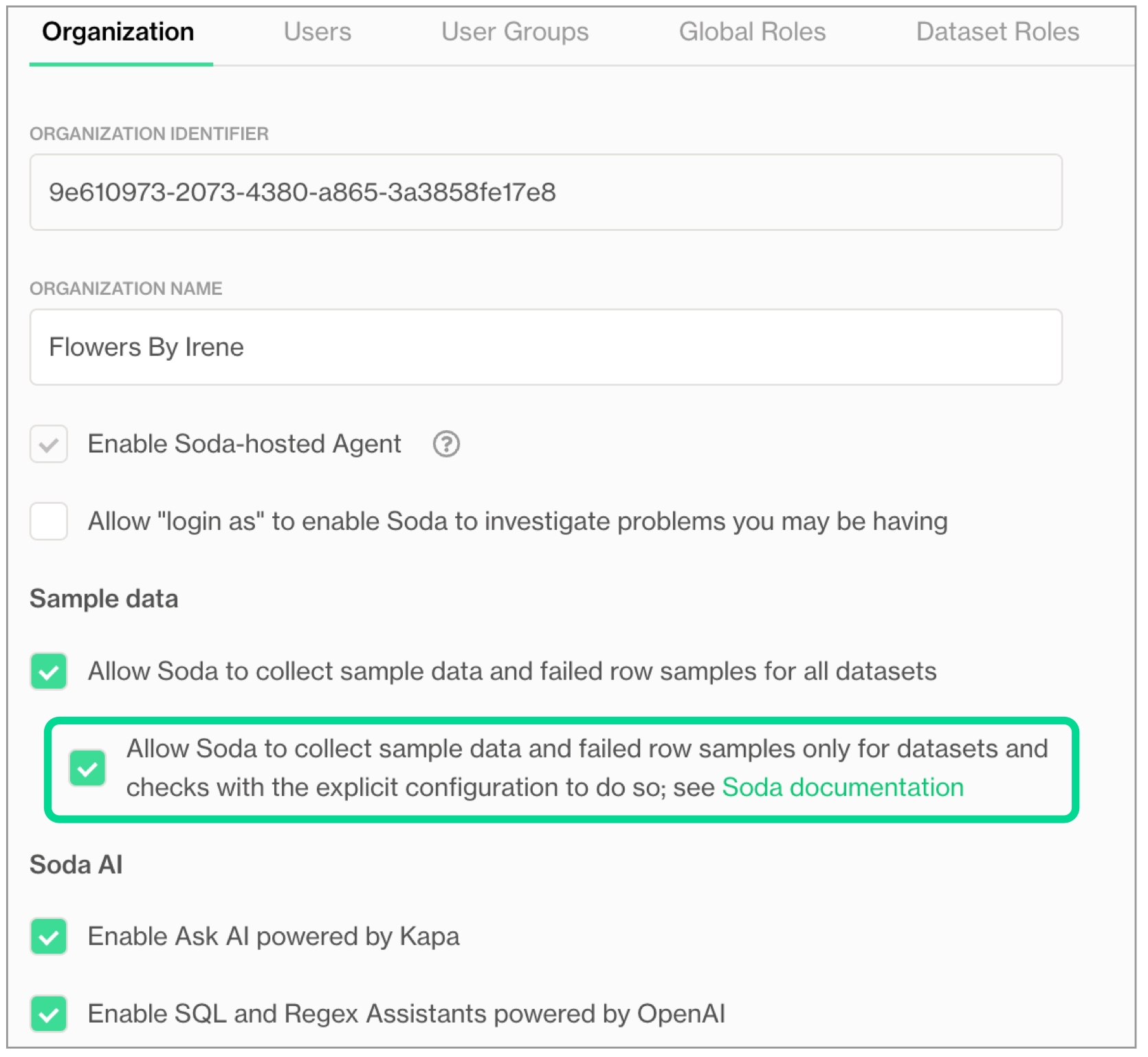

If you do not already have an account, sign up for Soda Cloud for a 45-day free trial. Then, as a user with permission to do so, navigate to your avatar > Organization Settings, then check the box to Enable Ask AI powered by Kapa.

The Ask AI Assistant is powered by kapa.ai and replaces SodaGPT. While Soda collaborates with third parties to develop certain AI features, it’s important to note that Soda does not disclose any primary data with our partners, such as data samples or data profiling details. We only share prompts and some schema information with OpenAI and kapa.ai to enhance the accuracy of the assistants.

Refer to Soda’s General Terms & Conditions in the Use of AI section for further details.

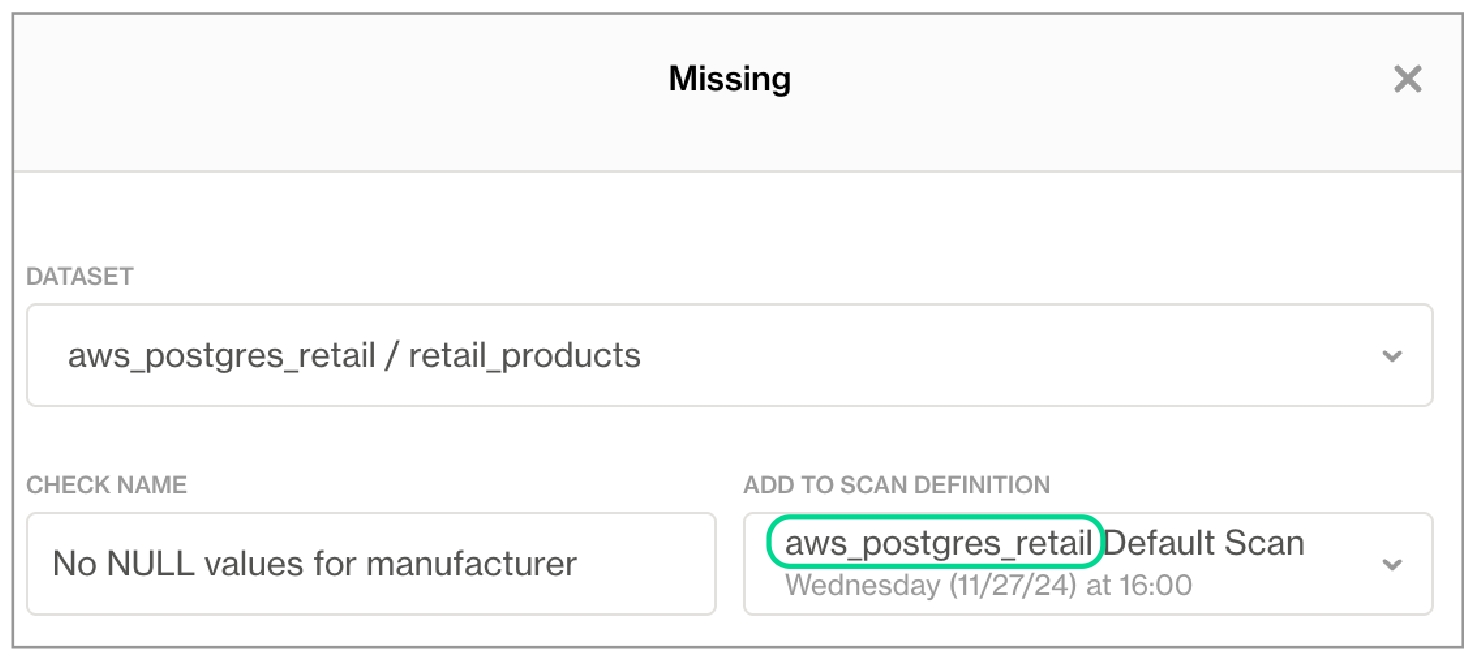

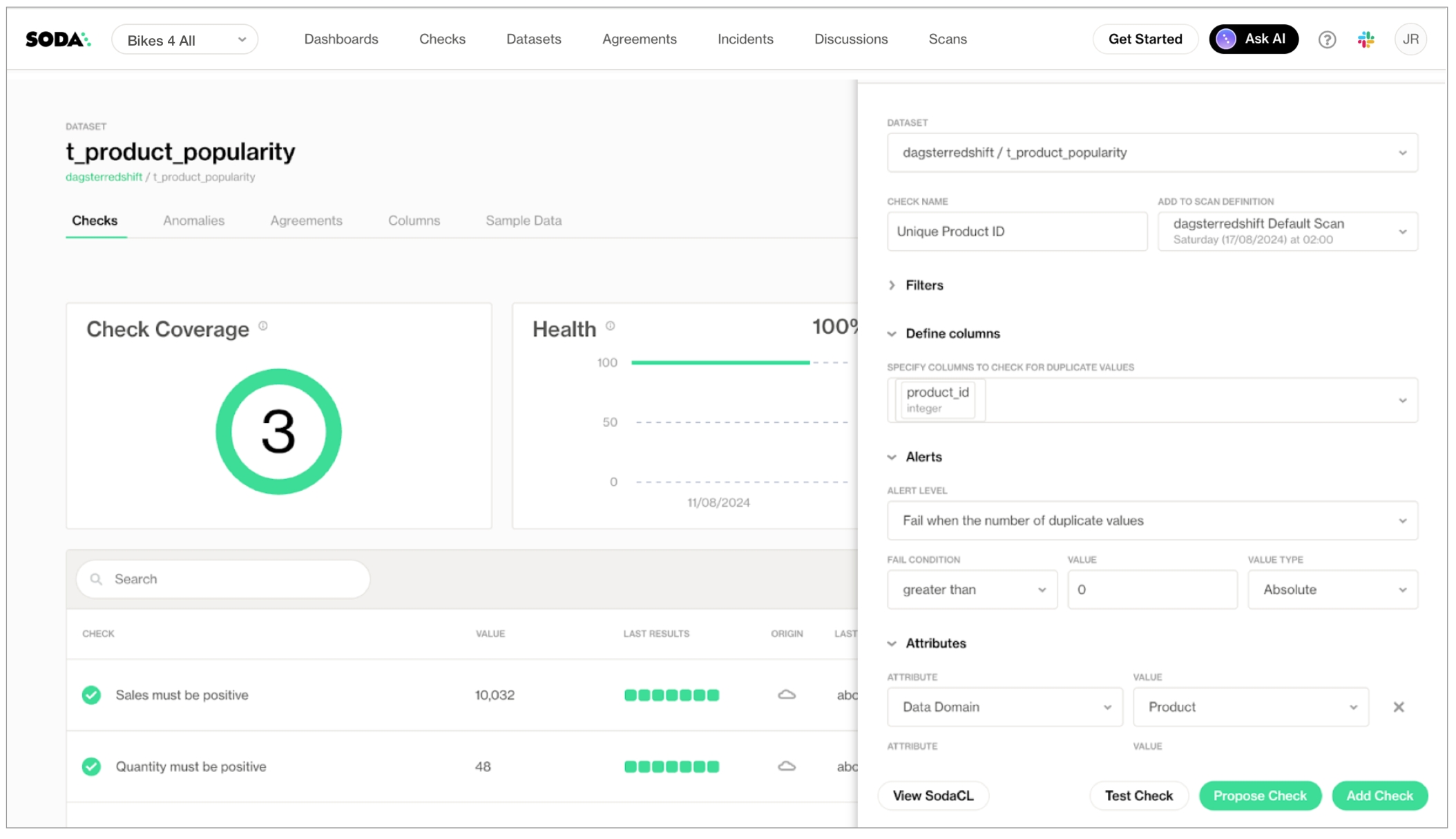

Create no-code checks via the Soda Cloud user interface.

Get started with Soda by following a tutorial.

Consider using check suggestions to profile your data and suggest basic checks for data quality.

In your Soda Cloud account, navigate to your avatar > Profile, then navigate to the API Keys tab. Click the plus icon to generate new API keys.

Copy the syntax for the soda_cloud configuration, including the values API Key ID and API Key Secret, then apply the keys according to how you intend to use them:

for use in a configuration.yml file: follow

for use with the Reporting API if your organization uses Single Sign On (SSO) to access Soda Cloud: follow

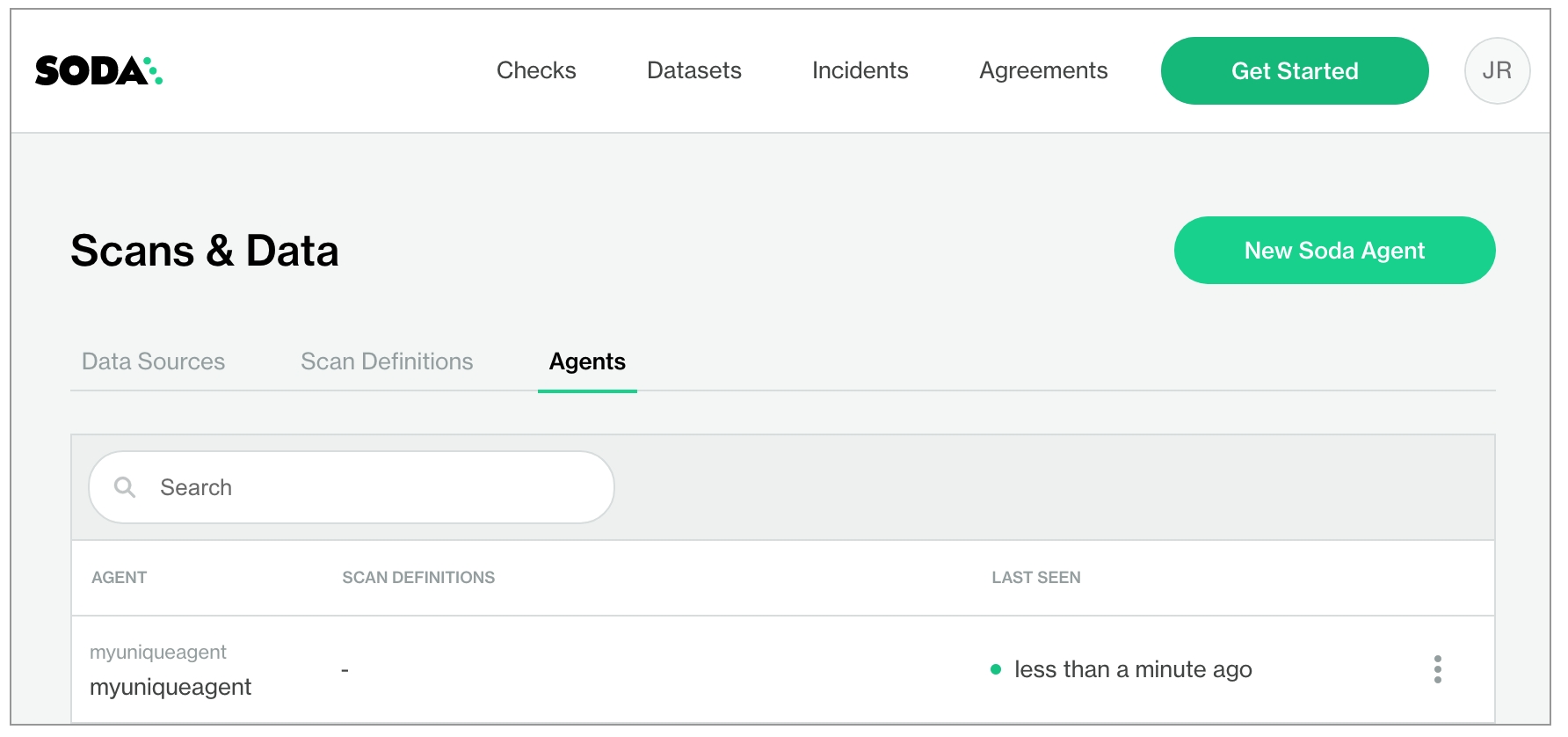

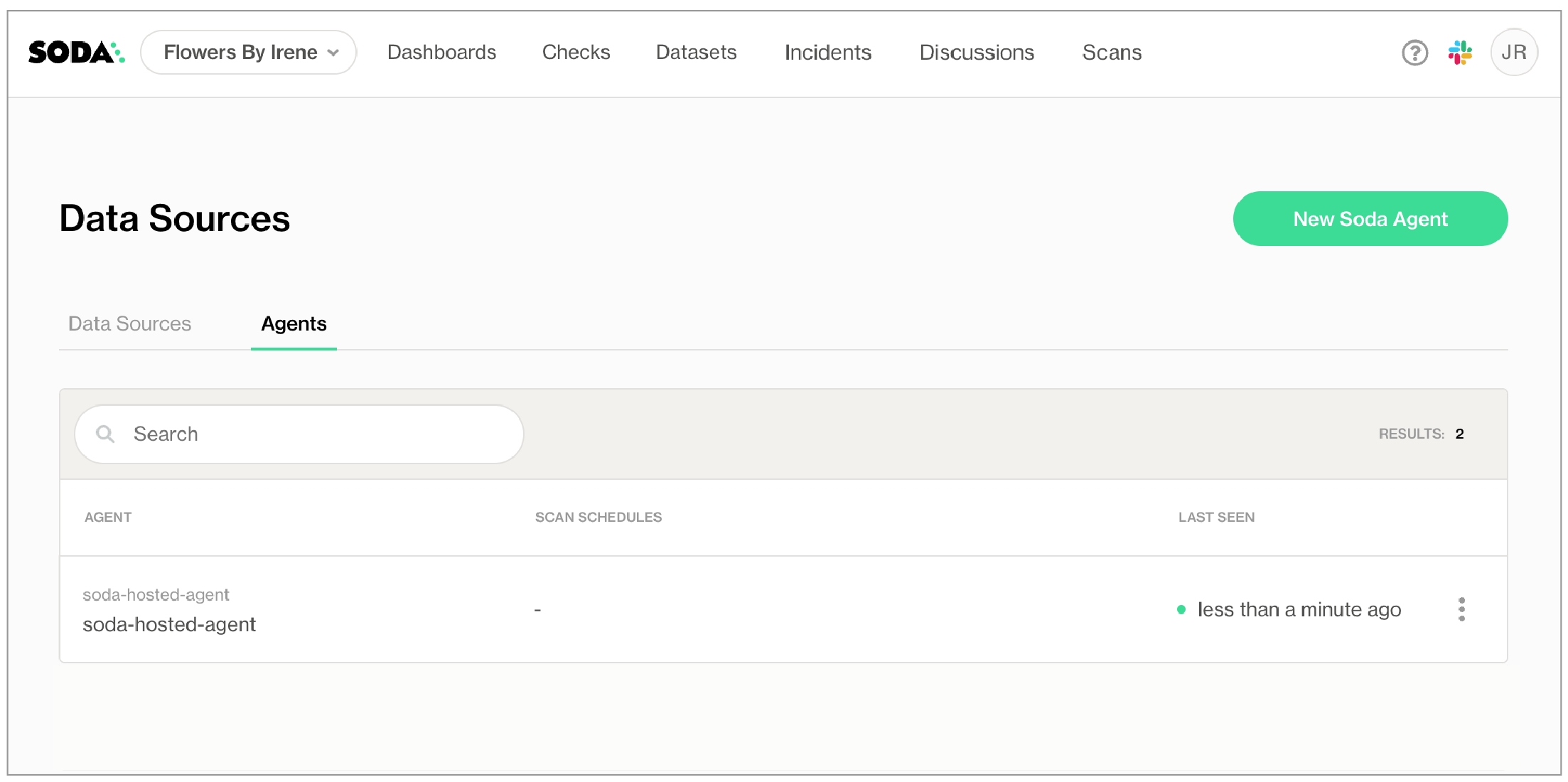

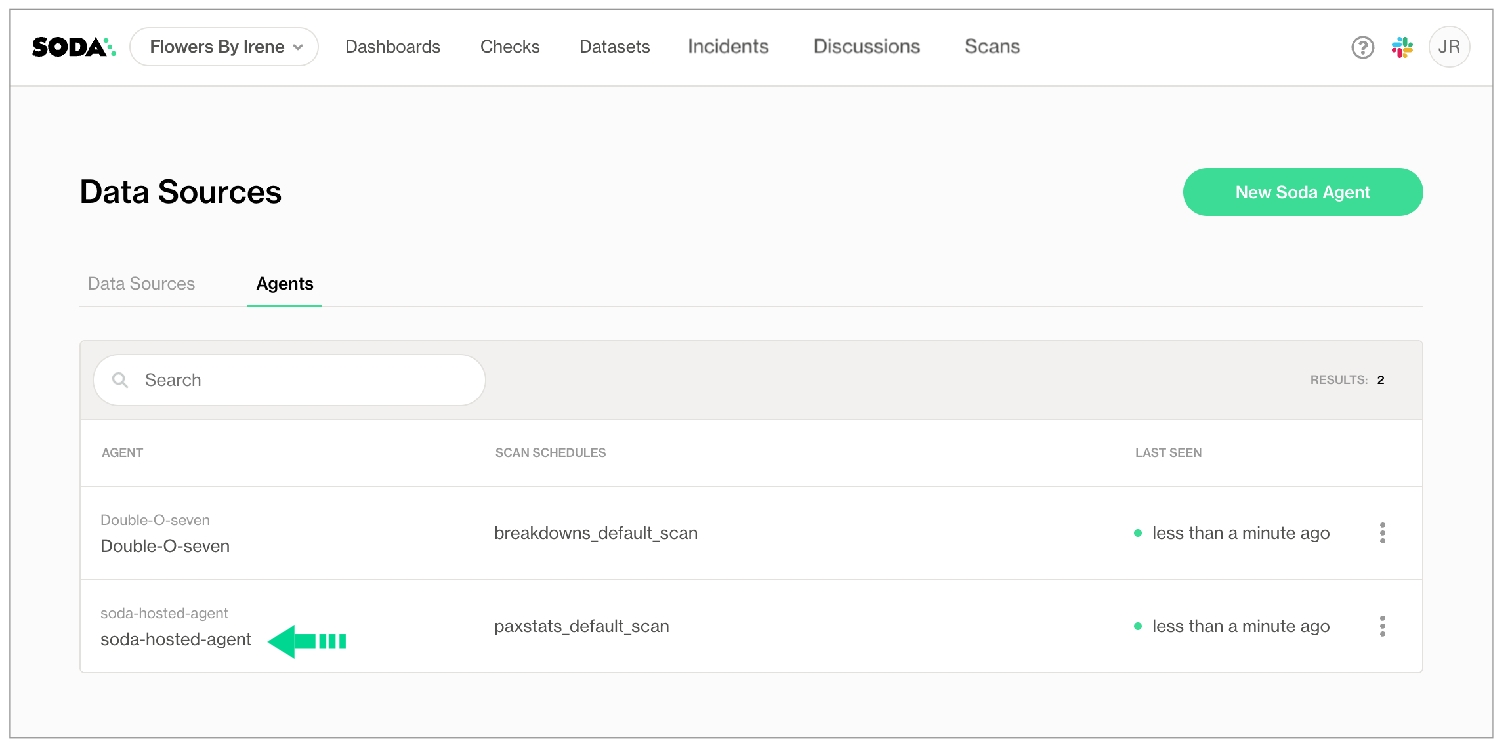

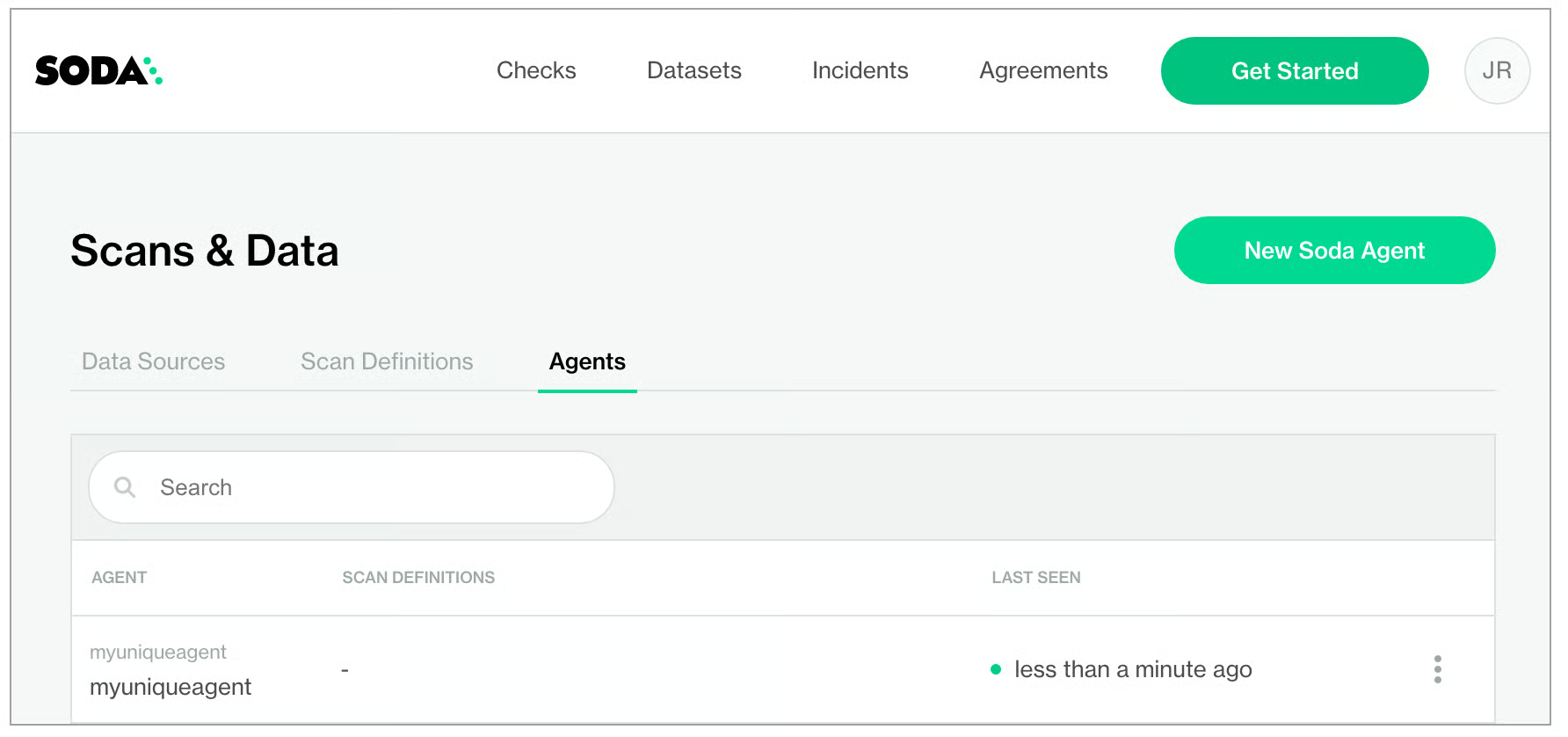

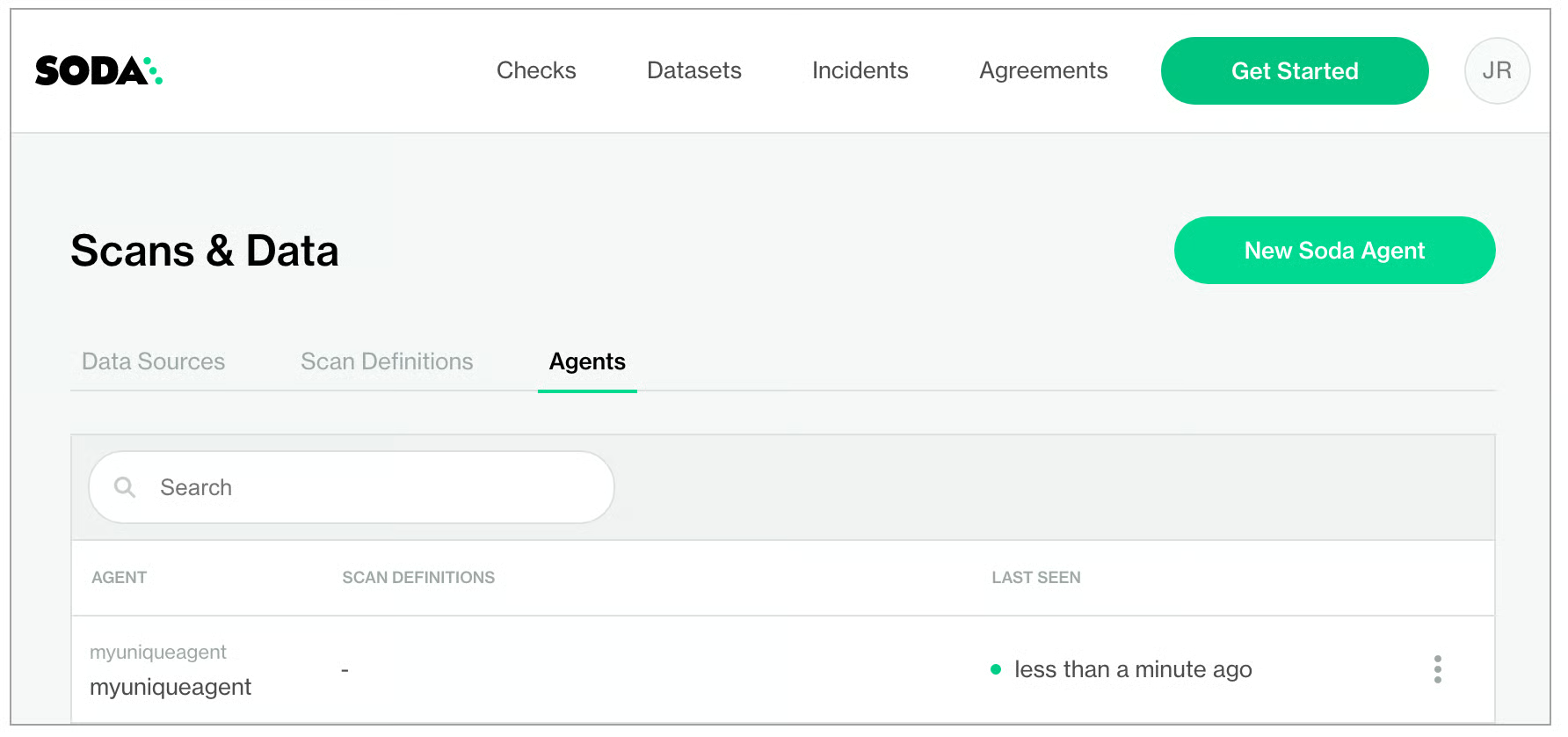

In your Soda Cloud account, navigate to your avatar > Data Sources, then navigate to the Agents tab. Click New Soda Agent.

Copy the values of the API Key ID and API Key Secret to a secure location, then apply the keys according to the instructions in the Deploy a Soda Agent documentation.

Learn more about integrating with third-party tools via a webhook.

Access a list of all integrations that Soda Cloud supports.

required

Identify the type of data source for Soda.

database

required

Identify the location of the . Refer to DuckDB documentation for details on and . This can also be a .

Some users have reported issues using the database key, but have been successful using path instead.

read_only

required

Indicate users’ access by providing a boolean value: true or false

schema_name

optional

Provide an identifier for the schema in which your dataset exists.

To confirm that you have correctly configured the connection details for the data source(s) in your configuration YAML file, use the test-connection command. If you wish, add a -V option to the command to returns results in verbose mode in the CLI.

text

CHARACTER VARYING, CHARACTER, CHAR, TEXT

number

SMALLINT, INTEGER, BIGINT, DECIMAL, NUMERIC, VARIABLE, REAL, DOUBLE PRECISION, SMALLSERIAL, SERIAL, BIGSERIAL

time

TIMESTAMP, DATE, TIME, TIMESTAMP WITH TIME ZONE, TIMESTAMP WITHOUT TIME ZONE, TIME WITH TIME ZONE, TIME WITHOUT TIME ZONE

type

Install package: soda-db2

type

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

required

Provide a port identifier.

username

required

Consider using system variables to retrieve this value securely.

text

VARCHAR

number

INT, INTEGER, DOUBLE, FLOAT

time

DATE, TIME, TIMESTAMP

Follow a 15-min tutorial to set up and run Soda using demo data.

Follow a Use case guide for implementation instructions that target a specific outcome.

Request a demo so we can help you get the most out of your Soda experience.

Choose a flavor of Soda 🚀 Start here!

Set up Soda

Need help? Join the Soda community on Slack.

Soda enables Data Engineers, Data Scientists, and Data Analysts to test data for quality where and when they need to.

Is your data fresh? Is it complete or missing values? Are there unexpected duplicate values? Did something go wrong during transformation? Are all the data values valid? These are the questions that Soda answers.

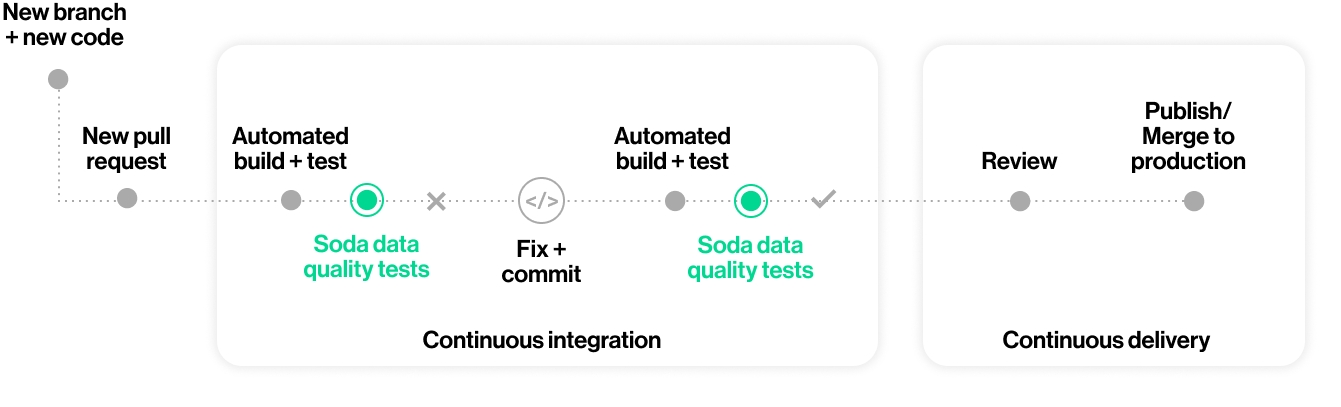

Use Soda with GitHub Actions to test data quality during CI/CD development.

Use Soda to build data quality rules in a collaborative, browser user interface.

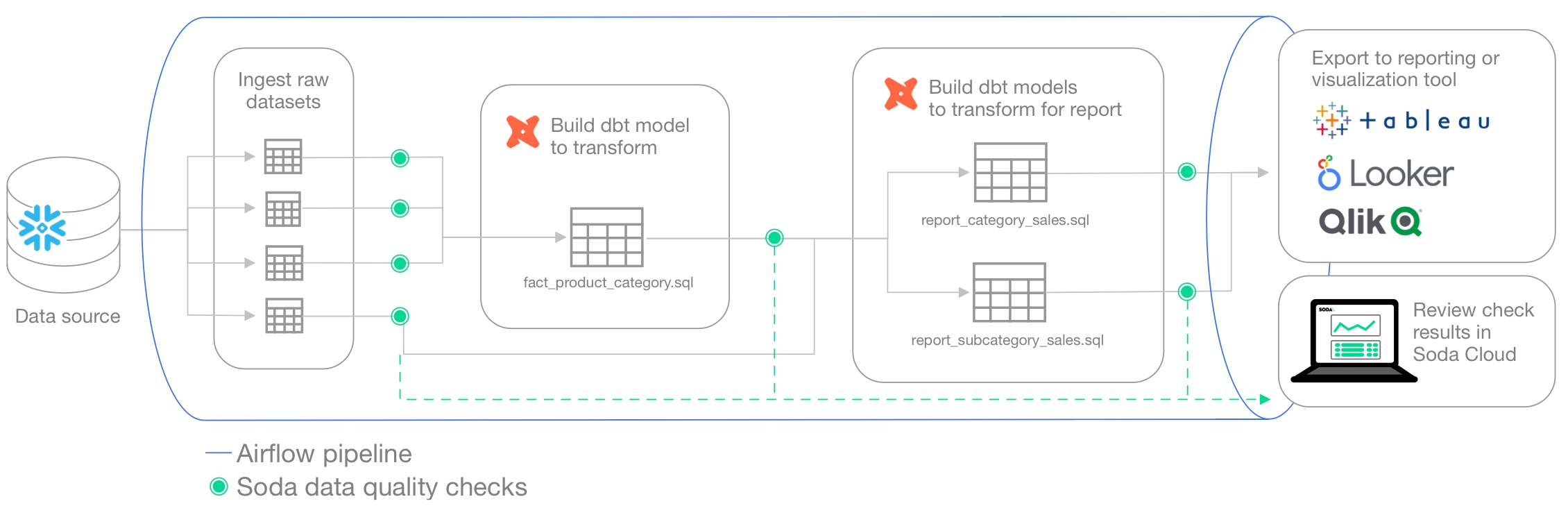

Use it with Airflow to test data quality after ingestion and transformation in your pipeline.

Import your dbt tests into Soda to facilitate issue investigation and track dataset health over time.

Integrate Soda with your data catalog to gauge dataset health from within the catalog.

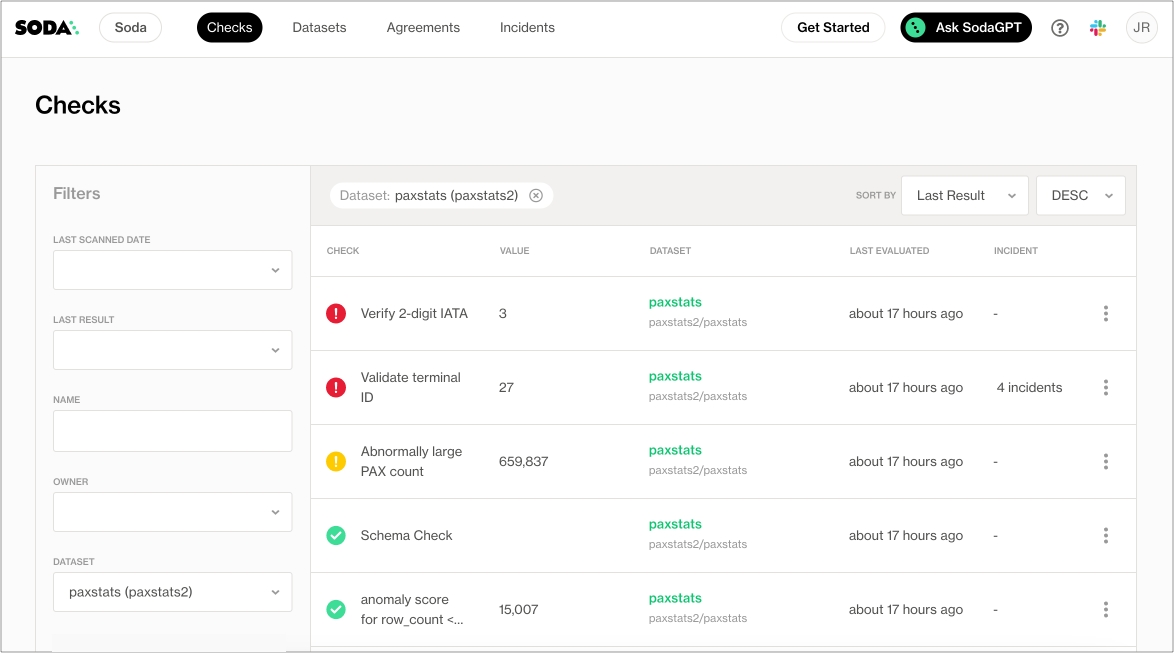

Soda works by taking the data quality checks that you prepare and using them to run a scan of datasets in a data source. A scan is a command which instructs Soda to prepare optimized SQL queries that execute data quality checks on your data source to find invalid, missing, or unexpected data. When checks fail, they surface bad-quality data and present check results that help you investigate and address quality issues.

To test your data quality, you choose a flavor of Soda (choose a deployment model) which enables you to configure connections with your data sources and define data quality checks, then run scans that execute your data quality checks.

Connect to your data source. Connect Soda to a data source such as Snowflake, Amazon Athena, or BigQuery by providing access details for your data source such as host, port, and data source login credentials.

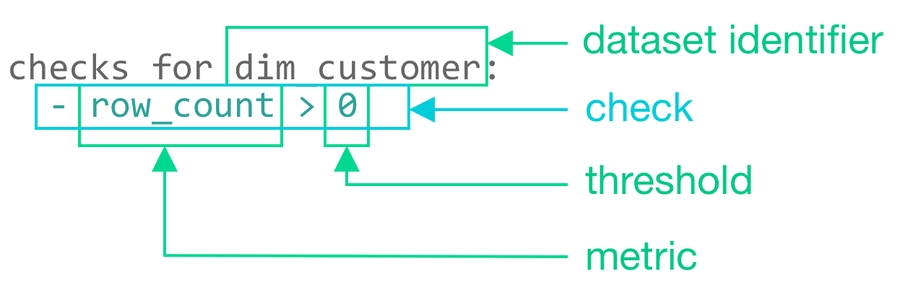

Define checks to surface bad-quality data. Define data quality checks using Soda Checks Language (SodaCL), a domain-specific language for data quality testing. A Soda Check is a test that Soda performs when it scans a dataset in your data source.

Run a scan to execute your data quality checks. During a scan, Soda does not ingest your data, it only scans it for quality metrics, then uses the metadata to prepare scan results1. After a scan, each check results in one of three default states:

pass: the values in the dataset match or fall within the thresholds you specified

fail: the values in the dataset do not match or fall within the thresholds you specified

error: the syntax of the check is invalid, or there are runtime or credential errors

A fourth state, warn, is something you can explicitly configure for individual checks.

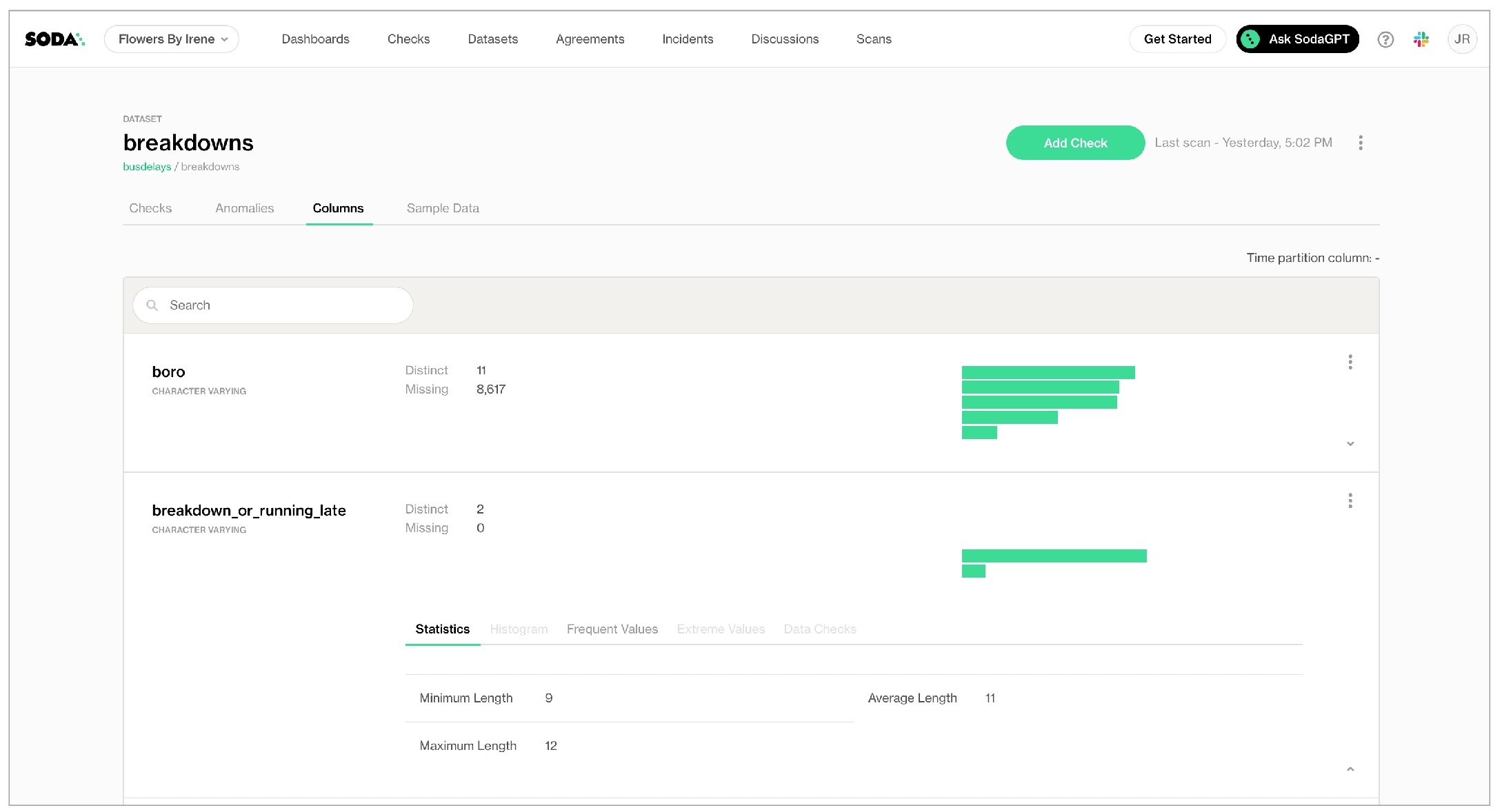

Review scan results and investigate issues. You can review the scan output in the command-line and in your Soda Cloud account. Access visualized scan results, set alert notifications, track trends in data quality over time, and integrate with the messaging, ticketing, and data cataloging tools you already use, like Slack, Jira, and Atlan.

1 An exception to this rule is when Soda collects failed row samples that it presents in scan output to aid with issue investigation, a feature you can disable.

Access a Soda product overview.

Learn more about How Soda works.

Learn more about SodaCL.

Access the Glossary for a full list of Soda terminology.

type

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

required

Provide a port identifier.

username

required

Consider using system variables to retrieve this value securely.

password

required

Consider using system variables to retrieve this value securely.

database

required

Identify the name of your database.

schema

required

Provide an identifier for the schema in which your table exists.

text

CHARACTER VARYING, CHARACTER, CHAR, TEXT

number

SMALLINT, INTEGER, BIGINT, DECIMAL, NUMERIC, VARIABLE, REAL, DOUBLE PRECISION, SMALLSERIAL, SERIAL, BIGSERIAL

time

TIMESTAMP, DATE, TIME, TIMESTAMP WITH TIME ZONE, TIMESTAMP WITHOUT TIME ZONE, TIME WITH TIME ZONE, TIME WITHOUT TIME ZONE

type

required

Identify the type of data source for Soda.

database

required

Provide an identifier for your database.

Some users have reported issues using the database key, but have been successful using path instead.

read_only

required

Indicate users' access by providing a boolean value: true or false

text

CHARACTER VARYING, CHARACTER, CHAR, TEXT

number

SMALLINT, INTEGER, BIGINT, DECIMAL, NUMERIC, VARIABLE, REAL, DOUBLE PRECISION, SMALLSERIAL, SERIAL, BIGSERIAL

time

TIMESTAMP, DATE, TIME, TIMESTAMP WITH TIME ZONE, TIMESTAMP WITHOUT TIME ZONE, TIME WITH TIME ZONE, TIME WITHOUT TIME ZONE

Follow this tutorial to set up and run a simple Soda scan for data quality using example data.

The Soda environment has been updated since this tutorial.

Refer to for updated tutorials.

Is Soda the data quality testing solution you've been looking for? Take a sip and see! 🫧

Use the example data in this quick tutorial to set up and run a simple Soda scan for data quality.

Set up Soda | 3 minutes Build an example data source | 2 minutes | 5 minutes | 5 minutes

💡 For standard set up instructions, access the .

✨ Want a total UI experience? Use the out-of-the-box to skip the CLI.

This tutorial references a MacOS environment.

Check the following prerequisites:

You have installed Python 3.8, 3.9, or 3.10.

You have installed Pip 21.0 or greater.

(Optional) You have installed and have access to , to set up an example data source.

Visit to sign up for a Soda Cloud account which is free for a 45-day trial.

In your command-line interface, create a Soda project directory in your local environment, then navigate to the directory.

Best practice dictates that you install the Soda using a virtual environment. In your command-line interface, create a virtual environment in the .venv directory, then activate the environment.

Execute the following command to install the Soda package for PostgreSQL in your virtual environment. The example data is in a PostgreSQL data source, but there are 15+ data sources with which you can connect your own data beyond this tutorial.

Validate the installation.

To exit the virtual environment when you are done with this tutorial, use the command deactivate.

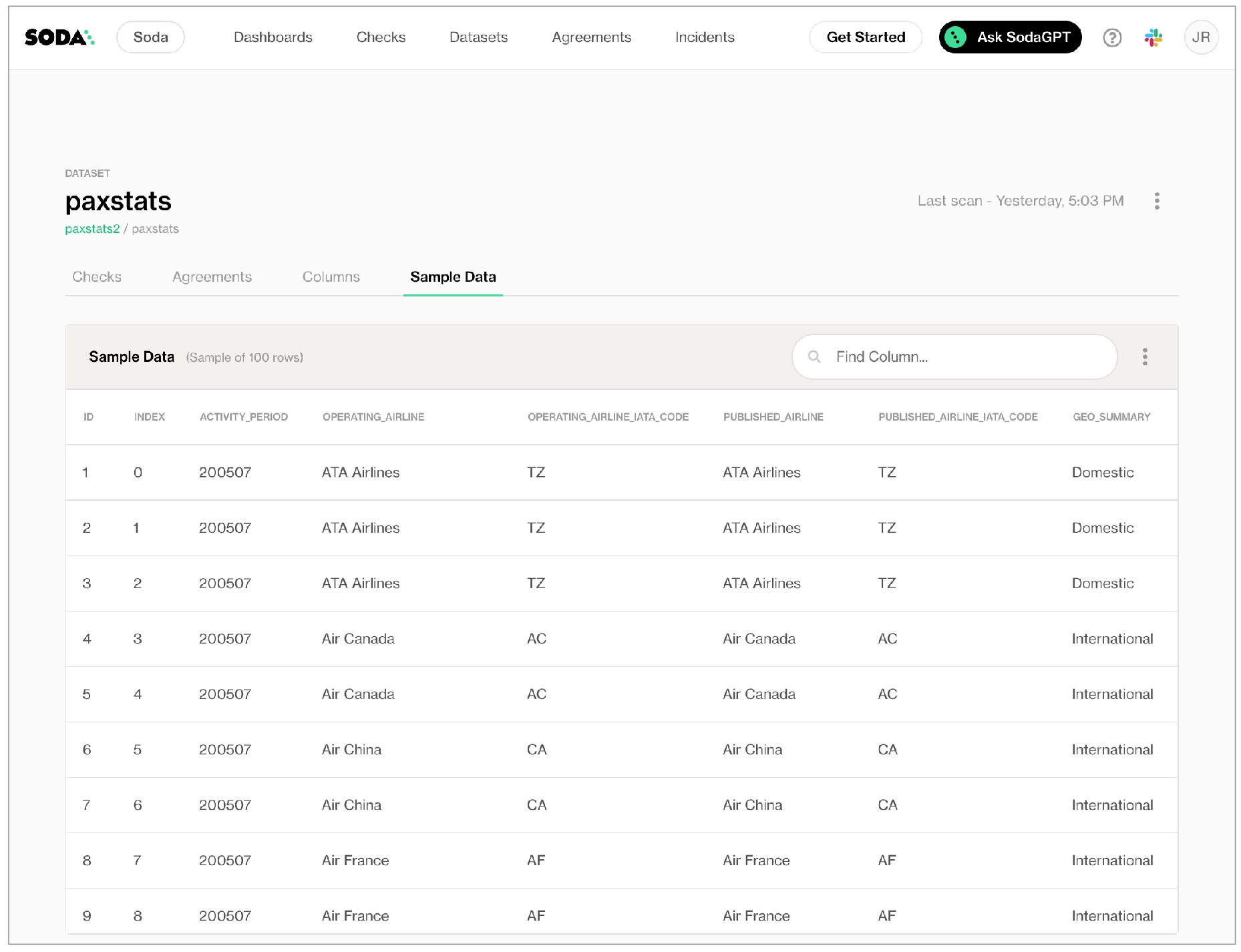

To enable you to take a first sip of Soda, you can use Docker to quickly build an example PostgreSQL data source against which you can run scans for data quality. The example data source contains data for AdventureWorks, an imaginary online e-commerce organization.

(Optional) Access the repository in GitHub.

(Optional) Access a quick view of the .

Open a new tab in Terminal.

If it is not already running, start Docker Desktop.

Run the following command in Terminal to set up the prepared example data source.

When the output reads data system is ready to accept connections, your data source is set up and you are ready to proceed.

Alternatively, you can use your own data for this tutorial. To do so:

Skip the steps above involving Docker.

Install the Soda Library package that corresponds with your data source, such as soda-bigquery, soda-athena, etc. See full list.

Collect your data source's login credentials that you must provide to Soda so that it can scan your data for quality.

To connect to a data source such as Snowflake, PostgreSQL, Amazon Athena, or GCP BigQuery, you use a configuration.yml file which stores access details for your data source.

This tutorial also instructs you to connect to a Soda Cloud account using API keys that you create and add to the same configuration.yml file. Available for free as a 45-day trial, your Soda Cloud account validates your free trial or license, gives you access to visualized scan results, tracks trends in data quality over time, lets you set alert notifications, and much more.

In a code editor such as Sublime or Visual Studio Code, create a new file called configuration.yml and save it in your soda_sip directory.

Copy and paste the following connection details into the file. The data_source configuration details connect Soda to the example AdventureWorks data source you set up using Docker. If you are using your own data, provide the data_source values that correspond with your own data source.

Output:

Create another file in the soda_sip directory called checks.yml. A check is a test that Soda executes when it scans a dataset in your data source. The checks.yml file stores the checks you write using the Soda Checks Language (SodaCL).

Open the checks.yml file in your code editor, then copy and paste the following checks into the file.

Save the changes to the checks.yml file, then, in Terminal, use the following command to run a scan. A scan is a CLI command which instructs Soda to prepare SQL queries that execute data quality checks on your data source. As input, the command requires:

-d the name of the data source to scan

-c the filepath and name of the configuration.yml file

the filepath and name of the checks.yml file

Command:

Output:

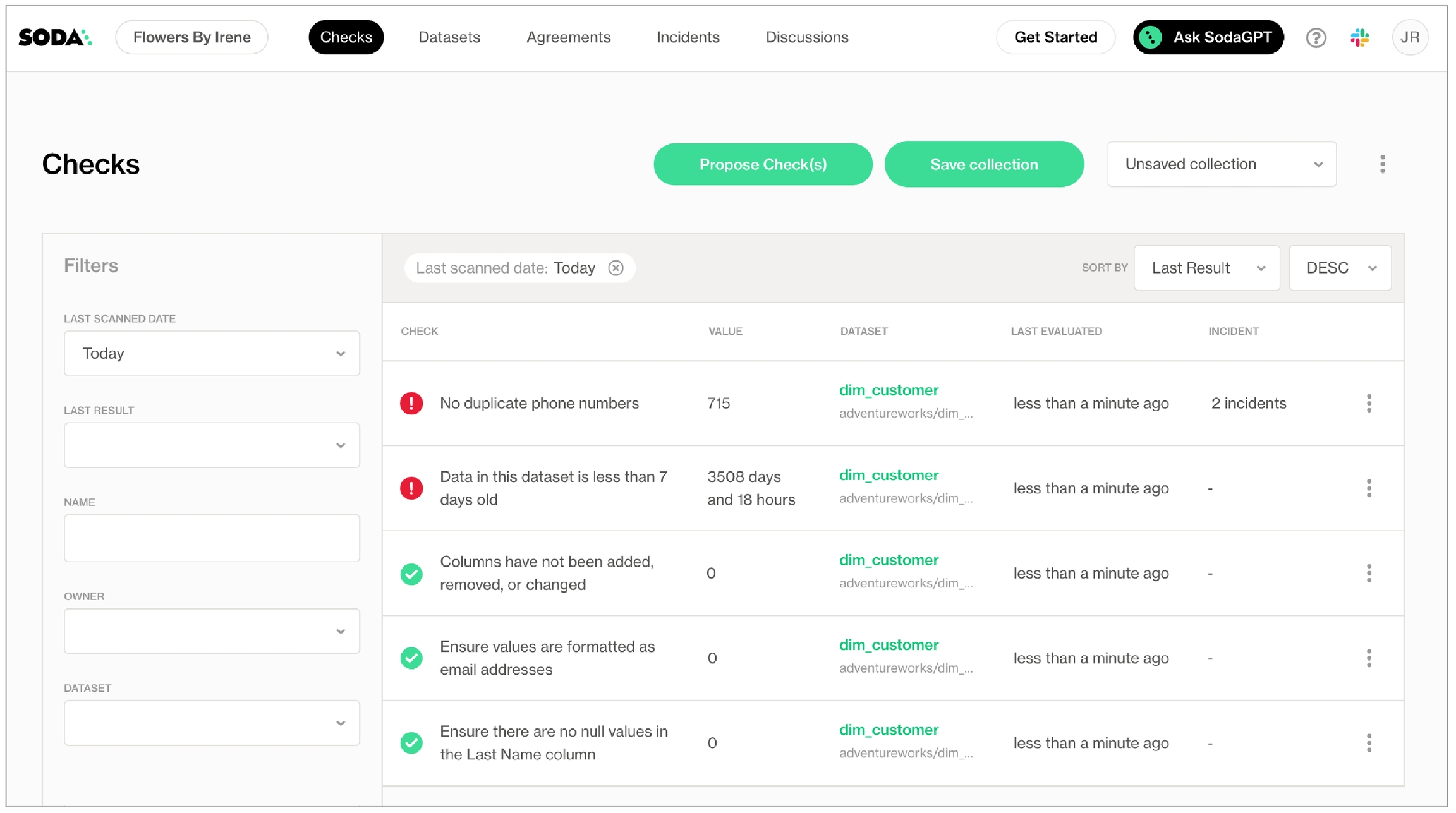

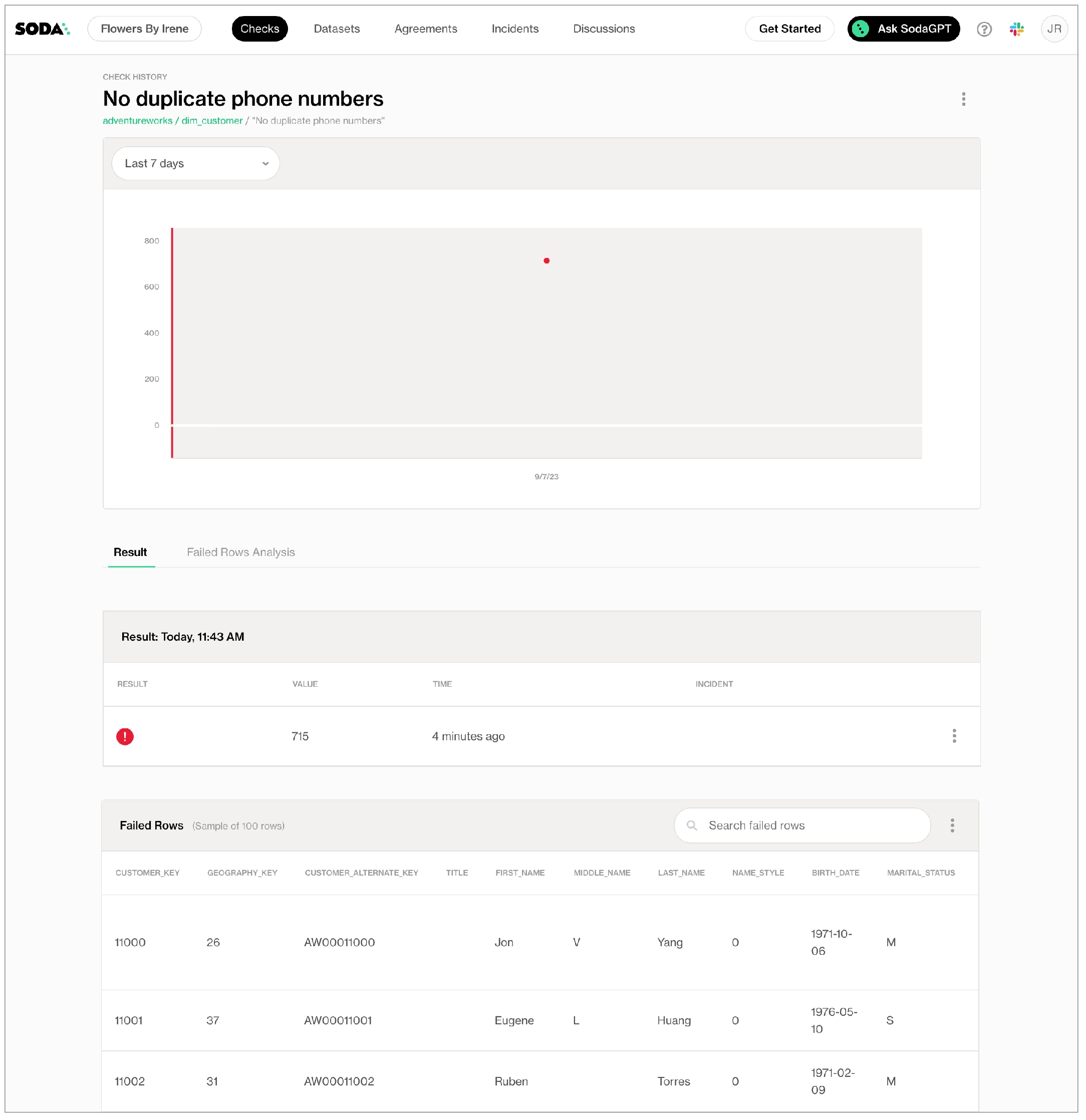

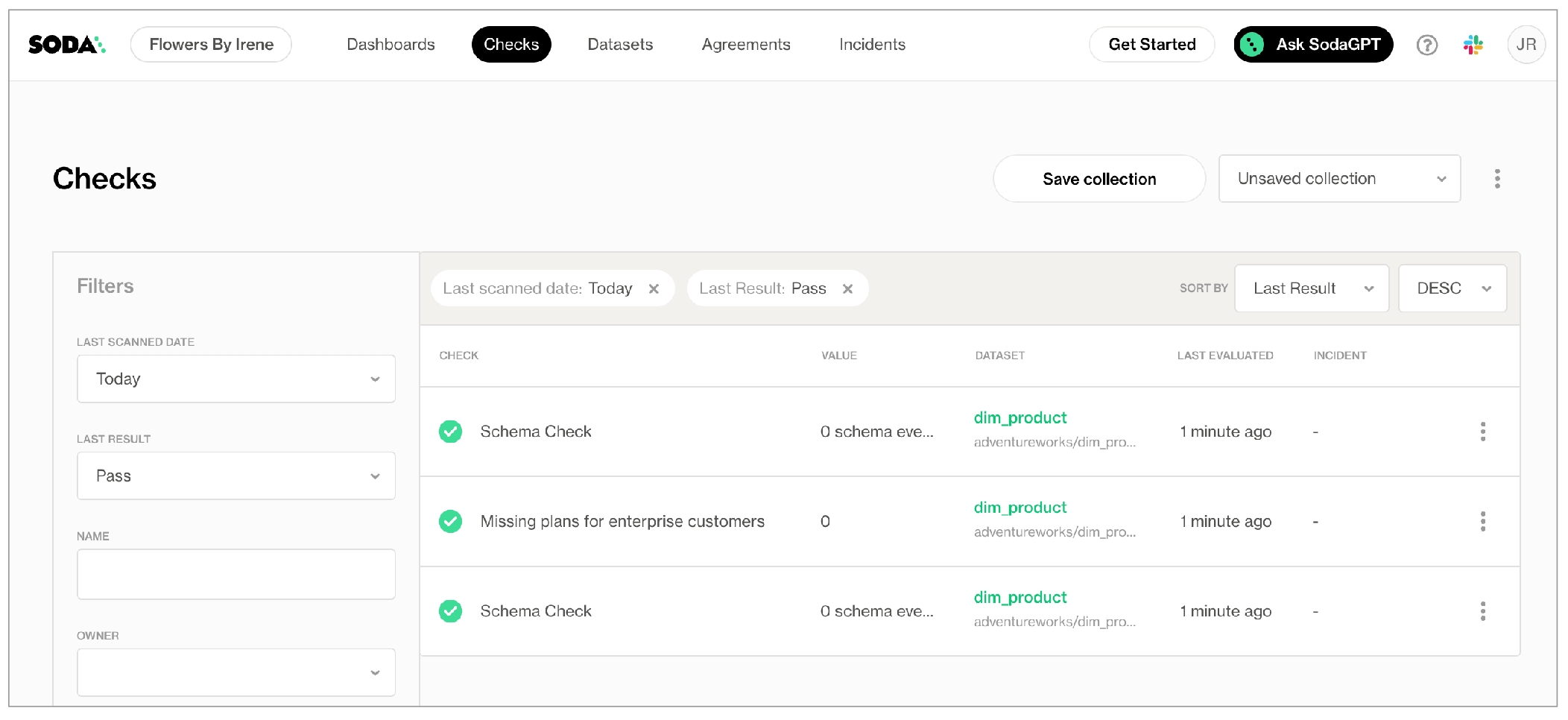

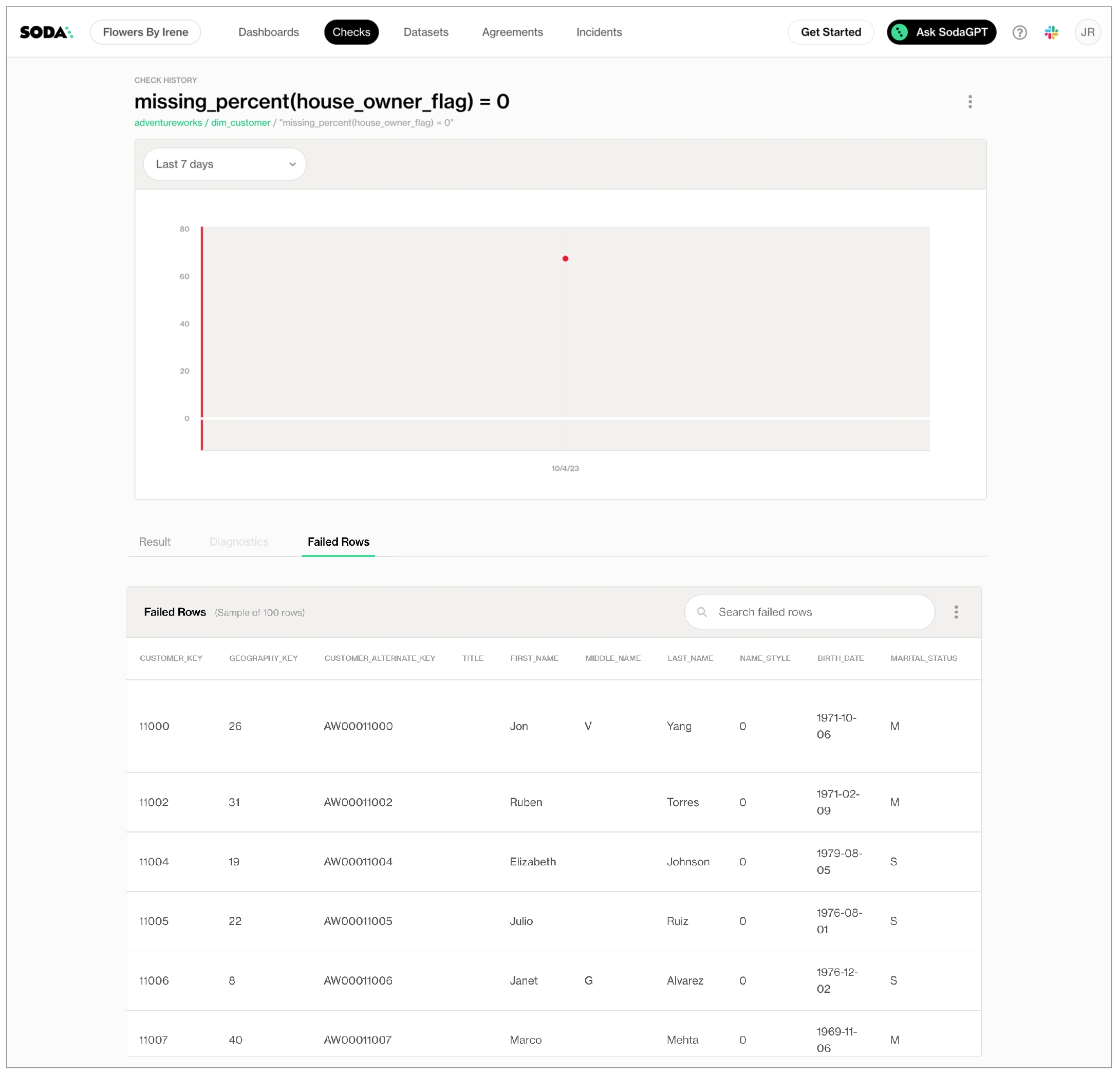

As you can see in the Scan Summary in the command-line output, some checks failed and Soda sent the results to your Cloud account. To access visualized check results and further examine the failed checks, return to your Soda account in your browser and click Checks.

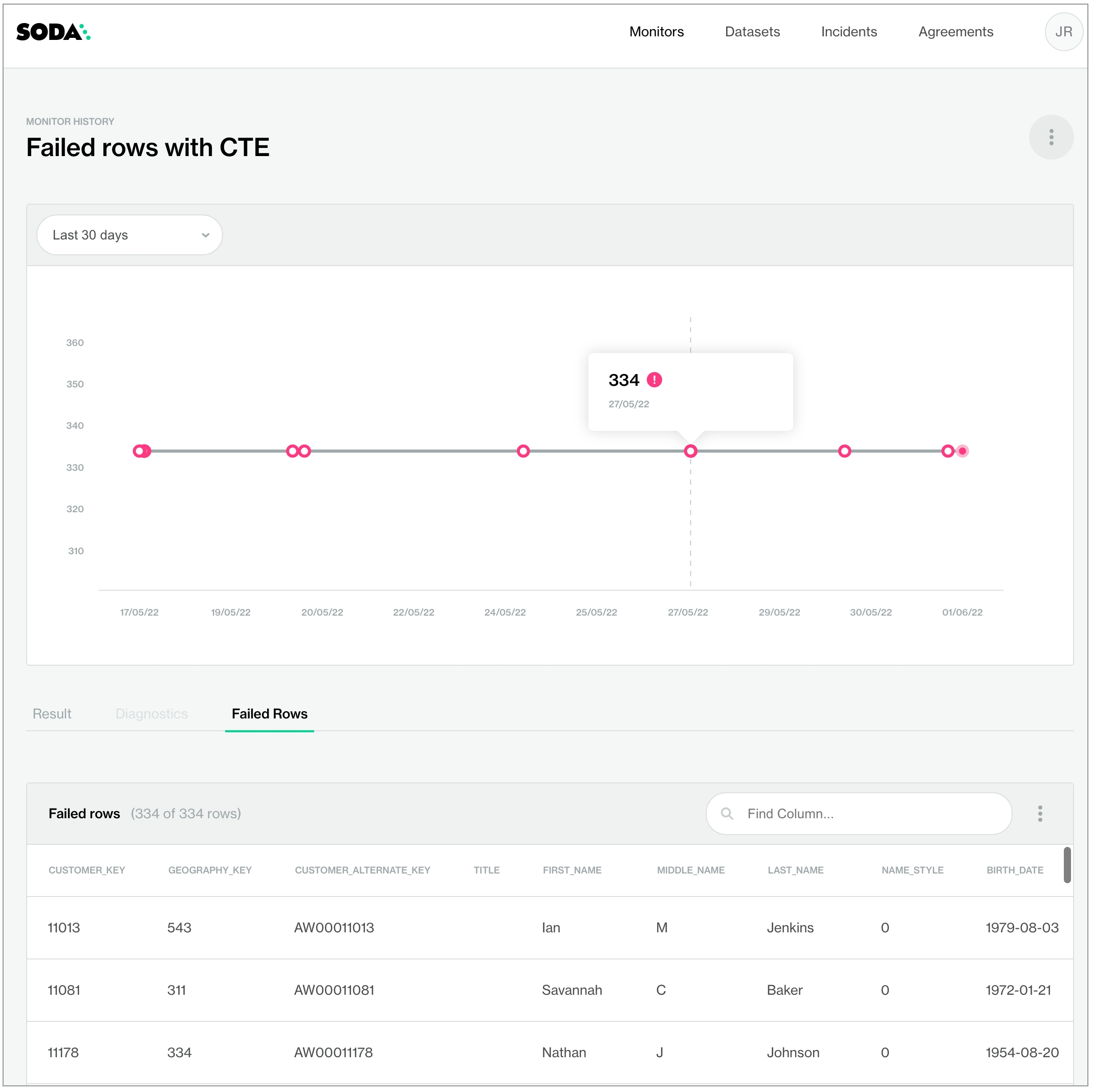

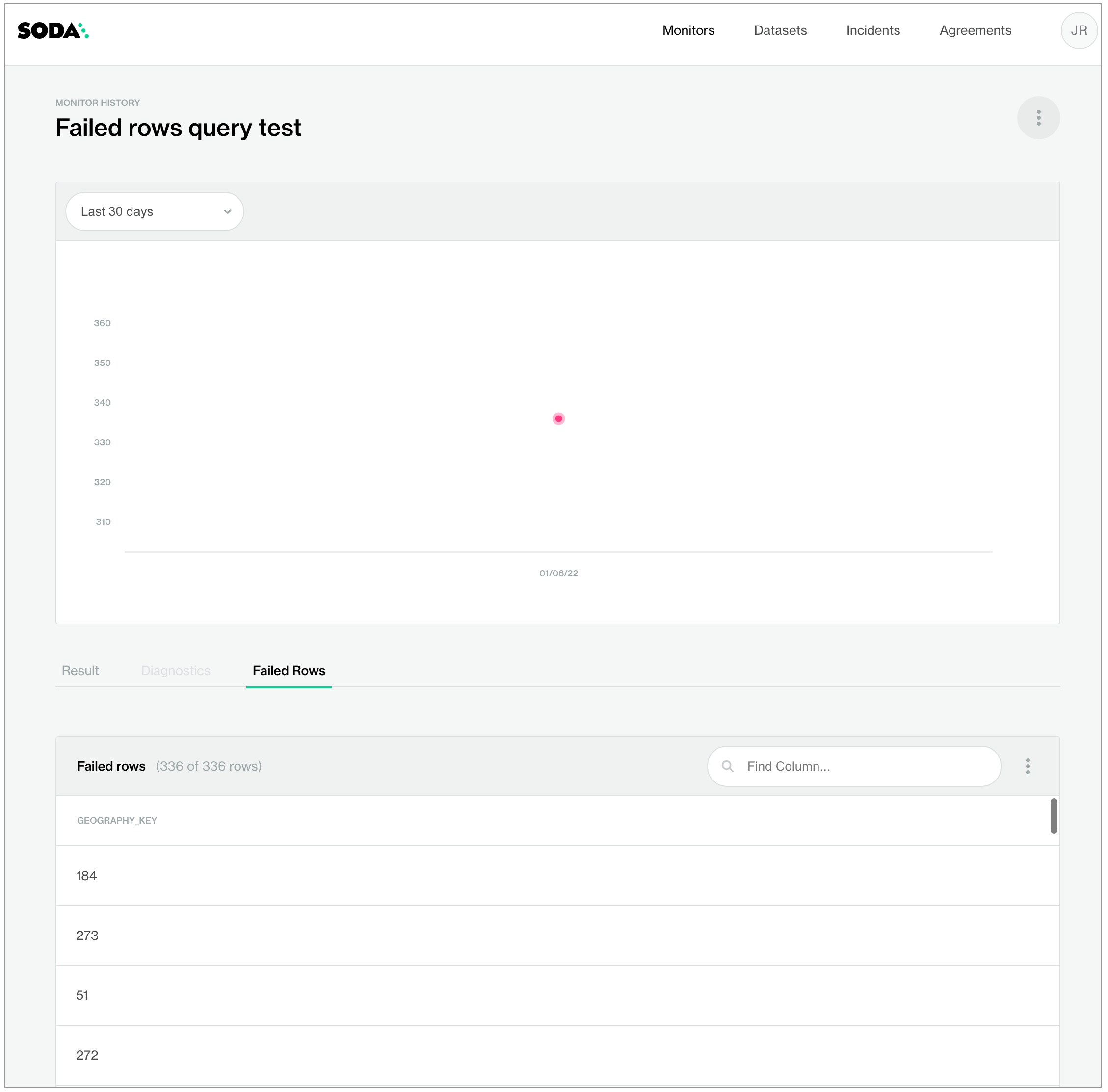

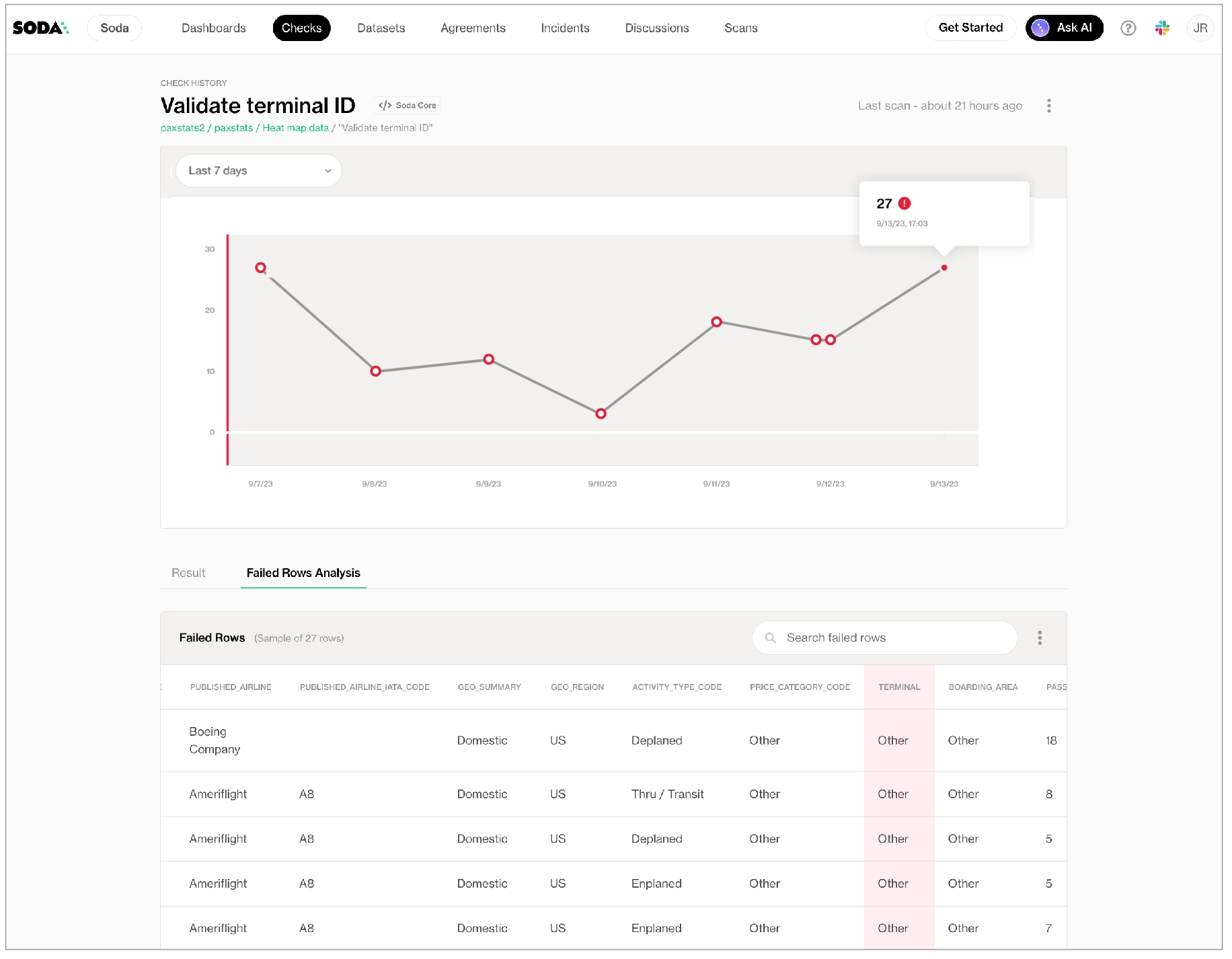

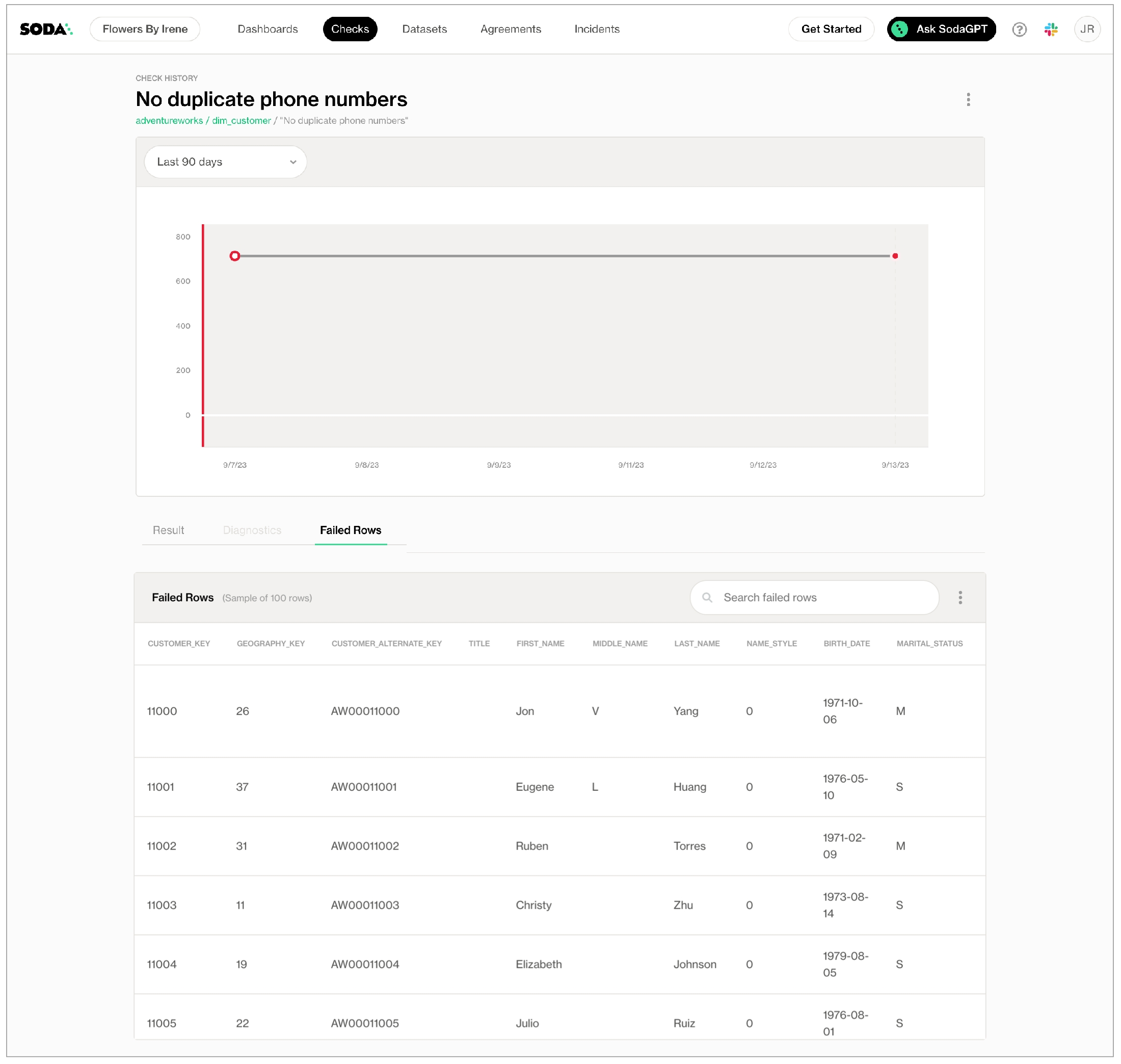

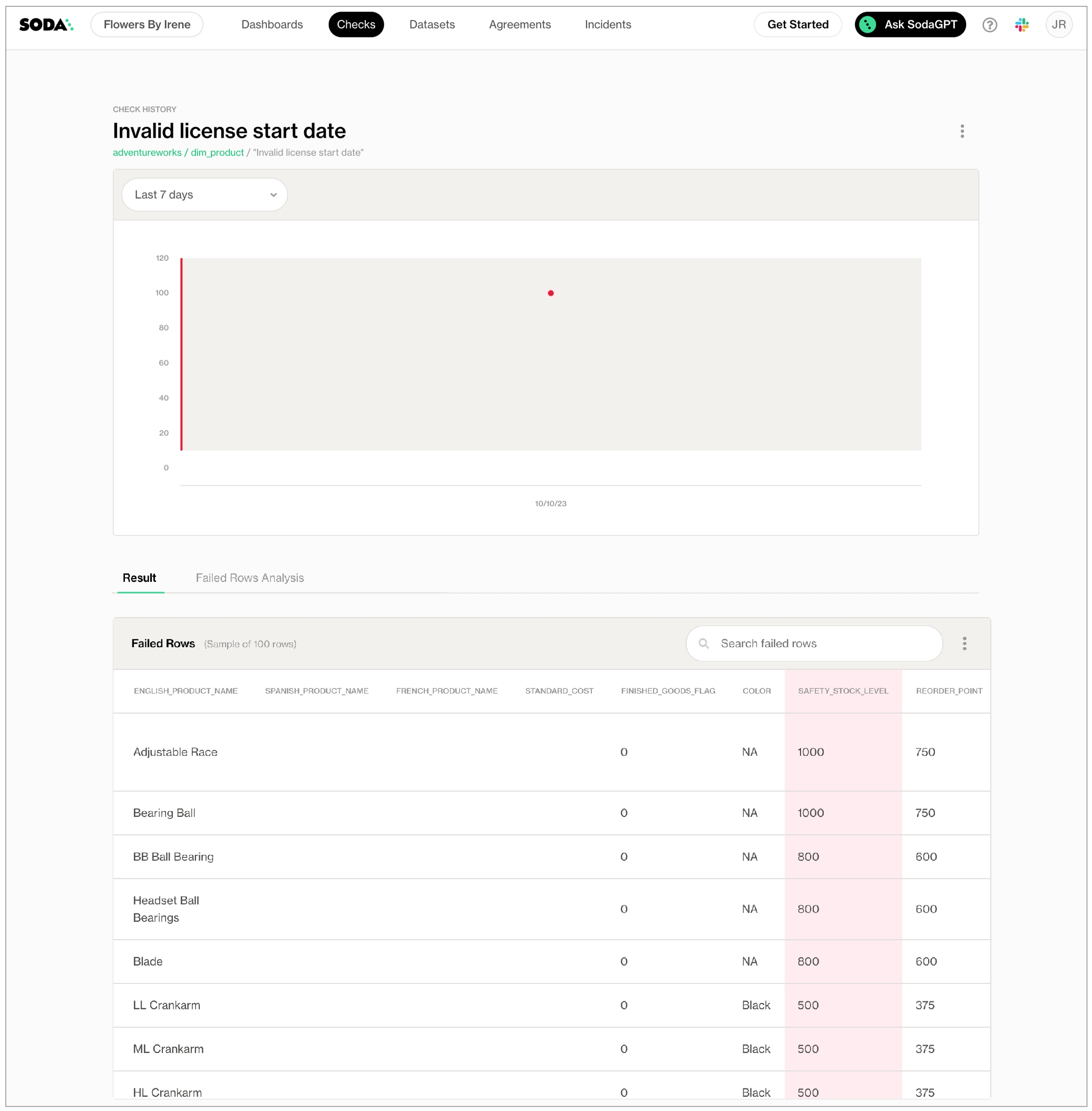

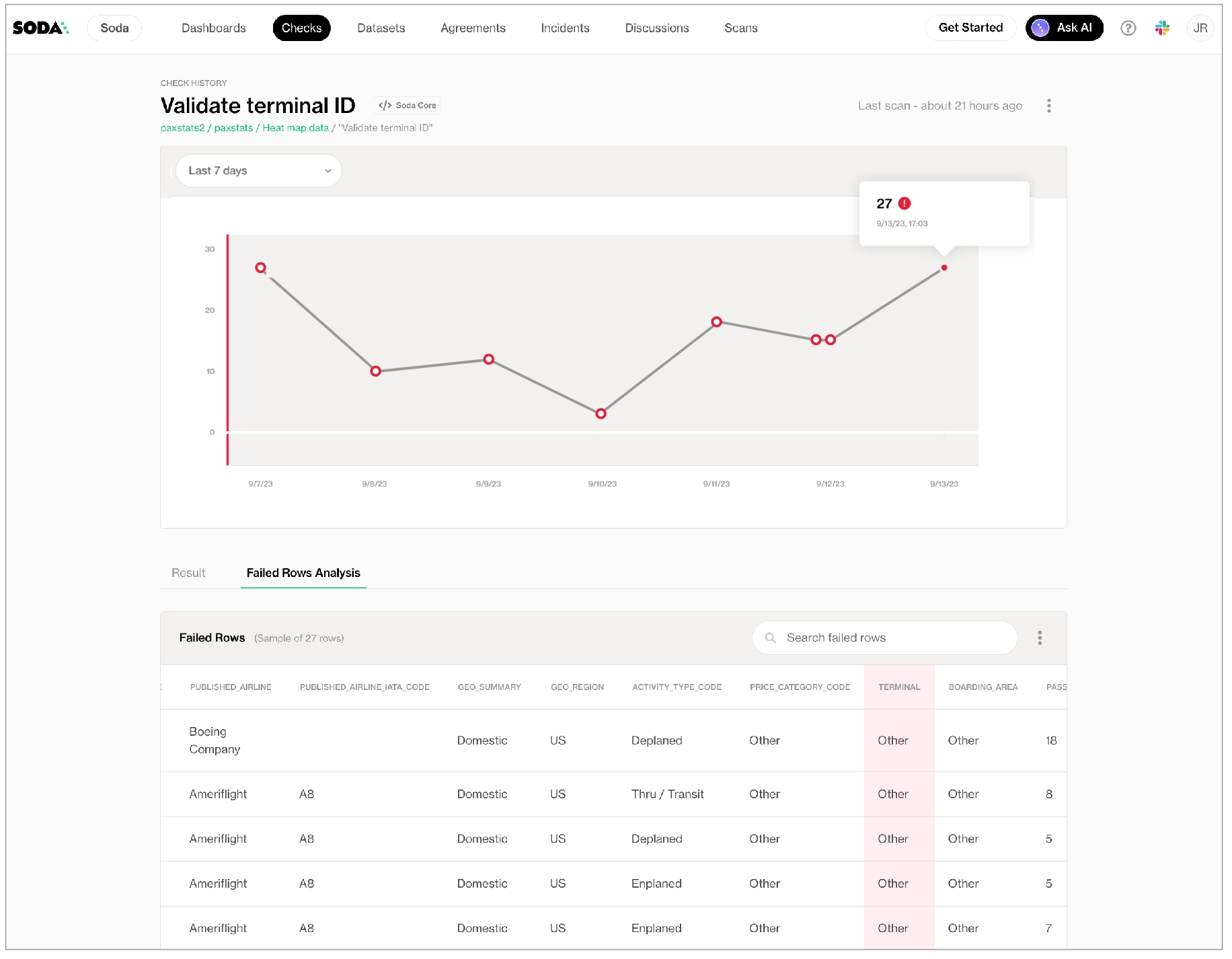

In the table of checks that Soda displays, you can click the line item for one of the checks that failed to examine the visualized results in a line graph, and to access the failed row samples that Soda automatically collected when it ran the scan and executed the checks. Use the failed row samples, as in the example below, to determine what caused a data quality check to fail.

✨Well done!✨ You've taken the first step towards a future in which you and your colleagues can trust the quality and reliability of your data. Huzzah!

If you are done with the example data, you can delete it from your account to start fresh with your own data.

Navigate to your avatar > Data Sources.

In the Data Sources tab, click the stacked dots to the right of the adventureworks data source, then select Delete Data Source.

Follow the steps to confirm deletion.

Get inspired on how to set up Soda to meet your .

Use to quickly get off the ground with basic checks for data quality.

Learn writing SodaCL checks.

Read more about in general.

What can Soda do for you? .

Join the .

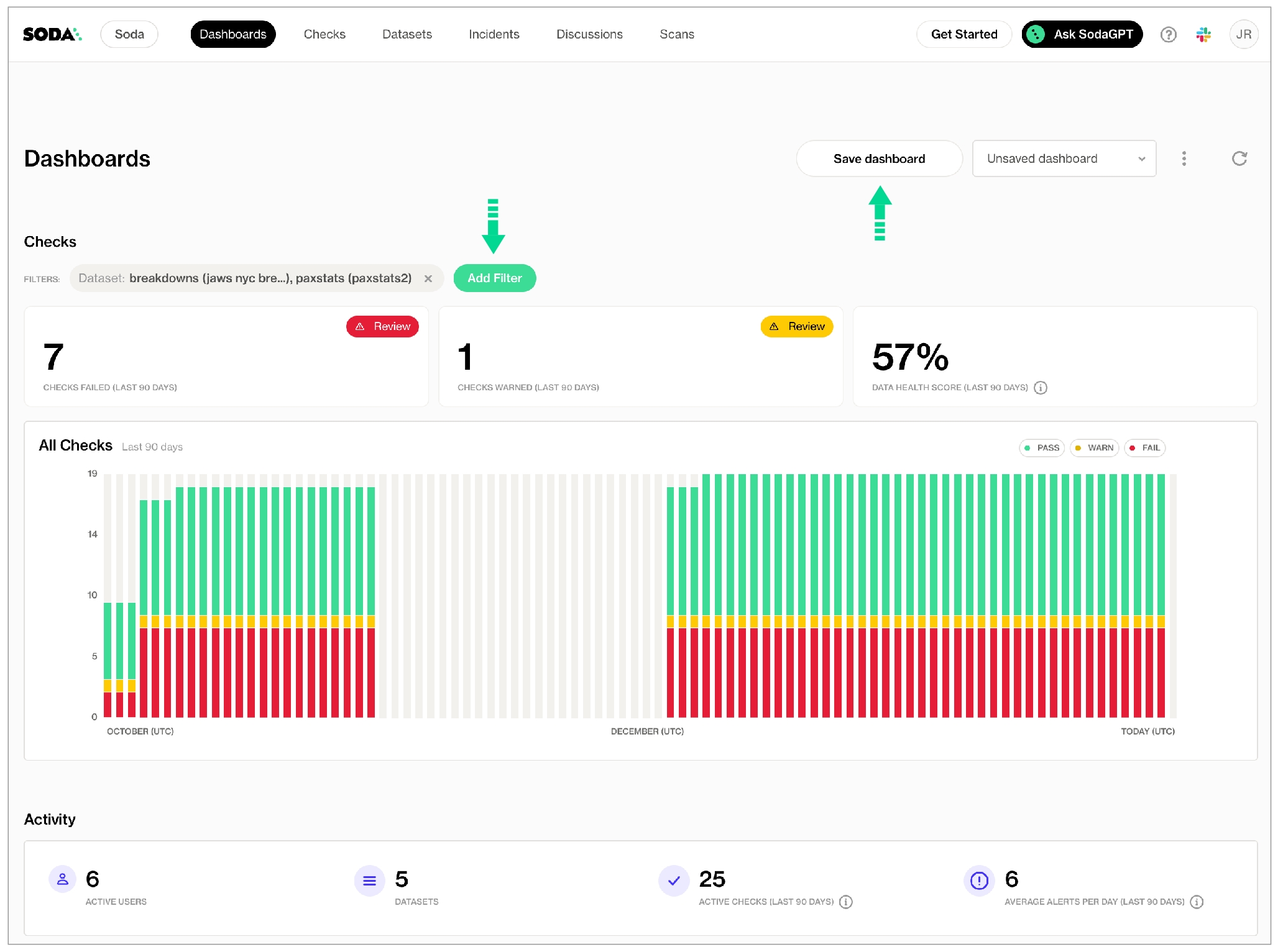

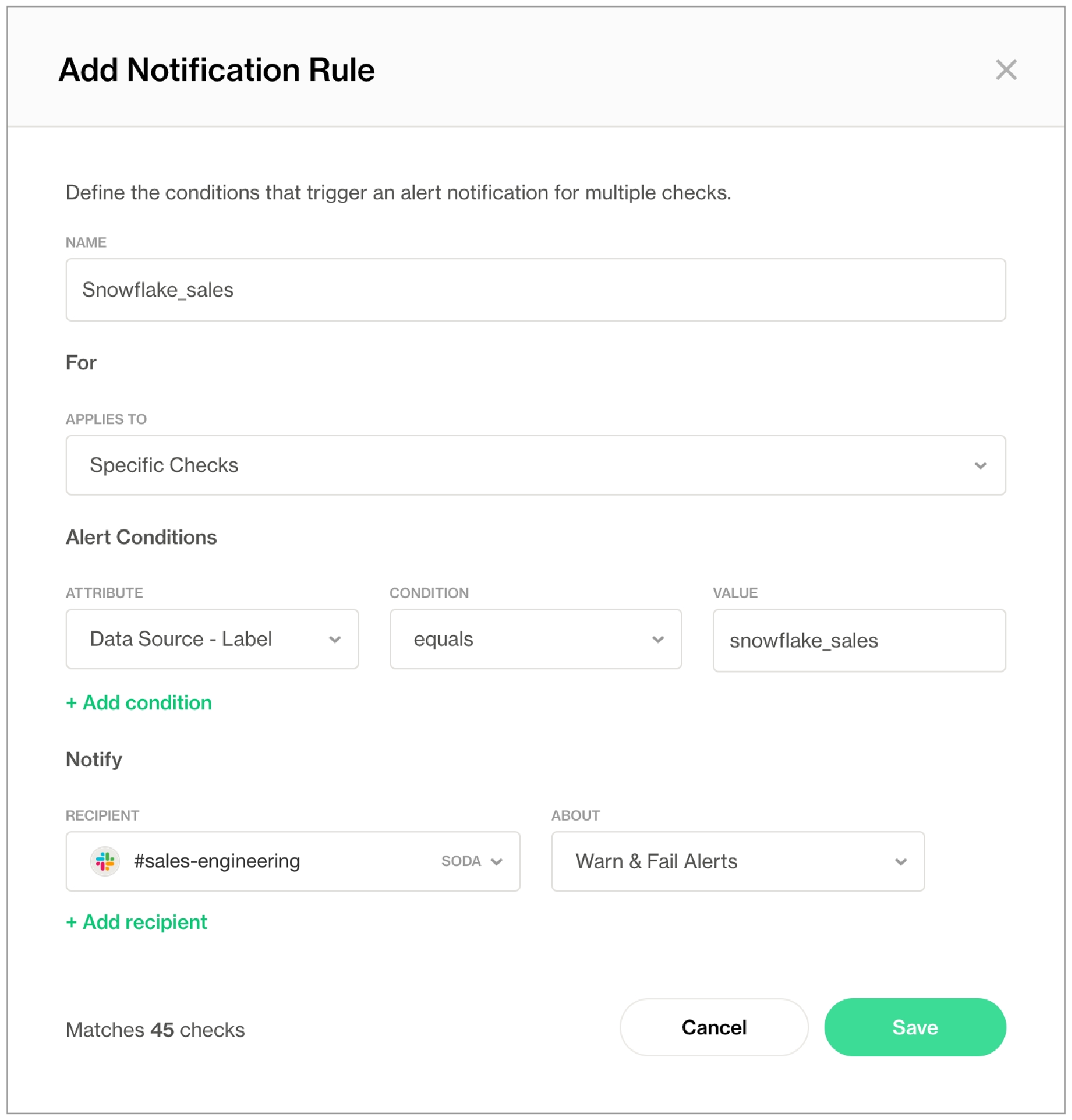

Use Soda Cloud to set alert notification rules for multiple checks across datasets in your account.

In Soda Cloud, you can define where and when to send alert notifications when check results warn or fail. You can define these parameters for:

agreements as you create or edit them; see Define SodaCL checks for Use an agreement.

no-code checks after you have created them; see Define SodaCL checks for Use a no-code check.

multiple checks by defining notification rules; read on!

For example, you can define a notification rule to instruct Soda Cloud to send an alert to your #sales-engineering Slack channel whenever a data quality check on the snowflake_sales data source fails.

By default, Soda Cloud establishes two notification rules on your Soda Cloud account by default. You can these rules if you wish.

Refer to for details on resource ownership.

For a new rule, you define conditions for sending notifications including the severity of a check result and whom to notify when bad data triggers an alert.

In Soda Cloud, navigate to your avatar > Notification Rules, then click New Notification Rule. Follow the guided steps to complete the new rule. Use the table below for insight into the values to enter in the fields and editing panels.

Navigate to your avatar > Notification Rules, then click the stacked dots at the right of a check and select Edit Notification Rule or Delete Notification Rule.

Learn more about SodaCL .

Integrate your Soda Cloud account with your .

Integrate your Soda Cloud account with a third-party tool using a .

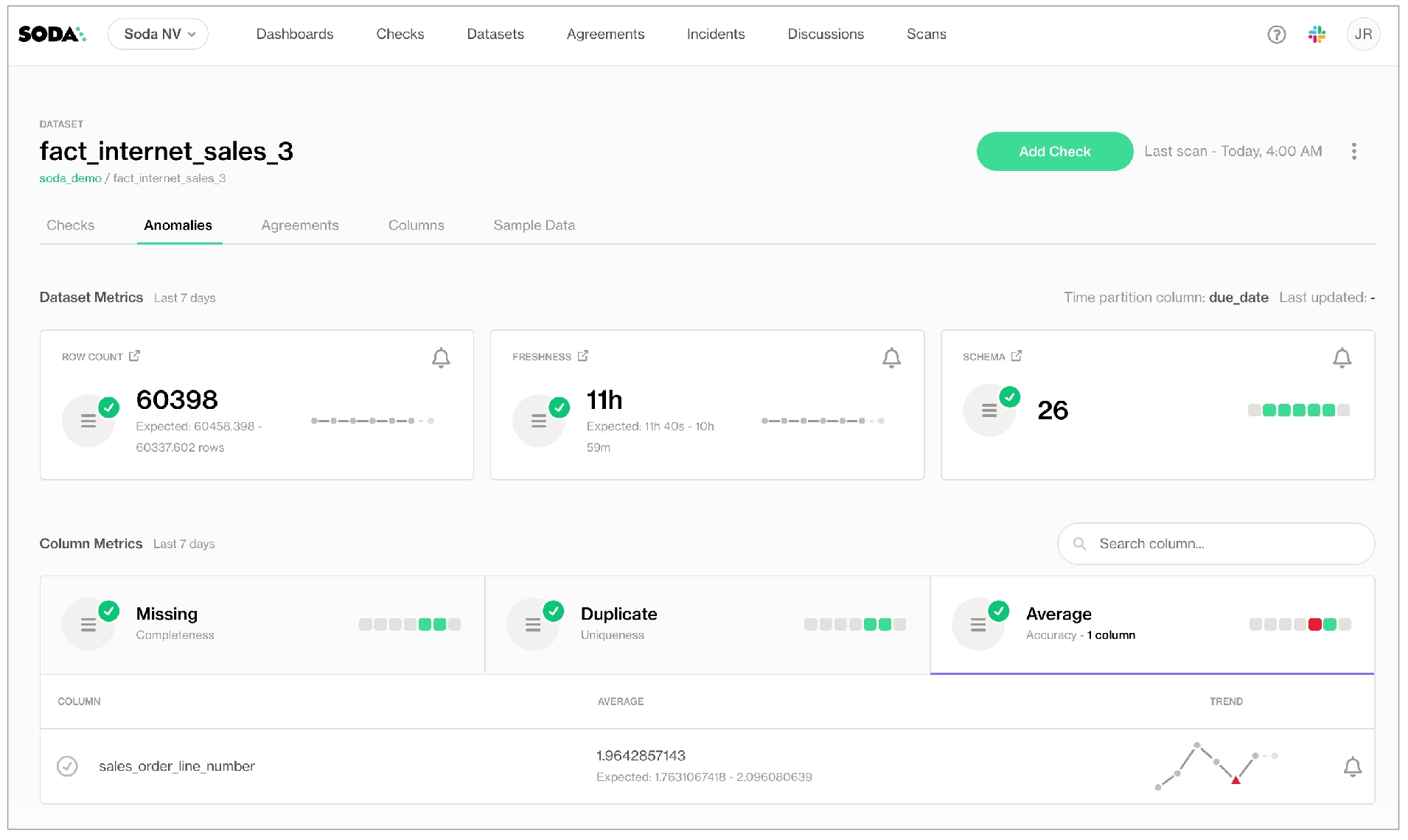

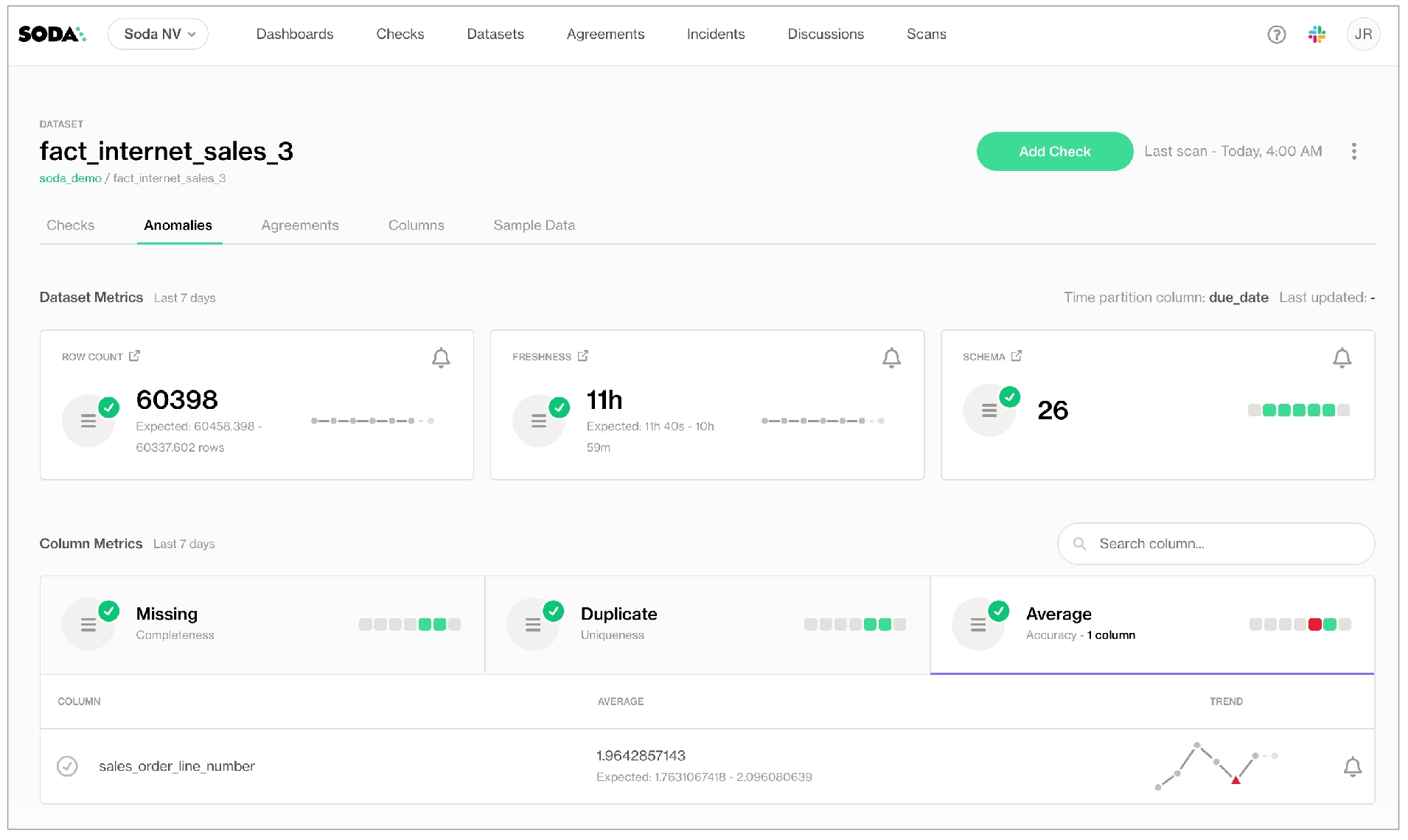

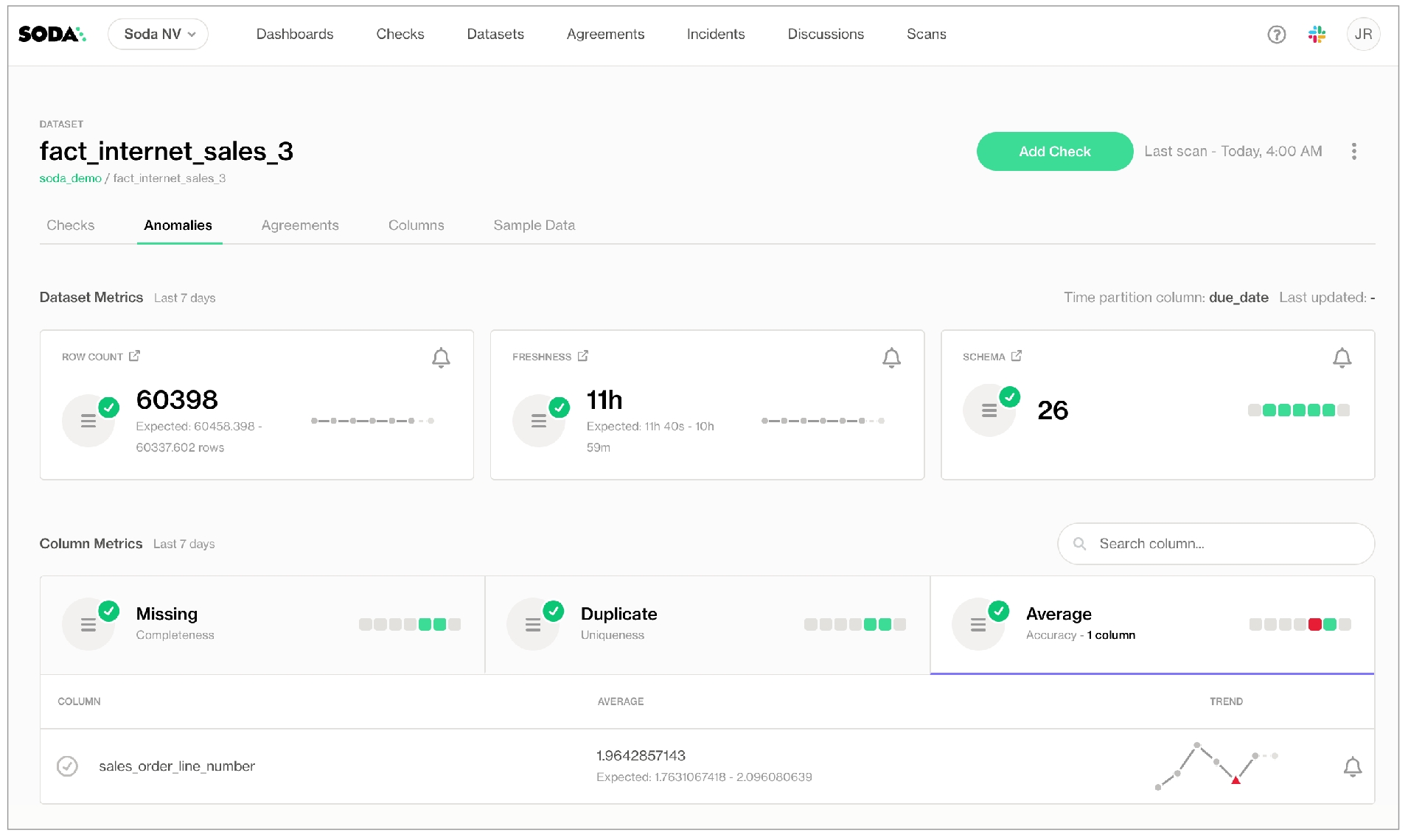

Use a SodaCL automated monitoring check to automatically check for row count anomalies and schema changes.

This feature is not supported in Soda Core OSS.

Migrate to Soda Library in minutes to start using this feature for free with a 45-day trial.

Use automated monitoring checks to instruct Soda to automatically check for row count anomalies and schema changes in a dataset.

✔️ Requires Soda Core Scientific (included in a Soda Agent) ✖️ Supported in Soda Core ✔️ Supported in Soda Library + Soda Cloud ✔️ Supported in Soda Cloud Agreements + Soda Agent ✖️ Available as no-code checks

When you add automated monitoring checks to a data source connected to your Soda Cloud account via a self-hosted agent, Soda prepares and executes two checks on all the datasets you indicated as included in the configuration.

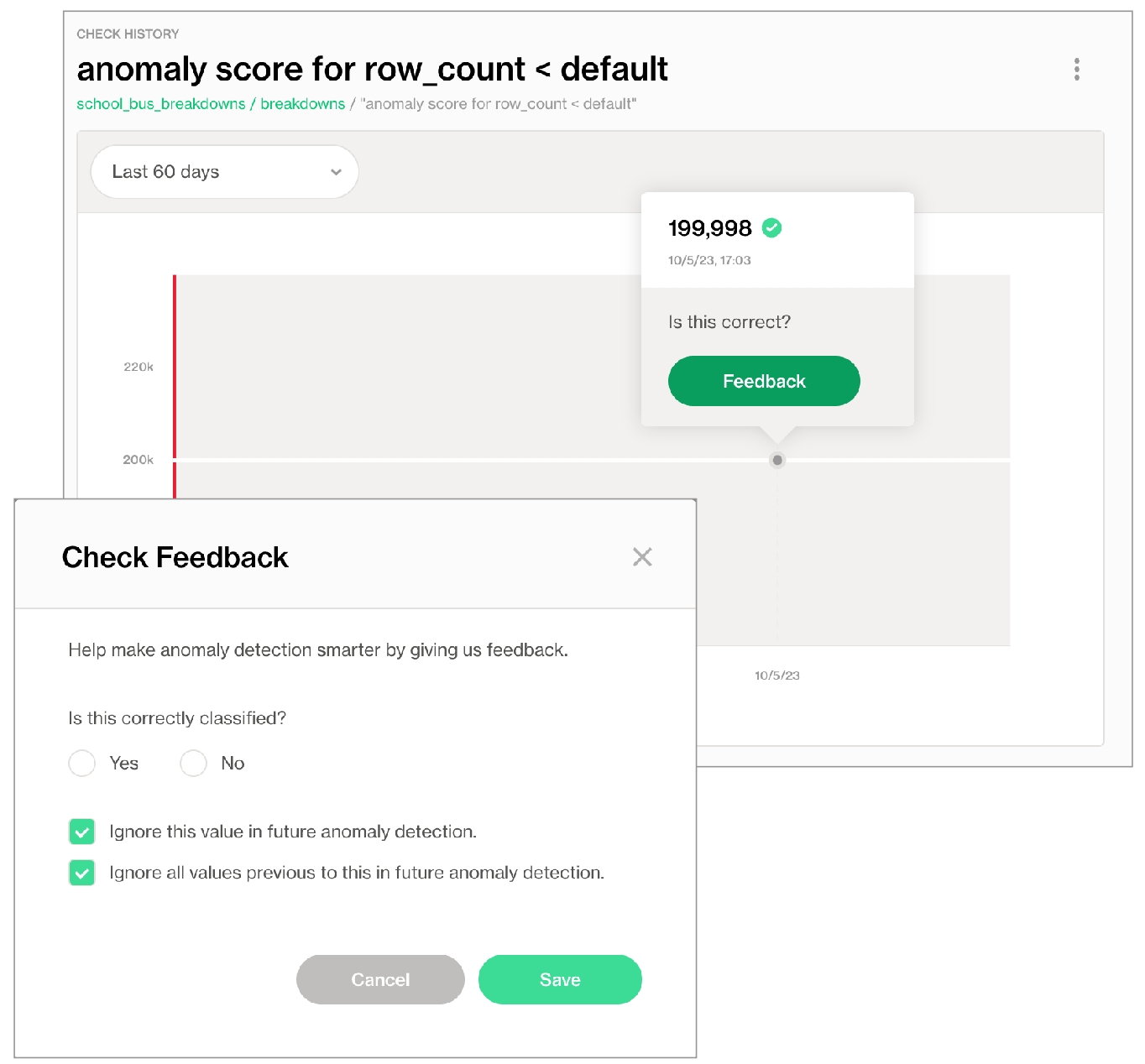

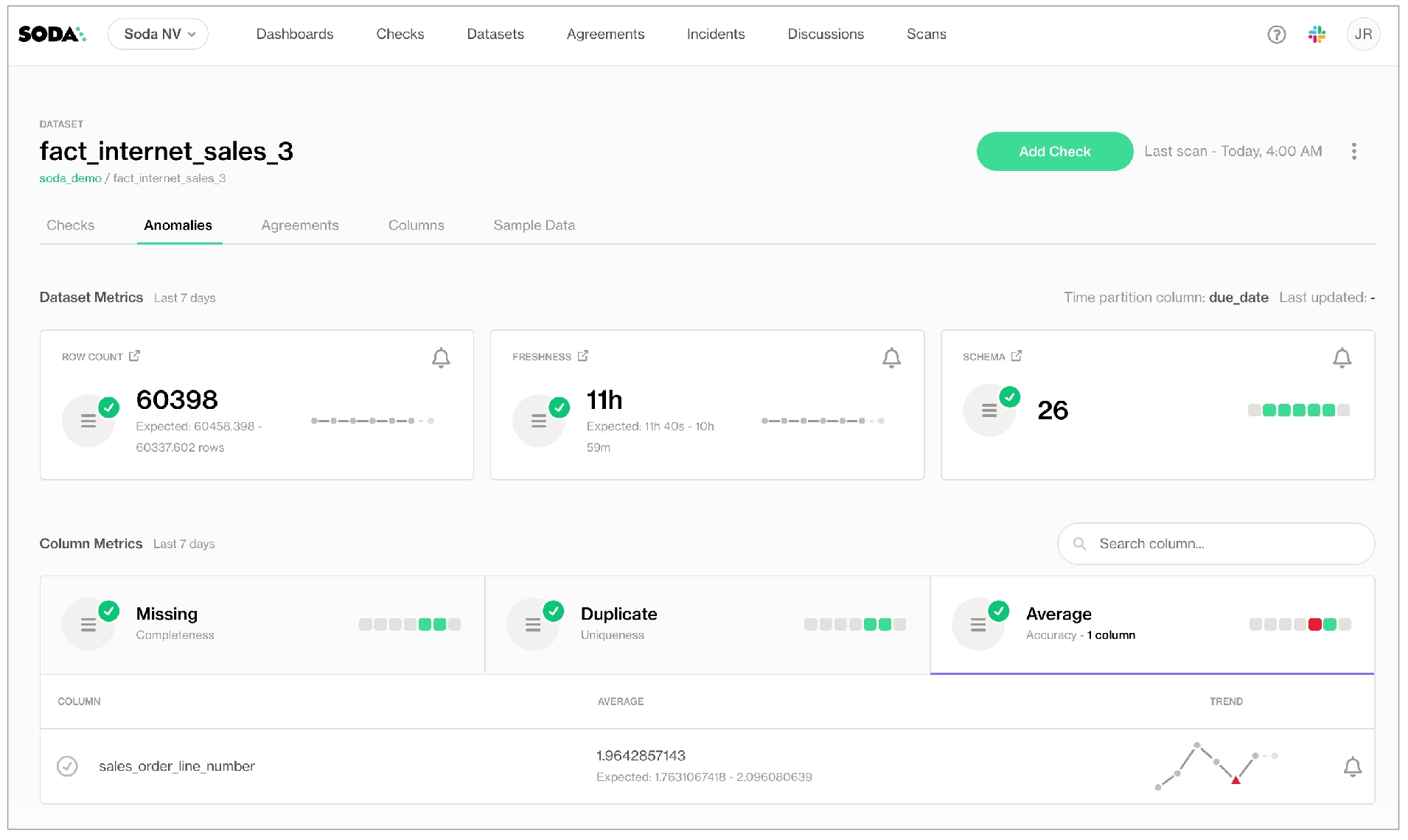

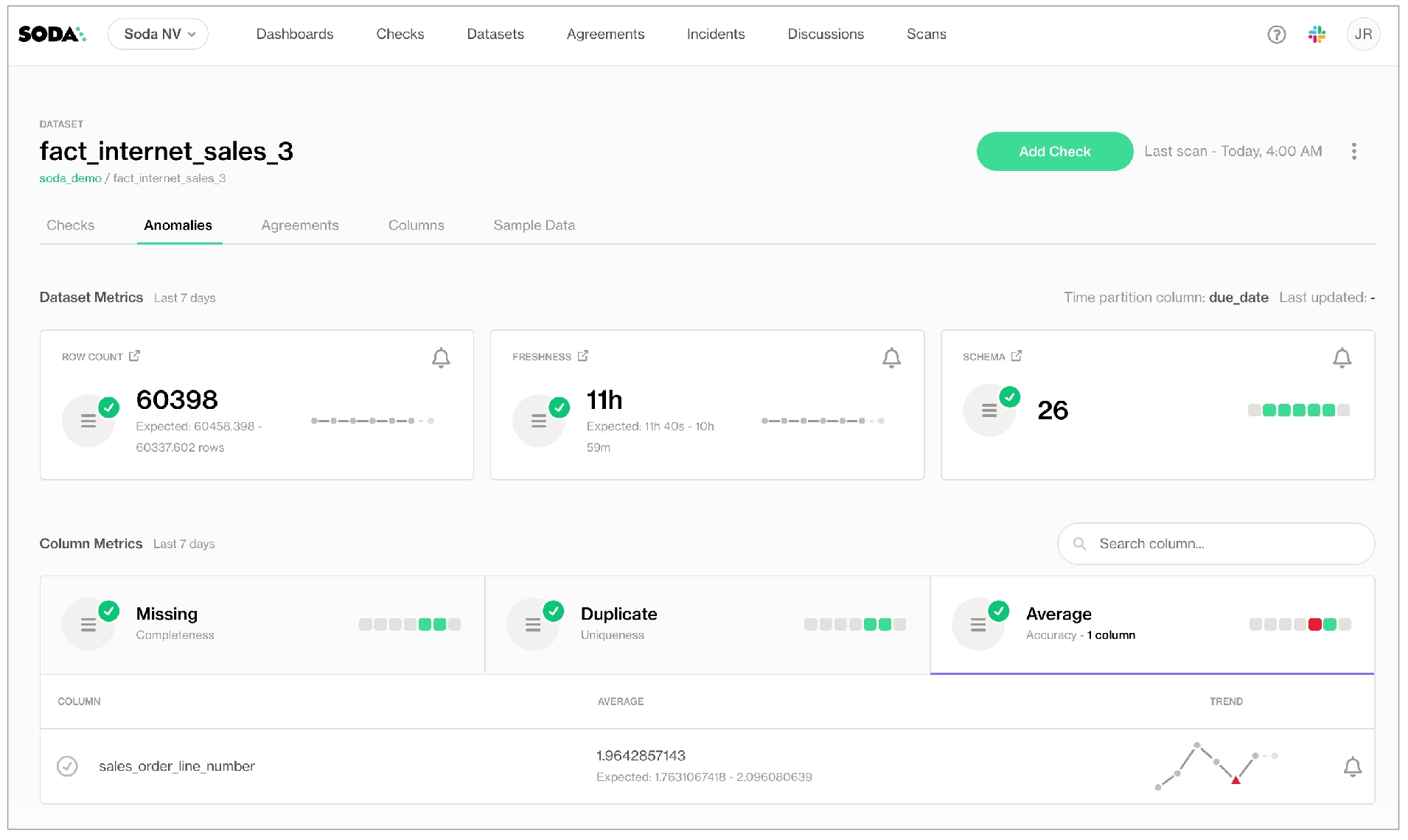

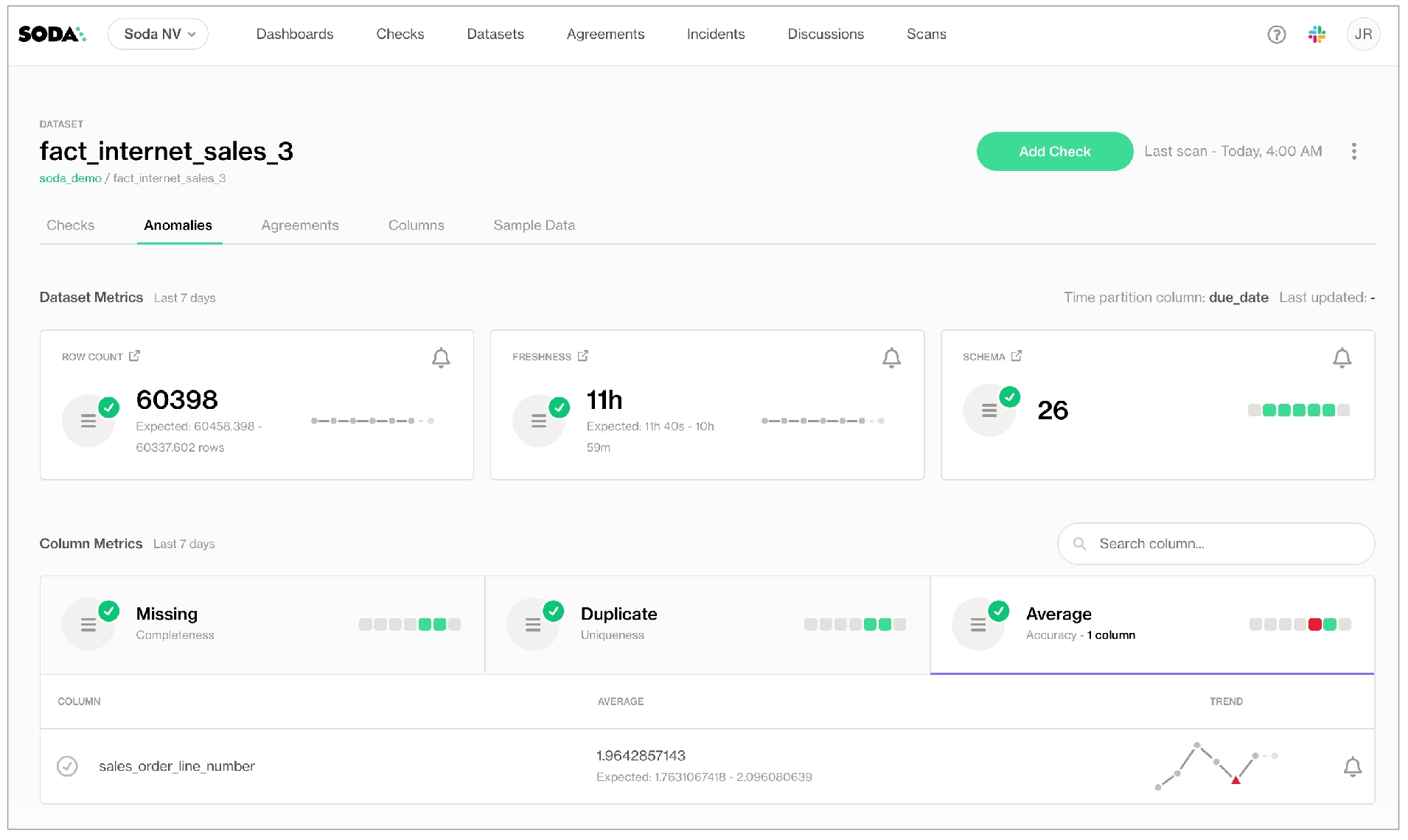

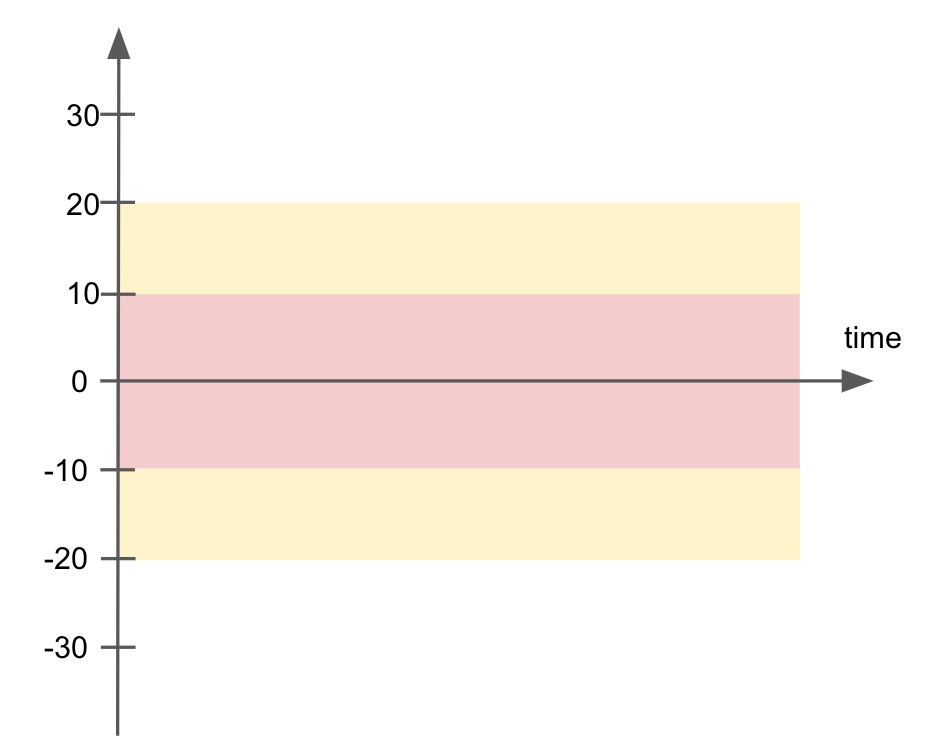

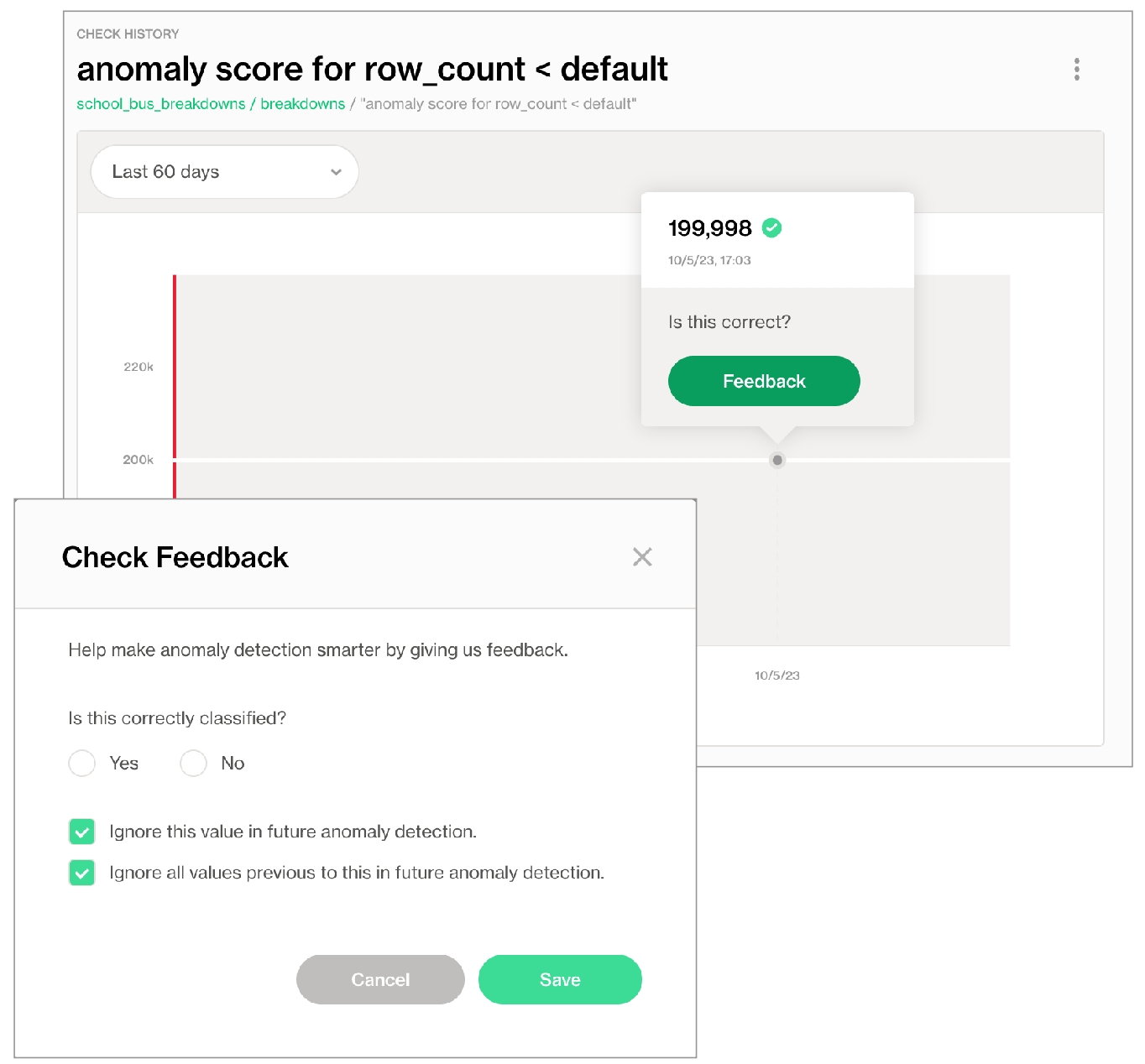

Anomaly score check on row count: This check counts the number of rows in a dataset during scan and registers anomalous counts relative to previous measurements for the row count metric. Refer to for details. Anomaly score checks require a minimum of four data points (four scans at stable intervals) to establish a baseline against which to gauge anomalies. If you do not see check results immediately, allow Soda Library to accumulate the necessary data points for relative comparison.

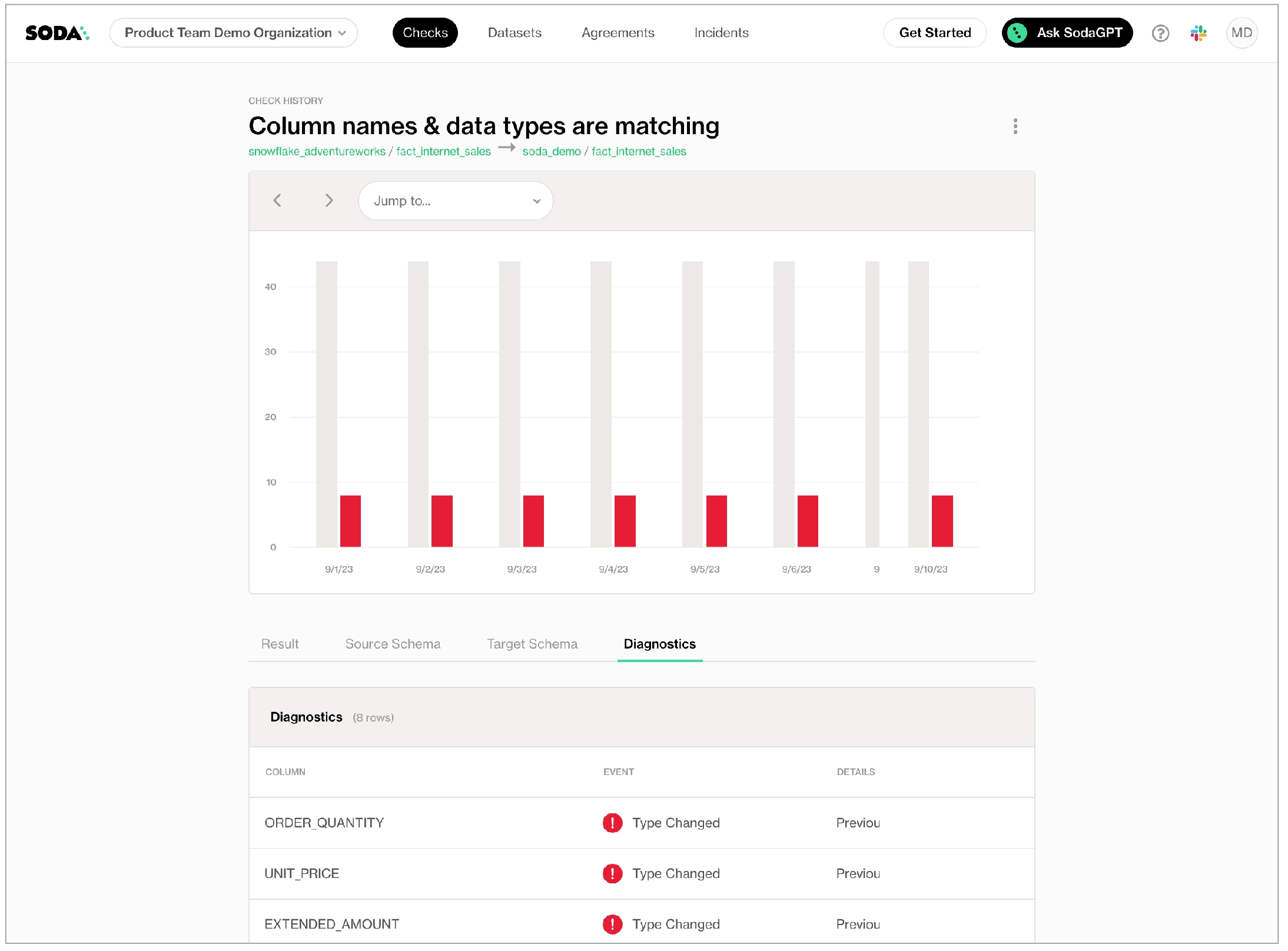

Schema evolution check: This check monitors schema changes in datasets, including column addition, deletion, data type changes, and index changes. By default, this automated check results in a failure if a column is deleted, its type changes, or its index changes; it results in a warning if a column is added. Refer to for details. Schema checks require a minimum of one data point to use as a baseline against which to gauge schema changes. If you do not see check results immediately, wait until after you have scanned the dataset twice.

Add automated monitoring checks as part of the guided workflow to create a new data source only in deployment models that use a self-hosted Soda agent, not a Soda-hosted Soda agent. For a Soda-hosted agent, consider using the automated for observability into basic data quality in your datasets.

If you are using a self-operated deployment model that leverages Soda Library, add the column profiling configuration outlined below to your checks YAML file.

In Soda Cloud, navigate to your avatar > Data Sources > New Data Source to begin.

In step 5. Check of the guided workflow, you have the option of listing the datasets to which you wish to automatically add anomaly score and schema evolution checks. (Note that if you have signed up for early access to for datasets, this Check tab is unavailable as Soda performs all automated monitoring automatically in the dashboards.)

The example check below uses a wildcard character (%) to specify that Soda Library executes automated monitoring checks against all datasets with names that begin with prod, and not to execute the checks against any dataset with a name that begins with test.

You can also specify individual datasets to include or exclude, as in the following example.

To review the check results for automated monitoring checks in Soda Cloud, you can:

navigate to the Checks dashboard to see the check results

navigate to the Datasets dashboard to see the check results for an individual dataset

To add those necessary quotes to dataset names that Soda acts upon automatically – discovering, profiling, or sampling datasets, or creating automated monitoring checks – you can add a quote_tables configuration to your data source, as in the following example.

If your dataset names include white spaces or use special characters, you must wrap those dataset names in quotes whenever you identify them to Soda, such as in a checks YAML file.

Learn more about the for datasets.

Reference .

Use a to gauge how recently your data was captured.

Use to compare the values of one column to another.

Soda enables you to seamlessly integrate data quality checks into the tools and workflows you already use across your data stack, whether aligning data governance efforts, collaborating across teams, or triggering automated CI/CD and incident-management workflows.

These integrations surface data quality metrics and rule definitions directly within your existing tools, automate alert notifications to your teams, and streamline the creation and tracking of incidents and tickets based on check results.

To get started, select the integration you need from the list below for detailed instructions, prerequisites, and troubleshooting tips:

Data catalogs & governance tools

Access data quality insights directly within your Alation catalog. Run Soda scans to surface quality metrics and rules in the context of data sources, datasets, or columns.

Surface Soda-driven quality checks and metrics alongside your Atlan metadata. Flag poor-quality data in lineage diagrams and explore data-profile details in Atlan.

Explore any of these guides to get started with your preferred integration, and unlock end-to-end data-quality observability across your stack.

From time to time, Soda may encounter runtime issues when it attempts to run a data quality scan on data in your data source. Issues such as unresponsive databases, or incorrectly defined checks may cause delays in the scan process which can result in excessive check execution times, sluggish database responsiveness due to heavy loads, or scheduling conflicts with other processes that cause bottlenecks.

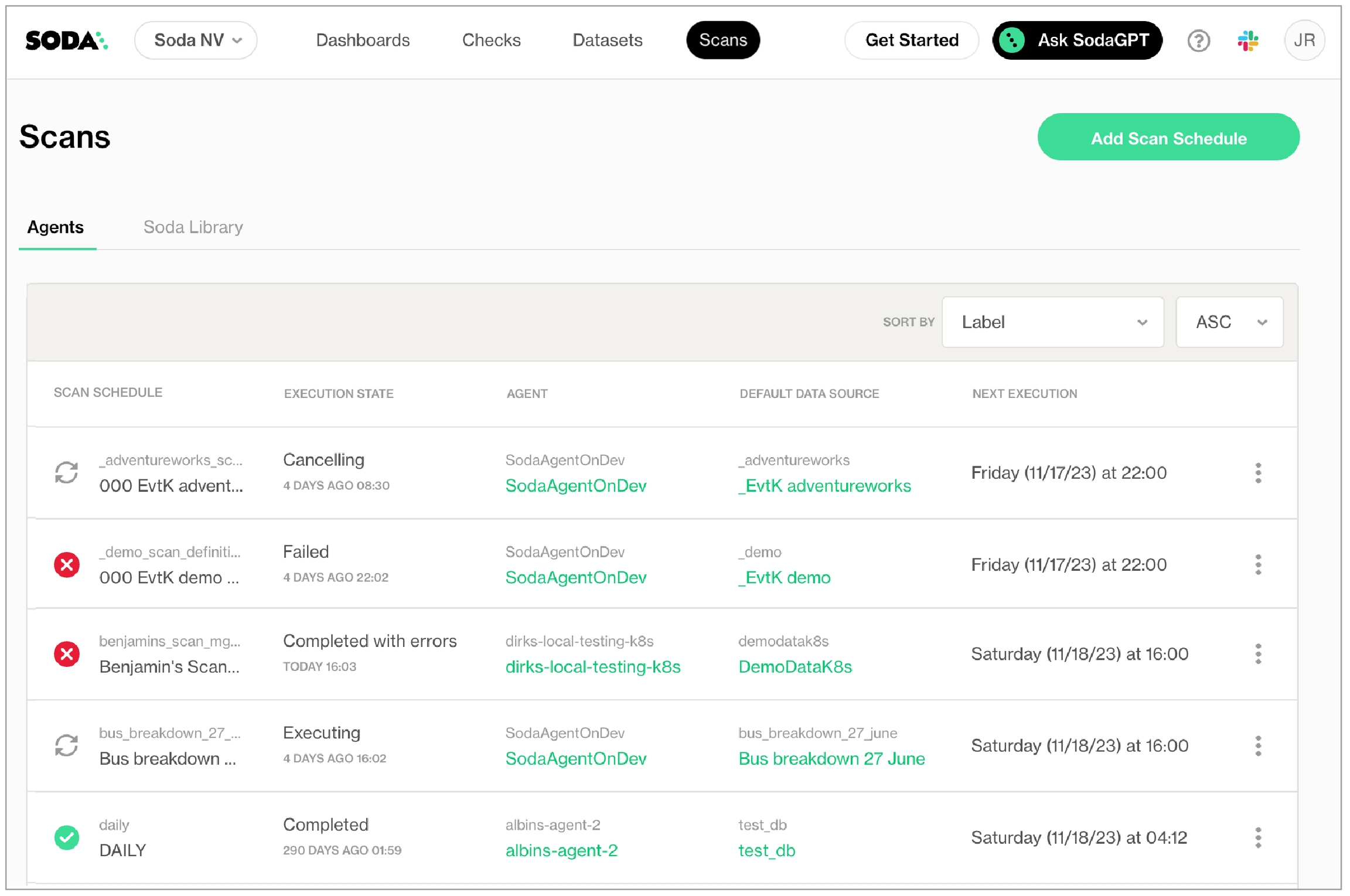

You can view the status of scans that are in progress, queuing, completed, or partially complete with errors in the Scans dashboard in Soda Cloud.

To provide visibility into slow, incomplete, or failed Soda scans, you can set up customized alerts notifications for each Scan Definition that you created using Soda Cloud.

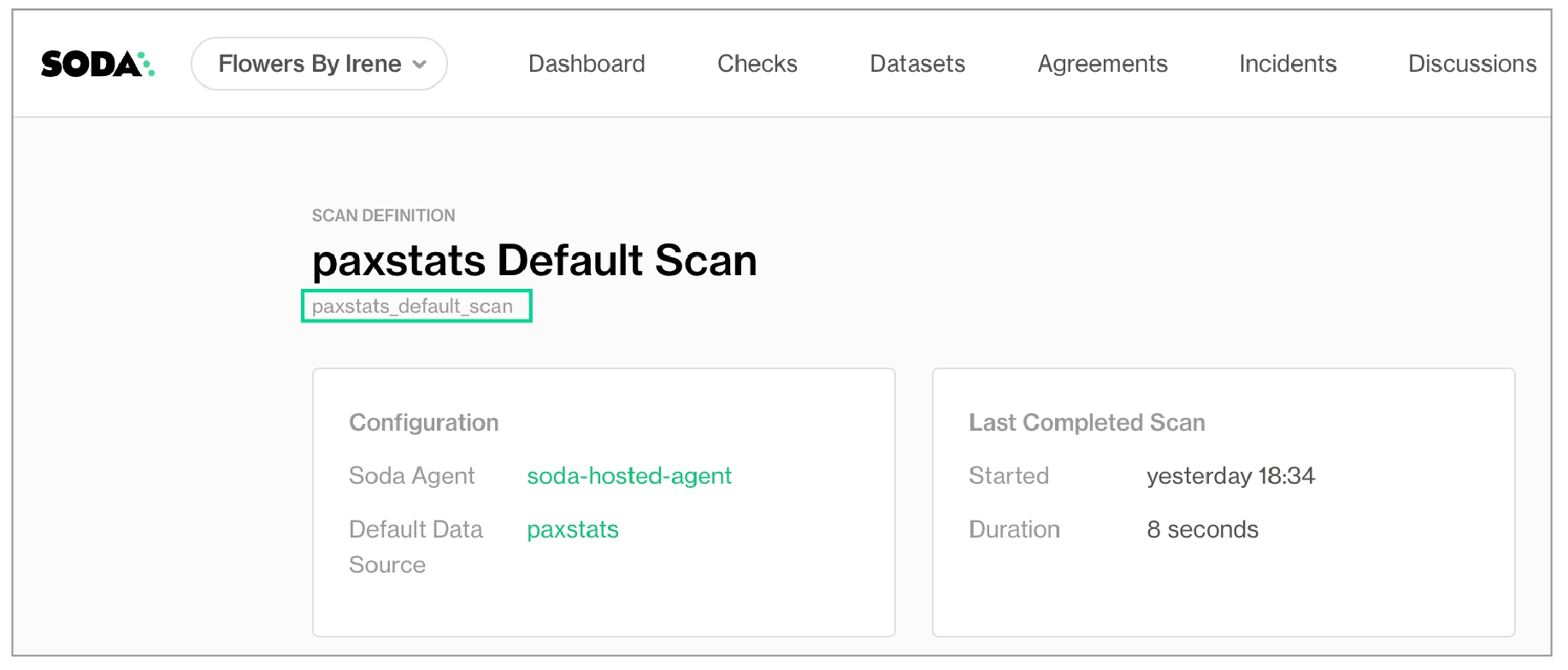

Log in to your Soda Cloud account, then navigate to Scans, and access the Agents tab. (You cannot set scan definition notifications for scans that you run using Soda Library.)

From the list, select one that uses the Scan Definition for which you wish to configure alerts.

On the scan definition's page, click the stacked dots at right, then select Edit Scan Definition.

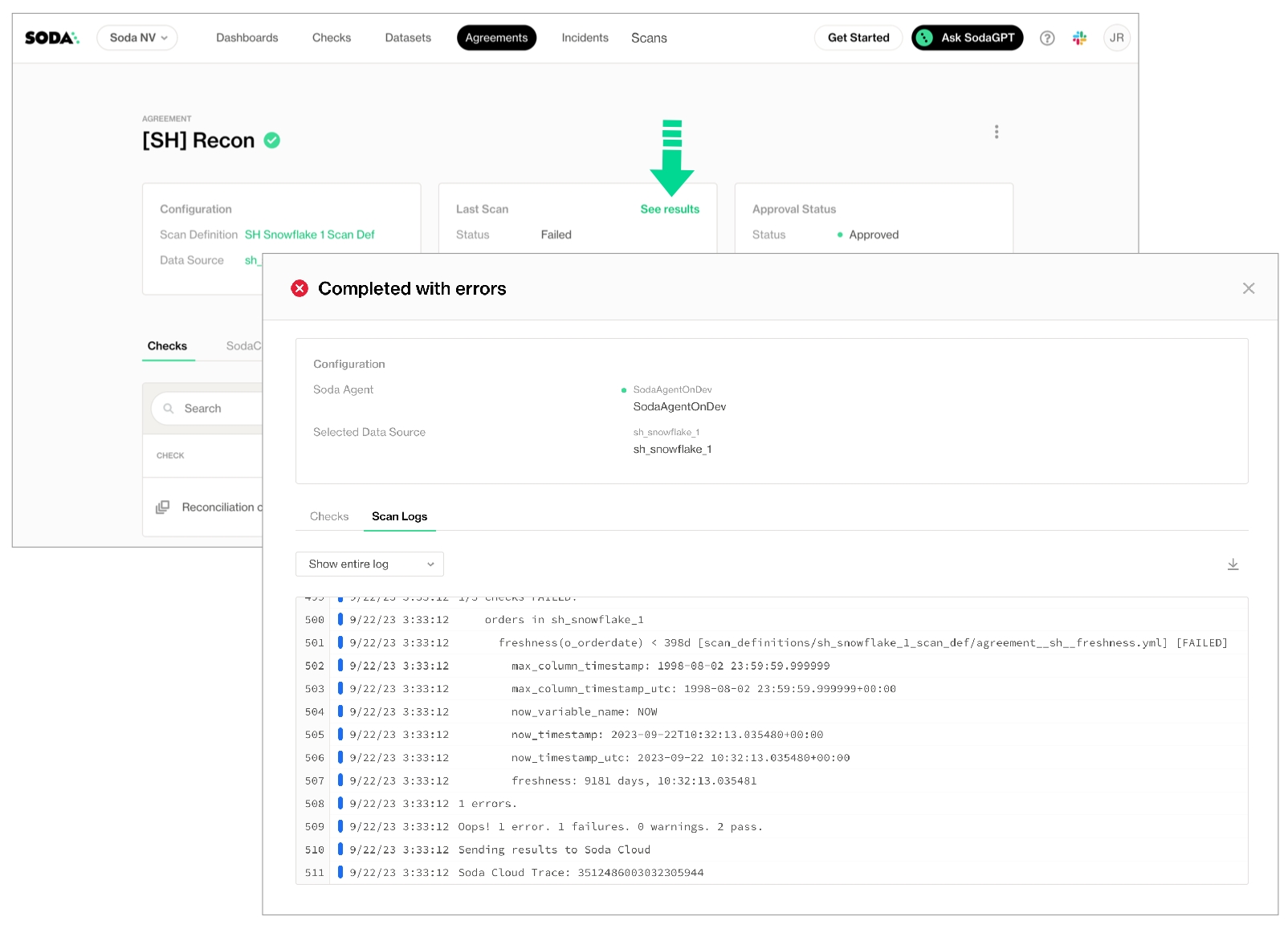

When you notice or receive a notification about a scan failure or delay, you can access the scan’s logs to investigate what is causing the issue.

Log in to your Soda Cloud account, then navigate to Scans, and access the Agents tab.

From the list of scan definitions, select the one that failed or timed out.

On the scan definitions’s page, in the list of scan results, locate the one that failed or timed out, then click the stacked dots to its right and select Scan Logs.

Review the scan log, using the filter to show only warning or errors if you wish, or downloading the log file for external analysis.

Use the Scans page to access an overview of the executing and queuing scans in your Soda Cloud account. If you wish, you can cancel and restart a scan to manage the order in the queue.

On the Scans page, select a scan that is in an Executing state.

On the scan definition's page, click Cancel Scan.

When the scan state reads Canceled, you can click Run Scan from the same page to restart the scan.

To prevent processing bottlenecks, configure a scan timeout on your Soda Agent to ensure that excessively long-running scans stop automatically. If you have configured a delayed completion alert using the procedure above, Soda uses this timeout value to trigger alert notifications.

By default, Soda sets the scan timeout to two hours; follow the steps below to adjust that value.

Log in to your Soda Cloud account, then navigate to your avatar, Data Sources, and access the Agents tab.

From the list of Agents, select the one for which you wish to adjust the timeout value.

On the agent's page, click the stacked dots at right, then select Edit Agent.

Use the dropdown to adjust the value of Timeout Scans After

Best practice dictates that to enhance scan efficiency, you avoid scheduling resource-intensive tasks, such as , concurrently with checks. This practice minimizes the likelihood of delays caused by resource contention, ensuring smoother execution of scans.

Do not set all of your scan definitions to run at the same time, particularly if the scans use the same Soda Agent. Mindfully stagger scan definition times to more evenly distribute executions and reduce the risk of bottlenecks, delays, and failed scans.

As the volume of checks a scan executes organically increases over time, scans may take longer to execute. If your scans are timing out too frequently, adjust the to a higher threshold.

for checks that fail or warn during a Soda scan.

Configure a webhook to connect Soda to your ServiceNow account.

Configure a webhook in Soda Cloud to connect to your ServiceNow account.

In ServiceNow, you can create a Scripted REST API that enables you to prepare a resource to work as an incoming webhook. Use the ServiceNow Resource Path in the URL field in the Soda Cloud integration setup.

This example offers guidance on how to set up a Scripted REST API Resource to generate an external link which Soda Cloud displays in the Incident Details; see image below. When you change the status of a Soda Cloud incident, the webhook also updates the status of the SNOW issue that corresponds with the incident.

Refer to Event payloads for details information.

The following steps offer a brief overview of how to set up a ServiceNow Scripted REST API Resource to integrate with a Soda Cloud webhook. Reference the ServiceNow documentation for details:

and

In ServiceNow, start by navigating to the All menu, then use the filter to search for and select Scripted REST APIs.

Click New to create a new scripted REST API. Provide a name and API ID, then click Submit to save.

In the Scipted Rest APIs list, find and open your newly-created API, then, in the Resources tab, click New to create a new resource.

As a business user, learn more about in Soda Cloud.

Set that apply to multiple checks in your account.

Learn more about creating, tracking, and resolving data quality .

Access a list of that Soda Cloud supports.

Learn how to double-onboard a data source to leverage all the features supported by Soda Agents.

To scan your data for quality, Soda must connect to a data source using connection configurations (host, port, login credentials, etc.) that you either define in Soda Cloud during onboarding using a Soda Agent, or in a configuration YAML file you reference during programmatic or CLI scans using Soda Library. Soda recognizes each data source you onboard as an independent resource in Soda Cloud, where it displays all scan results and failed row samples for all data sources regardless of onboarding method.

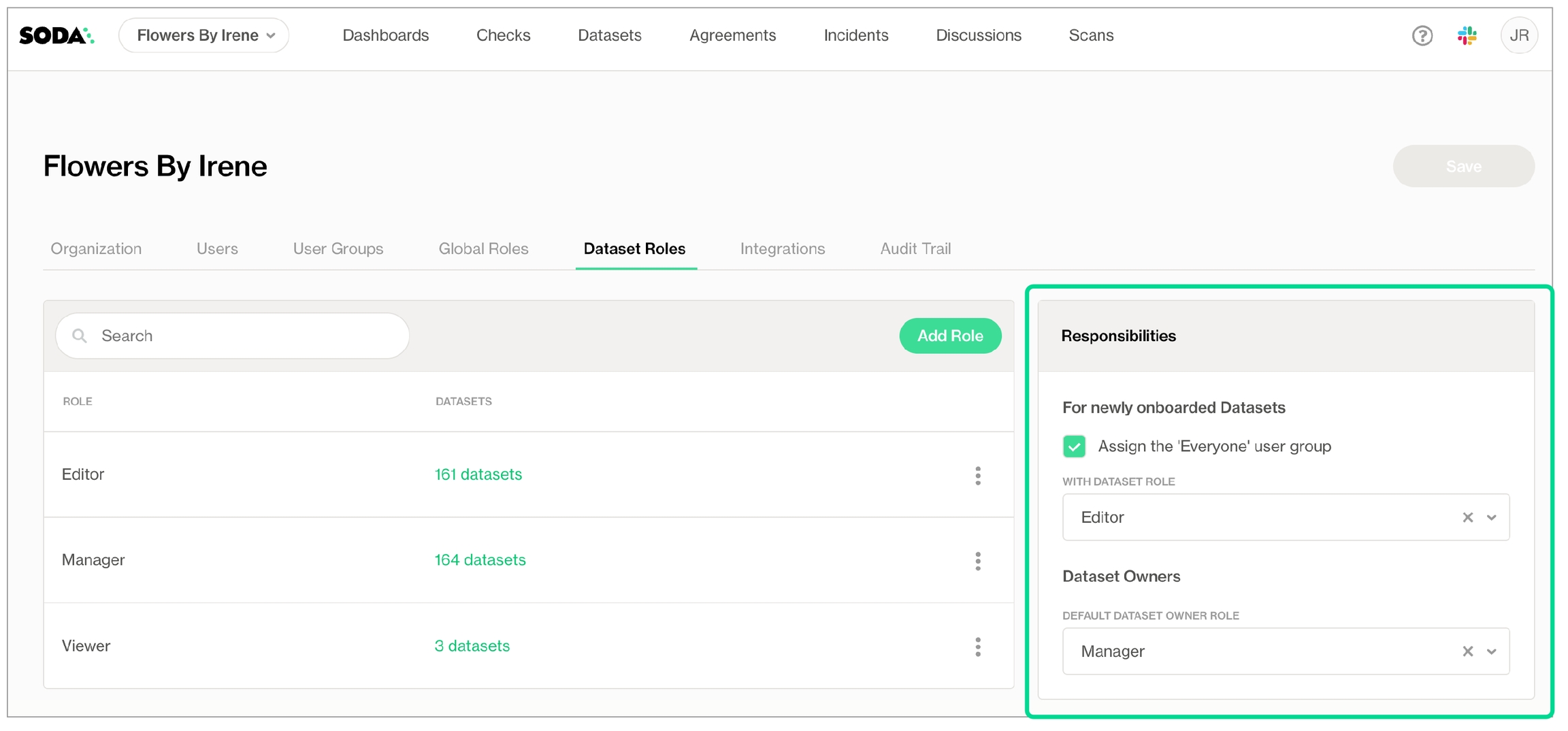

However, data sources you connect via a Soda agent using the guided workflow in Soda Cloud support several features which data sources you connect via Soda Library do not, including:

Available in 2025

If you have onboarded a data source via Soda Library but you wish to take advantage of the features available to Soda Agent-onboarded data sources, you can double-onboard an existing data source.

See also:

See also:

See also: in Soda Cloud

You , you have configured it to connect to your data source, and you have run at least one programmatically or via the Soda Library CLI.

You have deployed a helm chart in a Kubernetes cluster in your cloud services environment OR Someone with Soda Admin privileges in your organization’s Soda Cloud account has navigated to your avatar > Organization Settings check the box to Enable Soda-hosted Agent; see .

You have access to the connection configurations (host, port, login credentials, etc.) for your data source.

1 MS SQL Server with Windows Authentication does not work with Soda Agent out-of-the-box.

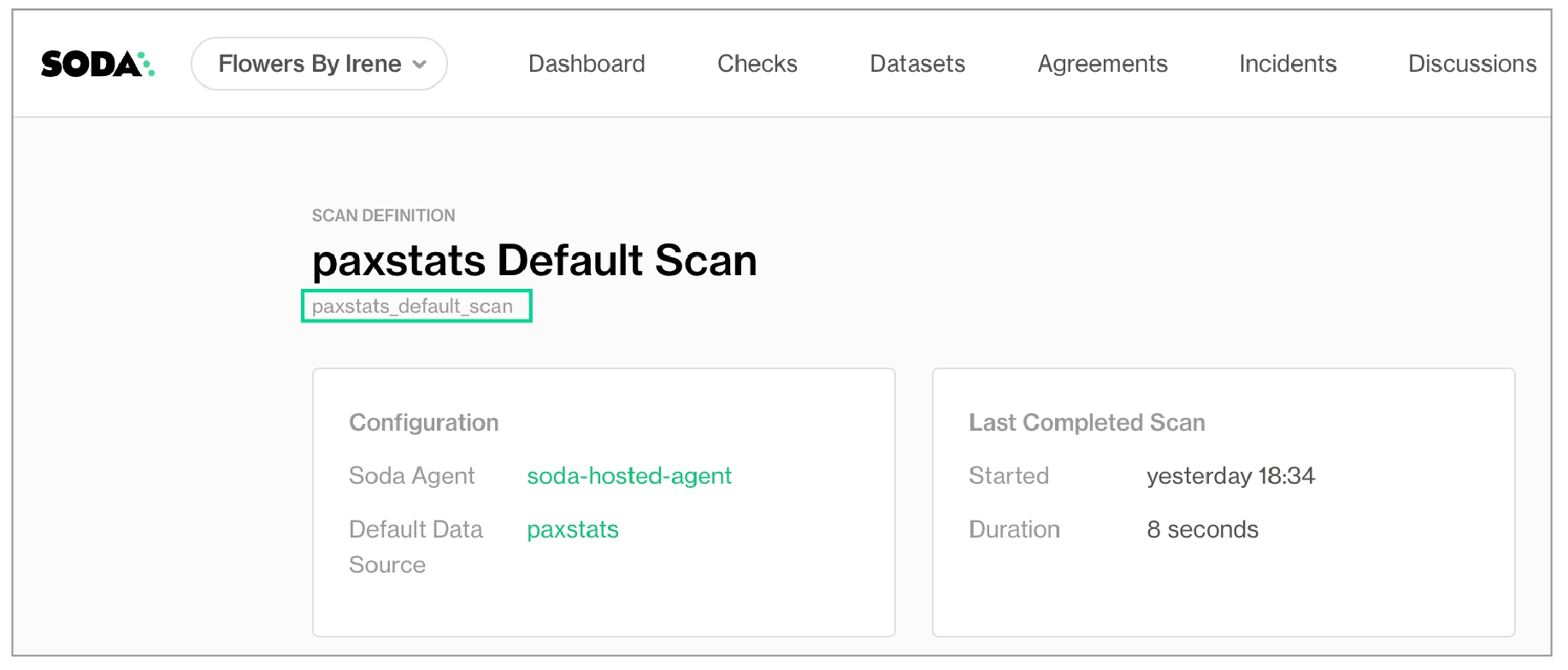

Log in to Soda Cloud, then navigate to your avatar > Data Sources.

From the list of data sources connected to your Soda Cloud account, click to select and open the one you onboarded via Soda Library and now wish to double-onboard via a Soda Agent.

Follow the guided workflow to onboard the existing data source via a Soda Agent, starting by using the dropdown to select the Default Scan Agent you wish to use to connect to the data source.

define a schedule for your default scan definition

provide connection configuration details for the data source such as name, schema, and login credentials, and test the connection to the data source

profile the datasets in the data source to gather basic metadata about the contents of each

identify the datasets to which you wish to apply automated monitoring for anomalies and schema changes

Save your changes, then navigate to the Datasets page and select a dataset in the data source you just double-onboarded.

(Optional) If you wish, and if you have for the feature, you can follow the instructions to for the dataset.

(Optional) Click Add Check and begin adding to the dataset.

Known issue: Double-onboarding a data source renders Soda Library API keys invalid. After double-onboarding a data source, if you run a programmatic or CLI scan of that data source using Soda Library, an error appears to indicate that the API keys are invalid. As a workaround, in Soda Cloud, then, in your configuration YAML, replace the old API key values with the newly-generated ones.

Learn more about for observability.

Access configuration details to connect Soda to a Microsoft Fabric data source.

Install package: soda-fabric

Soda support for Fabric data source is based on soda-sqlserver package.

data_source my_datasource_name:

type: fabric

host: host

port: '1433'

username: simple

password: simple_pass

database: database

schema: dbo

trusted_connection: false

encrypt: false

trust_server_certificate: false

driver: ODBC Driver 18 for SQL Server

scope: DW

connection_parameters:

multi_subnet_failover: true

authentication: sqlIf you have integrated Soda Cloud with Slack, you can use an Incident’s built-in ability to create a channel that your team can use to investigate an issue.

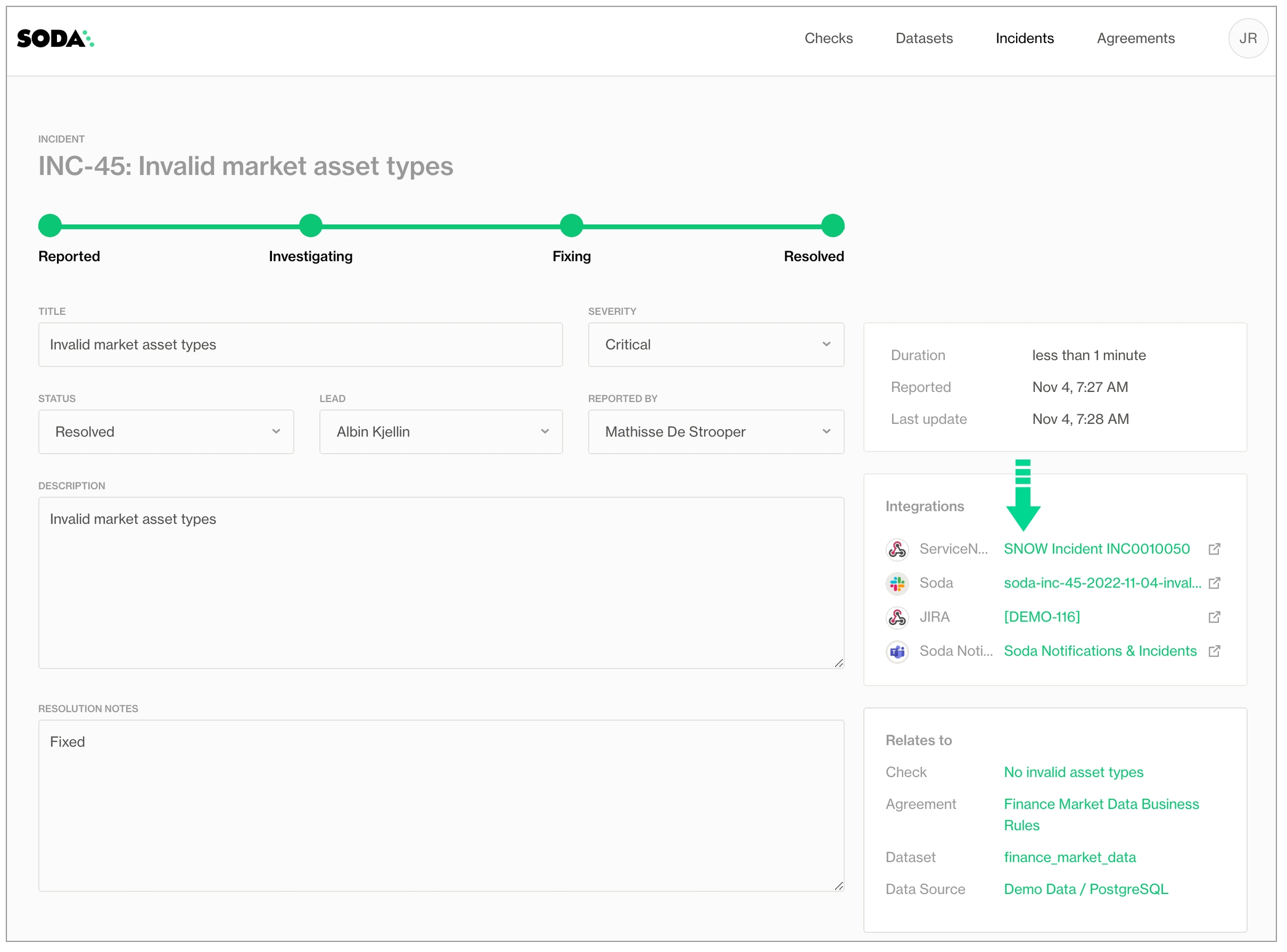

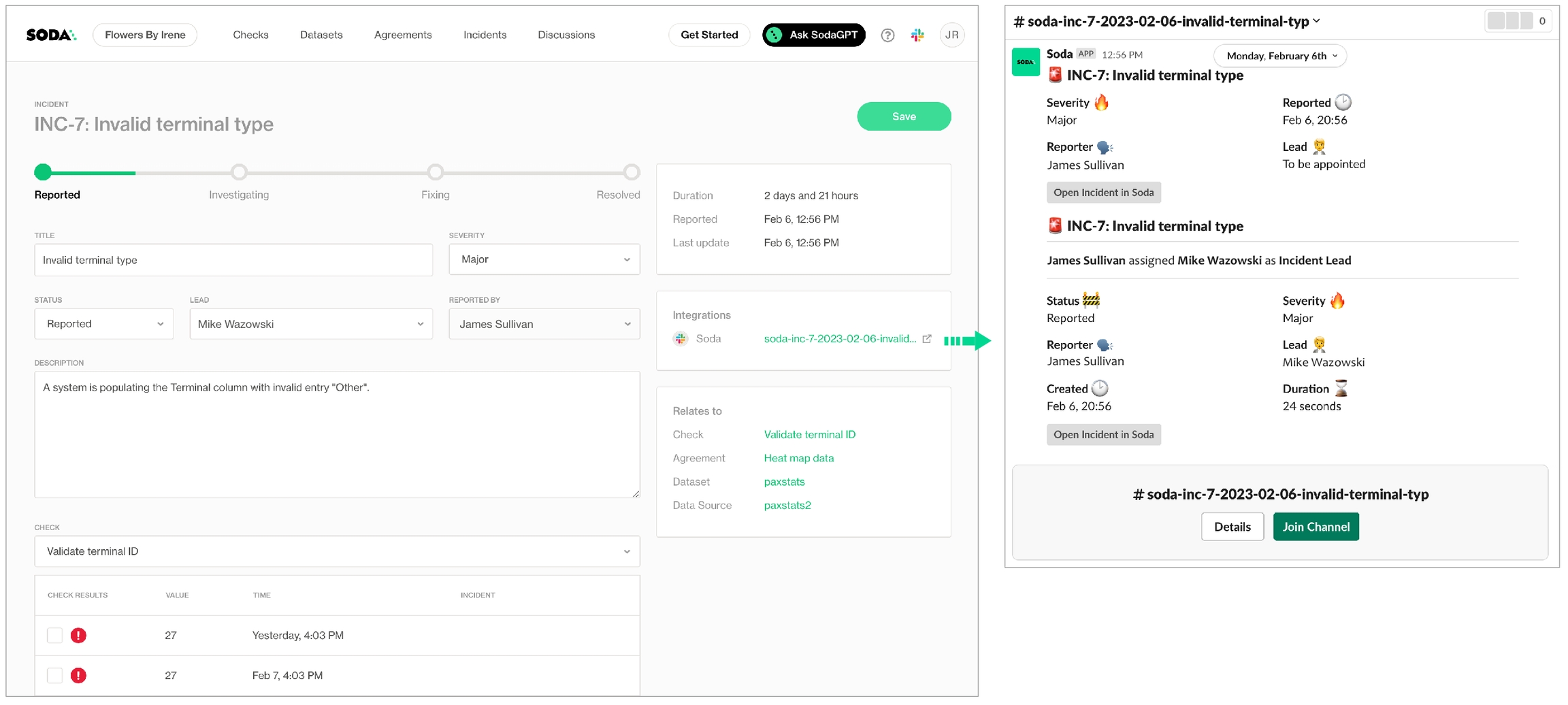

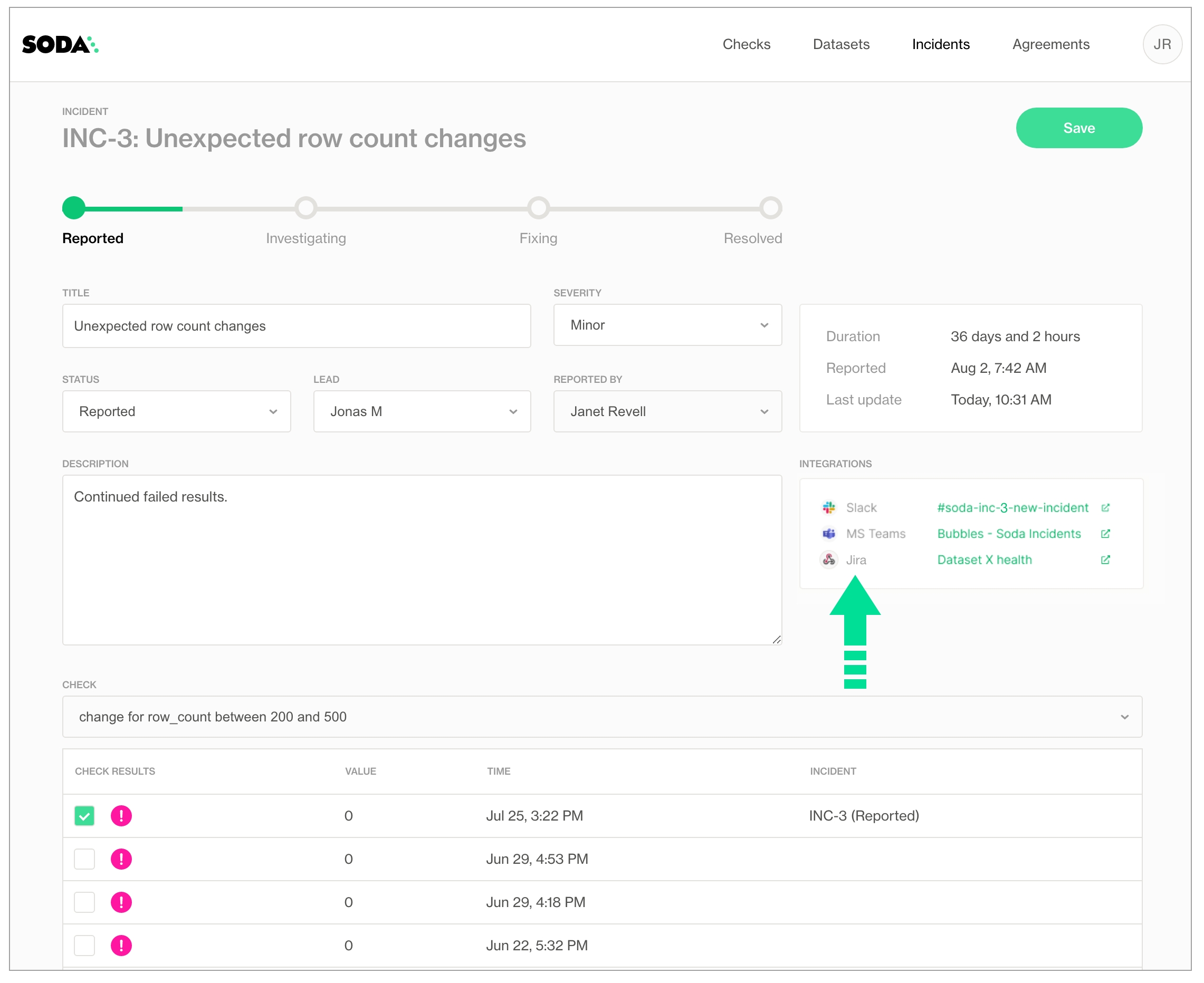

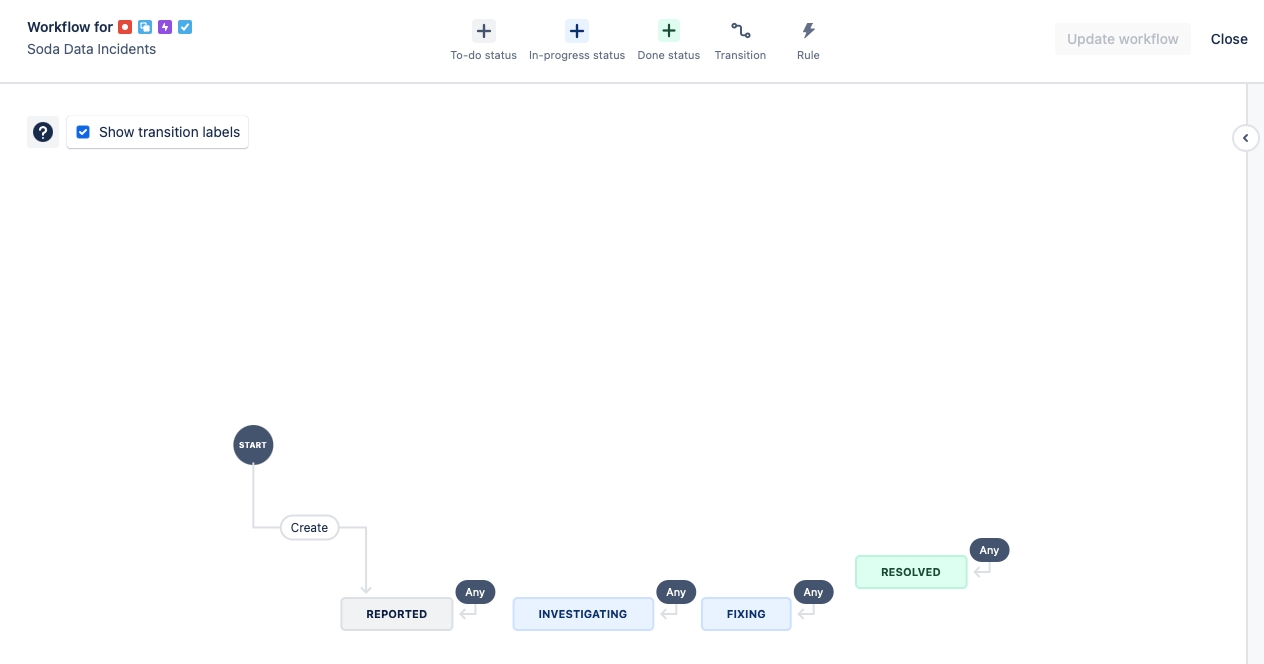

When Soda runs a scan to execute the SodaCL checks you defined, Soda Cloud displays the checks and their latest scan results in the Checks dashboard. For a check that failed or triggered a warning, you have the option of creating an Incident for that check result in Soda Cloud to track your team's investigation and resolution of a data quality issue.

If you have integrated your Soda Cloud account with a Slack workspace, or MS Teams channel, or another third-party messaging or ticketing tool that your team uses such as Jira or ServiceNow, you can use an incident’s built-in ability to create an incident-specific link where you and your team can collaborate on the issue investigation.

Log in to your Soda Cloud account, then navigate to the Checks dashboard.

For the check you wish to investigate, click the stacked dots at right, then select Create Incident. Provide a Title, Severity, and Description of your new incident, then save.

In the Incident column of the check result, click the Incident link to access the Incident page where you can record the following details:

As your team works through the investigation of an Incident, use the Incident's Status field to keep track of your progress.

In the Incidents dashboard, review all Incidents, their severity and status, and the assigned lead. Sort the list of Incidents by severity.

From an Incident's page, link other check results to the same Incident to expand the investigation landscape.

If you opened a Slack channel to investigate the incident, Soda archives the channel when you set the

to facilitate your search for the right data.

for a check result.

Collaborate with your team using a .

Integrate Soda with your or .

Access configuration details to connect Soda to a ClickHouse data source.

Because ClickHouse is compatible with MySQL wire protocol, Soda offers indirect support for ClickHouse data sources using the soda-mysql package.

To confirm that you have correctly configured the connection details for the data source(s) in your configuration YAML file, use the test-connection command. If you wish, add a -V option to the command to returns results in verbose mode in the CLI.

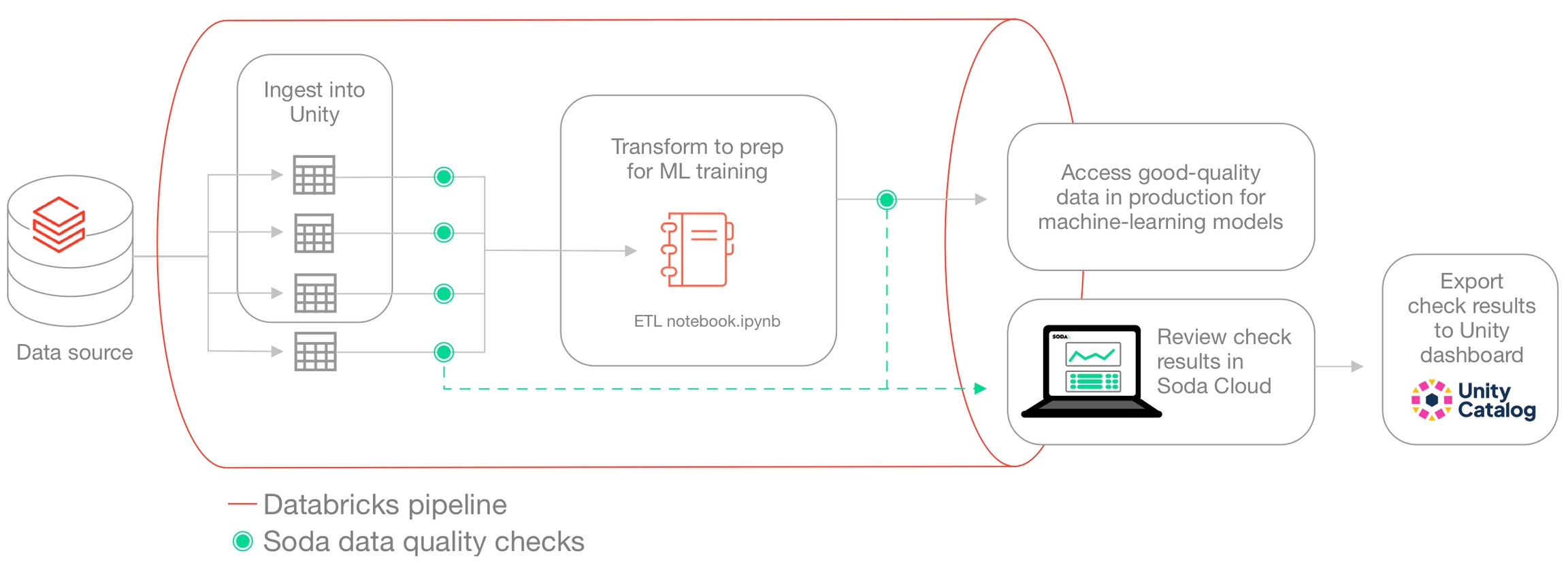

Use this guide to invoke Soda data quality tests from inside a Databricks notebook.

Use this guide to install and set up Soda in a Databricks notebook so you can run data quality tests on data in a Spark data source.

🎥 Watch a video that demonstrates how to add Soda to your Databricks pipeline:

The instructions below offer Data Engineers an example of how to write Python in a Databricks notebook to set up Soda, then write and execute scans for data quality in Spark.

This example uses a programmatic deployment model which invokes the Soda Python library, and uses Soda Cloud to validate a commercial usage license and display visualized data quality test results. See:

Access configuration details to connect Soda to an Athena data source.

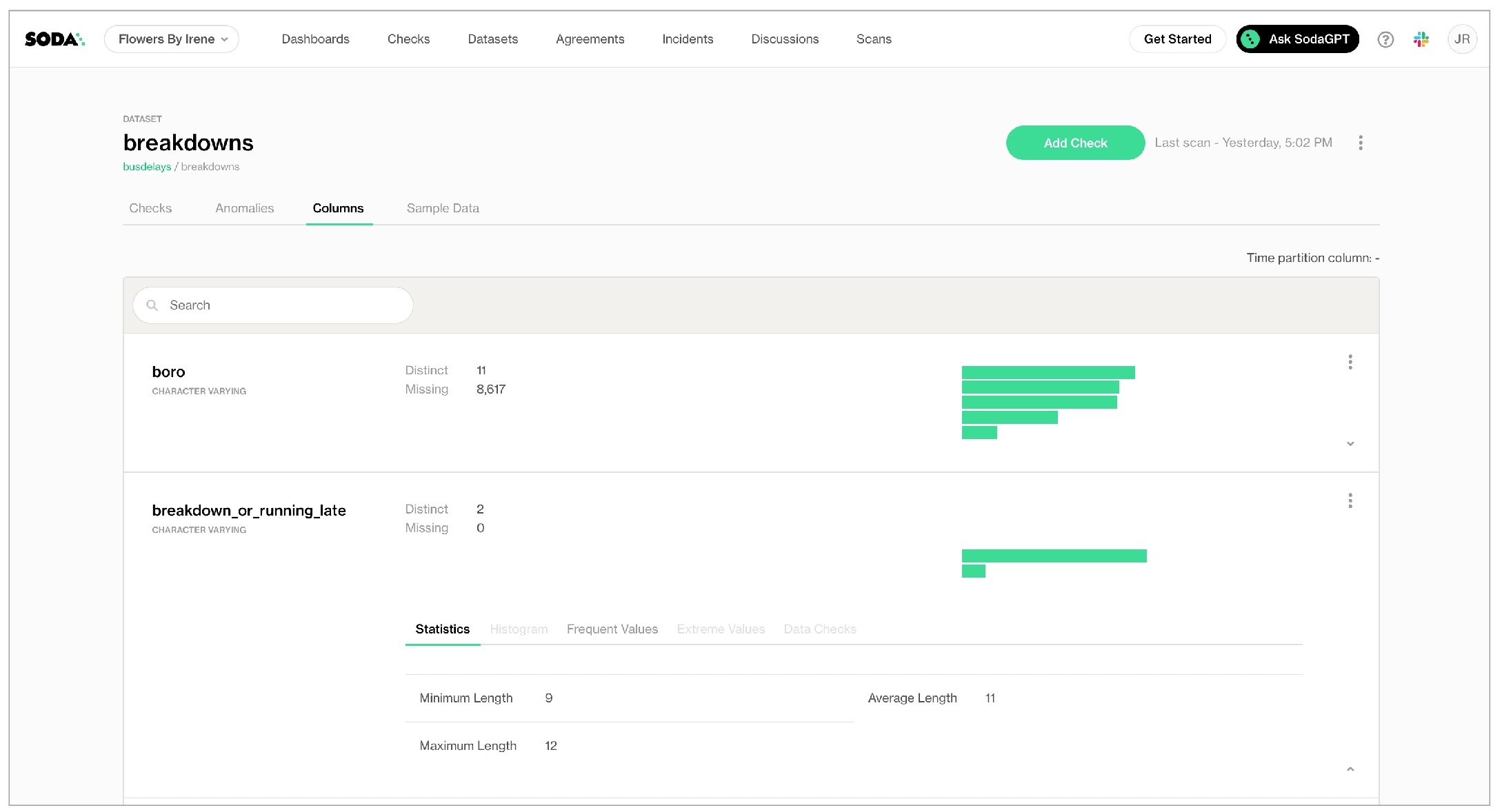

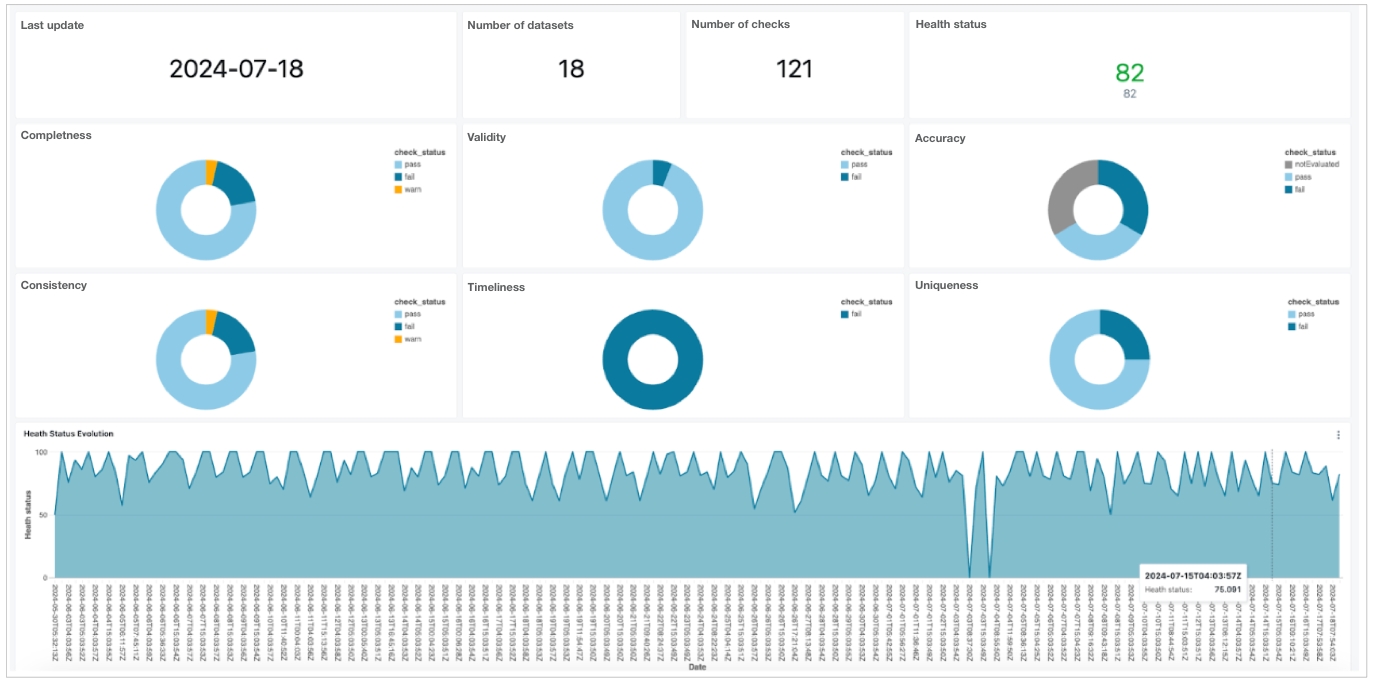

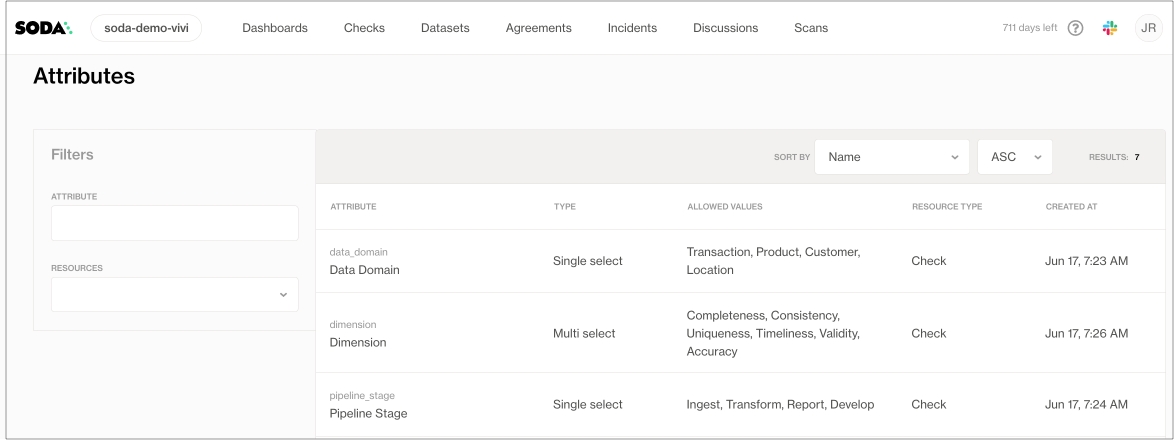

Use attributes, tags, and filters to facilitate your search for the specific data quality status of your datasets.

With dozens, or even hundreds of datasets in your Soda Cloud account, it may be laborious to try to find the data quality information you're looking for. To facilitate your search for specific data quality status, consider defining your own Attributes and Tags for datasets, then use filters to narrow your search.

Define new attributes for datasets in your organization that your colleagues can use to categorize datasets for easy identification and discovery. Consider adding multiple attributes to access precise cross-sections of data quality.

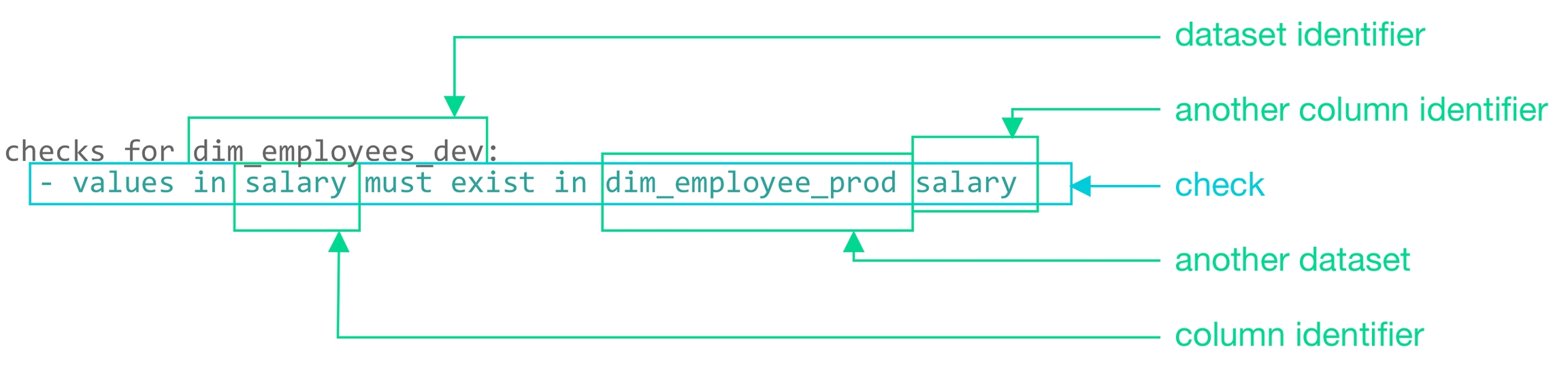

Use a SodaCL cross check to compare row counts across datasets in the same, or different, data sources.

Use a cross check to compare row counts between datasets within the same, or different, data sources.

See also:

✖️ Requires Soda Core Scientific (included in a Soda Agent) ✔️ Supported in Soda Core ✔️ Supported in Soda Library + Soda Cloud ✔️ Supported in Soda Cloud Agreements + Soda Agent ✖️ Available as a no-code check

In the context of , cross checks are unique. This check employs the row_count metric and is limited in its syntax variation, with only a few mutable parts to specify dataset and data source names.

Integrate MS Teams in your Soda Cloud account so that Soda sends alert notifications and incident events to your MS Teams conversation.

Access examples of Soda implementations according to use case and data quality testing needs.

Use the following guides as example implementations based on how you intend to use Soda for data quality testing. For standard set up instructions, see .

Set up Soda to programmatically scan the contents of a local file using Dask.

For use with , only. Refer to .

to use Soda to scan a local file for data quality. Refer to the following example that executes a simple check for row count of the dataset.

data_source my_datasource_name:

type: duckdb

database: filename.db

read_only: true

schema_name: publicsoda test-connection -d my_datasource -c configuration.yml -Vdata_source my_datasource_name:

type: db2

host: 127.0.0.1

port: 50000

username: simple

password: simple_pass

database: database

schema: publicautomated monitoring:

datasets:

- include %

- exclude test%data_source my_datasource_name:

type: denodo

username: simple

password: simple_pass

host: 127.0.0.1

port: 5432

sslmode: preferdata_source my_datasource_name:

type: mysql

host: 127.0.0.1

port: `9004`

username: simple

password: simple_pass

database: customersEmbed Soda’s data quality details within Metaphor’s catalog interface. Leverage the Metaphor integration to visualize rules, metrics, and profiles in your governance workflow.

View Soda-powered data quality rules and metrics in Microsoft Purview. Provide your colleagues with confidence in table-level data through inline quality indicators.

CI/CD & development tools

Ingest dbt-core or dbt Cloud test results into Soda Cloud to track and visualize your test outcomes over time, set alerts on failures, and manage data-quality incidents alongside dbt runs.

Add the Soda GitHub Action to your workflows to automatically execute data-quality scans on pull requests or commits, with results posted as PR comments and in Soda Cloud reports.

Messaging & collaboration

Send alert notifications and incident events to Slack channels. Create private incident channels, track Soda Discussions, and collaborate on failed checks in real time.

Route Soda alert notifications and incident updates into Microsoft Teams channels. Use Teams workflows to manage and triage data-quality issues.

Incident & ticketing systems

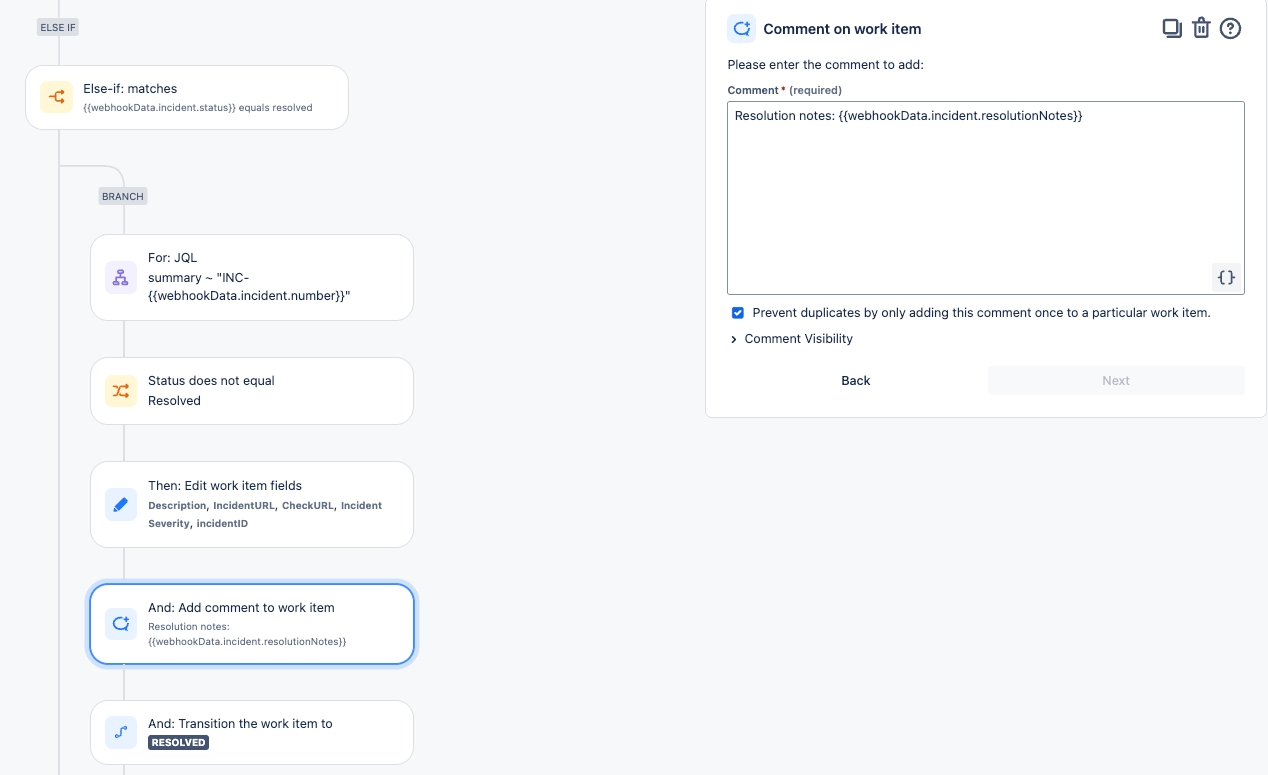

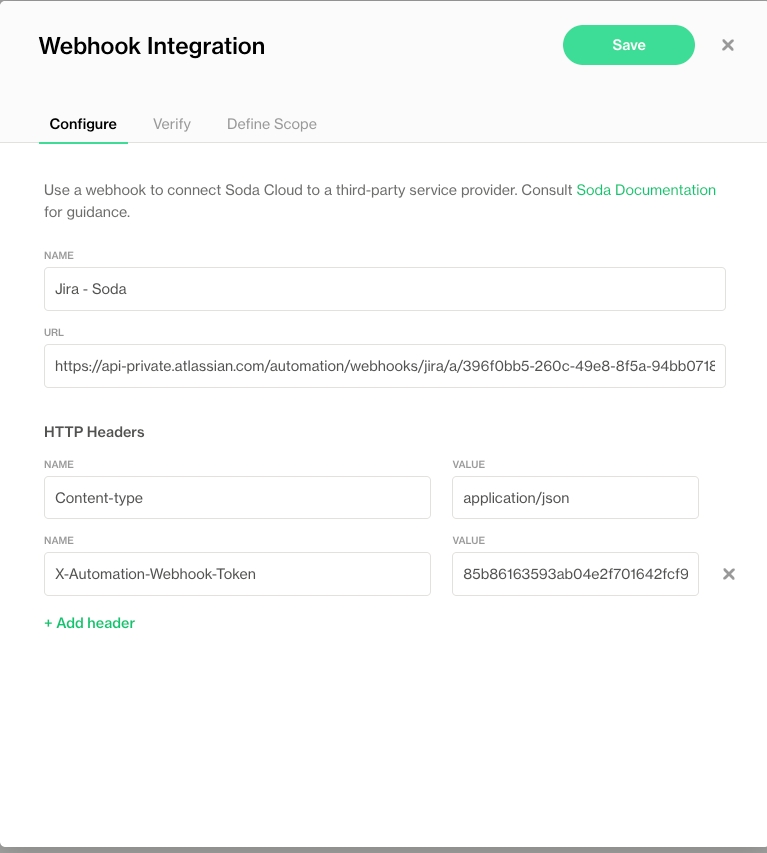

Configure Soda Cloud incidents to automatically create and sync Jira tickets. Keep incident status, descriptions, and links up to date between Soda and Jira.

Use webhooks to bridge Soda incidents with ServiceNow issues. Automate ticket creation and status updates from Soda Cloud into ServiceNow.

Connect Soda Cloud to any HTTP endpoint—PagerDuty, OpsGenie, custom apps—for alert notifications, incident tracking, and agreement events.

Security & access

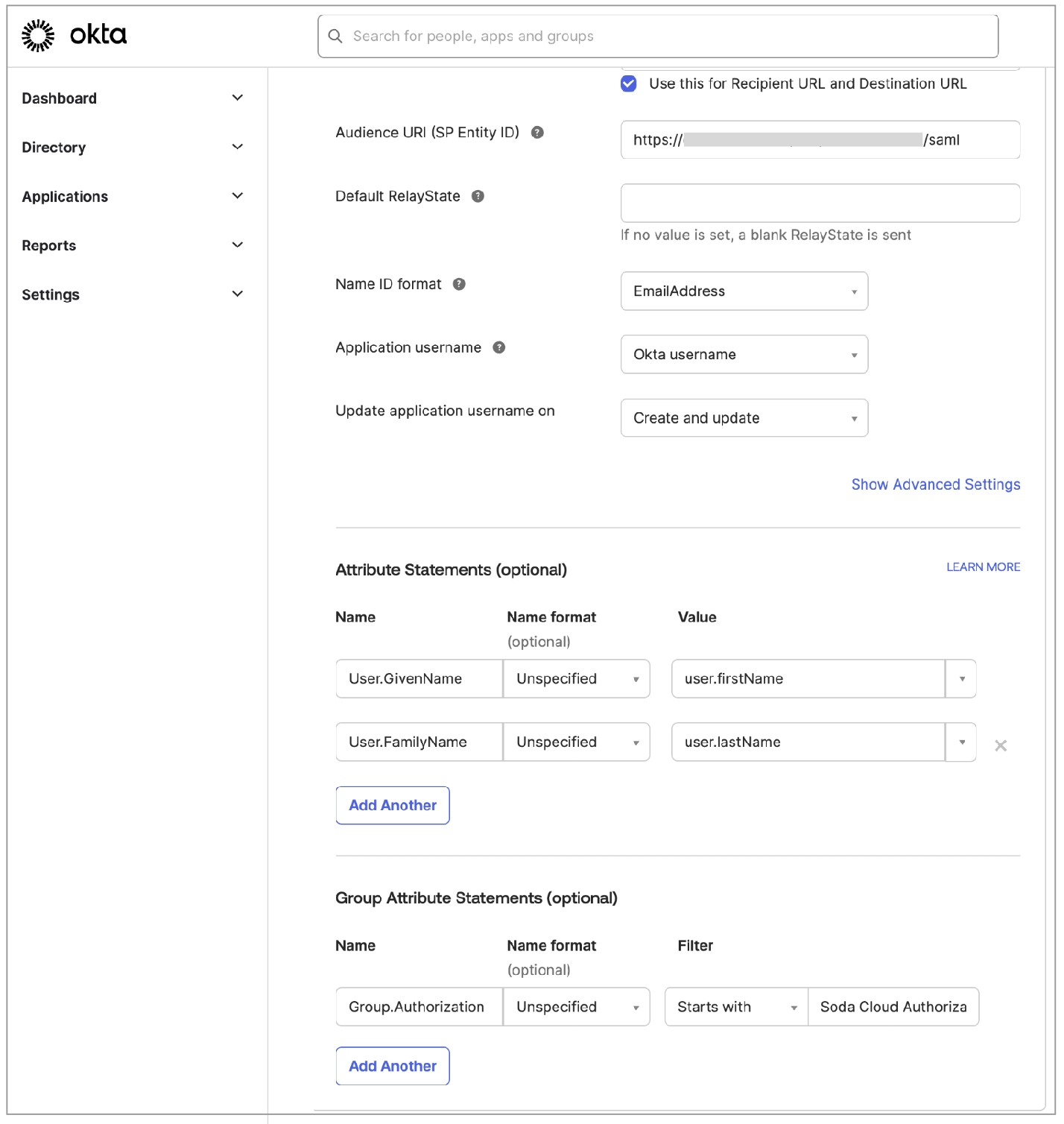

Configure SAML 2.0 SSO with Azure AD, Okta, Google Workspace, or other IdPs. Simplify secure access, user provisioning, and group syncing for your Soda Cloud organization.

import dask.dataframe as dd

from soda.scan import Scan

# Create Soda Library Scan object and set a few required properties

scan = Scan()

scan.set_scan_definition_name("test")

scan.set_data_source_name("dask")

# Read a `cities` CSV file with columns 'city', 'population'

ddf = dd.read_csv('cities.csv')

scan.add_dask_dataframe(dataset_name="cities", dask_df=ddf)

# Define checks using SodaCL

checks = """

checks for cities:

- row_count > 0

"""

# Add the checks to the scan and set output to verbose

scan.add_sodacl_yaml_str(checks)

scan.set_verbose(True)

# Execute the scan

scan.execute()

# Inspect the scan object to review scan results

scan.get_scan_results()Solution: Start Docker Desktop running.

Problem: When you run docker-compose up you get an error that reads Cannot start service soda-adventureworks: Ports are not available: exposing port TCP 0.0.0.0:5432 -> 0.0.0.0:0: listen tcp 0.0.0.0:5432: bind: address already in use.

Solution:

1. Execute the command lsof -i tcp:5432 to print a list of PIDs using the port.

2. Use the PID value to run the following command to free up the port: kill -9 your_PID_value. You many need to prepend the commands with sudo .

3. Run the docker run command again.

soda_cloudconfiguration.ymlSave the configuration.yml file and close the API modal in your Soda account.

In Terminal, return to the tab in which the virtual environment is active in the soda_sip directory. Run the following command to test Soda's connection to the data source.

Command:

last_nameNo duplicate phone numbers validates that each value in the phone column is unique. See Numeric metrics.

Columns have not been added, removed, or changed compares the schema of the dataset to the last scan result to determine if any columns were added, deleted, changed data type, or changed index. The first time this check executes, the results show [NOT EVALUATED] because there are no previous values to which to compare current results. In other words, this check requires a minimum of two scans to evaluate properly. See Schema checks.

Data in this dataset is less than 7 days old confirms that the data in the dataset is less than seven days old. See Freshness checks.

configuration.yml file.

5. Adjust your checks.yml to point to your own dataset in your data source, then adjust the checks to apply to your own data. Go ahead and run a scan!Learn more about How Soda works.

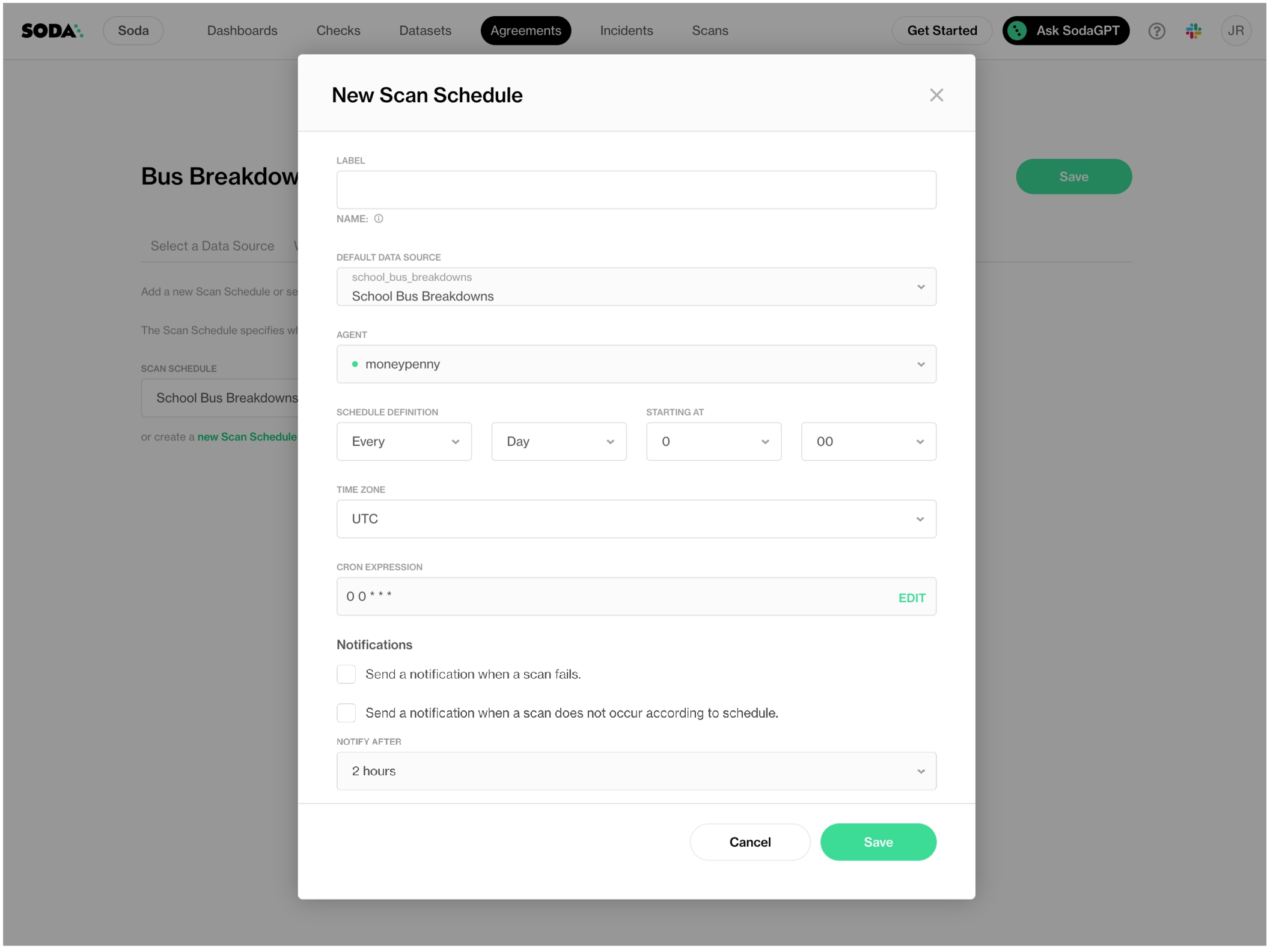

Send a notification when a scan fails.

Check this box to instruct Soda to send a notification when a scan fails to complete, or completes with errors.

Send a notification when a scan does not occur according to the scan definition.

Check this box to instruct Soda to send a notification when a scan times out, meaning it does not complete within a specific time frame after the scheduled start time. See: Configure scan timeouts.

Notify after

Use the dropdown to select the time delay between when a scheduled scan fails or does not complete within the expected timeframe, and when Soda sends an alert notification. For example, set this to 12h to receive a notification 12 hours after Soda logged the failed or delayed scan.

Notify recipients

Use this field to identify to whom Soda sends scan failure or delay alert notifications.

Need help? Join the .

In the Script field, define a script that creates new tickets when a Soda Cloud incident is opened, and updates existing tickets when a Soda Cloud incident status is updated. Use the example below for reference. You may also need to define Security settings according to your organizations authentication rules.

Click Submit, then copy the value of the Resource path to use in the URL field in the Soda Cloud integration setup.

Need help? Join the .

Your data source is compatible with a Soda Agent; refer to tables below.

assign ownership roles for the data source and its datasets

Amazon Athena Amazon Redshift Azure Synapse ClickHouse Databricks SQL Denodo Dremio DuckDB GCP BigQuery Google CloudSQL

IBM DB2 MotherDuck MS SQL Server1 MySQL OracleDB PostgreSQL Presto Snowflake Trino Vertica

BigQuery Databricks SQL MS SQL Server MySQL

PostgreSQL Redshift Snowflake

Need help? Join the .

Provide an identifier for your database.

schema

required

Provide an identifier for the schema in which your dataset exists.

trusted_connection

optional

Indicate connection trustworthiness by providing a boolean value: true or false. The default value is false. Set to true if you are using Active Directory authentication.

encrypt

optional

Indicate the encryption status by providing a boolean value: true or false. The default value is false.

trust_server_certificate

optional

Specifies whether encryption occurs if there is no verifiable server certificate. Provide a boolean value: true or false. The default value is false.

driver

optional

Use this config setting to specify the ODBC driver version you use. For example, SQL Server Native Client 11.0 or ODBC Driver 18 for SQL Server.

scope

optional

Access token scope.

multi_subnet_failover

optional

Enable MultiSubnetFailover; see .

authentication

optional

Authentication method to use. Supported values: sql, activedirectoryinteractive, activedirectorypassword, activedirectoryserviceprincipal, activedirectory, auto, cli, environment, synapsespark, and fabricspark. The default value is sql, which uses username and password

type

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

optional

Provide a port identifier. You can remove the port config setting entirely. Default: 1433.

username

required

Use system variables to retrieve this value securely.

password

required

Use system variables to retrieve this value securely.

database

required

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

Severity: Minor, Major, or Critical

Status: Reported, Investigating, Fixing, Resolved

Lead: a list of team members from whom you can assign the Lead Investigator role

Save your changes.

If you have connected your Soda Cloud account to Slack, navigate to the Integrations tile, then click the auto-generated link that connects directly to a newly-created, public channel in your Slack workspace that is dedicated to the investigation and resolution of the incident and invite team members to the channel to collaborate on resolving the data quality issue. If you have integrated Soda Cloud with MS Teams or another third-party tool, like Jira or ServiceNow, you can access those tools via auto-generated links in the Integrations tile, as well.

Need help? Join the .

soda-dremiotype

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

required

Provide a port identifier.

username

required

Consider using system variables to retrieve this value securely.

To confirm that you have correctly configured the connection details for the data source(s) in your configuration YAML file, use the test-connection command. If you wish, add a -V option to the command to returns results in verbose mode in the CLI.

text

CHAR, VARCHAR, STRING

number

TINYINT, SMALLINT, INT, INTEGER, BIGINT, DOUBLE, FLOAT, DECIMAL

time

DATE, TIMESTAMP

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

To validate your account license or free trial, Soda Library must communicate with a Soda Cloud account via API keys. You create a set of API keys in your Soda Cloud account, then use them to configure the connection to Soda Library.

In a browser, navigate to cloud.soda.io/signup to create a new Soda account, which is free for a 45-day trial. If you already have a Soda account, log in.

Navigate to your avatar > Profile, then access the API keys tab. Click the plus icon to generate new API keys.

Copy+paste the API key values to a temporary, secure place in your local environment.

Soda Library has the following requirements:

Python 3.8, 3.9, or 3.10

Pip 21.0 or greater

Download the notebook: Soda Databricks notebook

Use Soda to test data in a Databricks pipeline.

Learn more about SodaCL checks and metrics.

Access instructions to Generate API Keys.

Need help? Join the .

type

required

Identify the type of data source for Soda.

access_key_id

required 1

Consider using system variables to retrieve this value securely. See .

secret_access_key

required 1

Consider using system variables to retrieve this value securely. See .

region_name

optional

The endpoint your AWS account uses. Refer to .

role_arn

optional 2

Specify role to use for authentication and authorization.

staging_dir

1 access_key_id and secret_access_key are required parameters to obtain an authentication token from Amazon Athena or Redshift. You can provide these key values in the configuration file or as environment variables.

2You may add the optional role_arn parameter which first authenticates with the access keys, then uses the role to access temporary tokens that allow for authentication. Depending on your Athena or Redshift setup, you may be able to use only the role_arn to authenticate, though Athena still must access the keys from a config file or environment variables. See AWS Boto3 documentation for details on the progressive steps it takes to access the credentials it needs to authenticate.

Some users who access their Athena or Redshift data source via a self-hosted Soda Agent deployed in a Kubernetes cluster have reported that they can use IAM roles for Service Accounts to authenticate, as long as the IAM role that the Kubernetes pod has from the Kubernetes Service Account has the permissions to access Athena or Redshift. See Enable IAM Roles for Service Accounts (IRSA) on the EKS cluster.

To confirm that you have correctly configured the connection details for the data source(s) in your configuration YAML file, use the test-connection command. If you wish, add a -V option to the command to returns results in verbose mode in the CLI.

text

CHAR, VARCHAR, STRING

number

TINYINT, SMALLINT, INT, INTEGER, BIGINT, DOUBLE, FLOAT, DECIMAL

time

DATE, TIMESTAMP

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

optional

Provide a port identifier. You can remove the port config setting entirely. Default: 1433.

username

required

Use system variables to retrieve this value securely.

password

required

Use system variables to retrieve this value securely.

database

required

Provide an identifier for your database.

schema

required

Provide an identifier for the schema in which your dataset exists.

trusted_connection

optional

Indicate connection trustworthiness by providing a boolean value: true or false. The default value is false. Set to true if you are using Active Directory authentication.

encrypt

optional

Indicate the encryption status by providing a boolean value: true or false. The default value is false.

trust_server_certificate

optional

Specifies whether encryption occurs if there is no verifiable server certificate. Providing a boolean value: true or false. The default value is false.

driver

optional

Use this config setting to specify the ODBC driver version you use. For example, SQL Server Native Client 11.0 or ODBC Driver 18 for SQL Server.

scope

optional

Access token scope.

multi_subnet_failover

optional

Enable MultiSubnetFailover; see .

authentication

optional

Authentication method to use. Supported values: sql, activedirectoryinteractive, activedirectorypassword, activedirectoryserviceprincipal, activedirectory. The default value is sql which uses username and password to authenticate.

text

CHAR, VARCHAR, TEXT, NCHAR, NVARCHAR, BINARY

number

BIG INT, NUMERIC, BIT, SMALLINT, DECIMAL, SMALLMONEY, INT, TINYINT, MONEY, FLOAT, REAL

time

DATE, TIME, DATETIME, DATETIMEOFFSET

type

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

by organizational department: Product Marketing, Engineering-FE, Finance-AP, Customer Success

by product

by data domain: Customer data, Product data, Order & Fulfillment data

by internal objectives and key results (OKR)

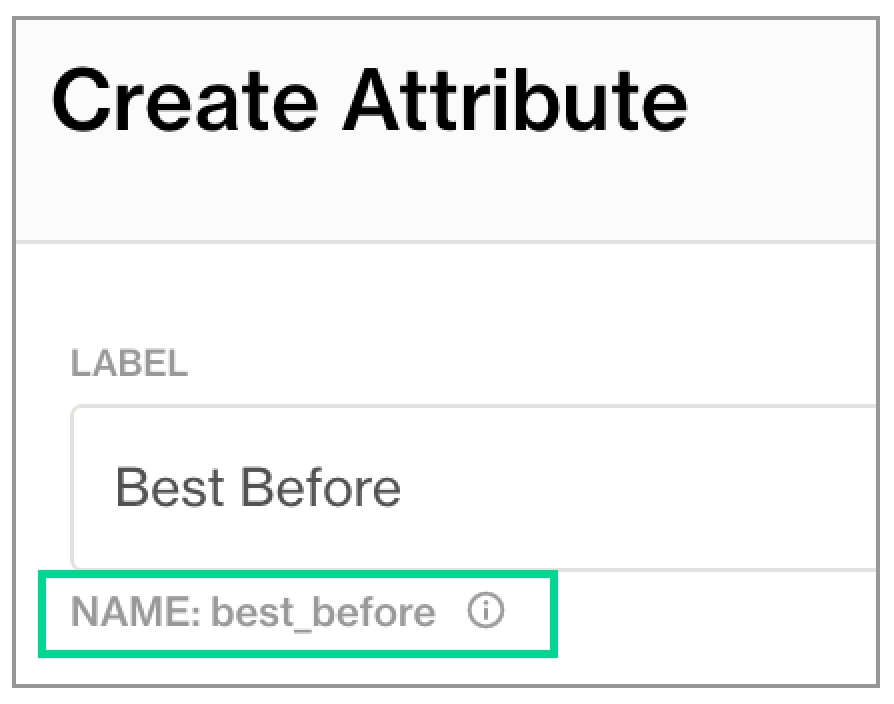

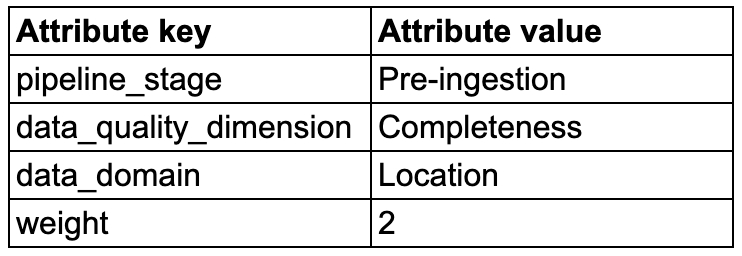

As a user with the permission to do so in your Soda Cloud account, navigate to your avatar > Attributes > New Attribute.

Follow the guided steps to create the new attribute. Use the details below for insight into the values to enter in the fields in the guided steps.

Label

Enter the key for the key:value pair that makes up the attribute. For example, if you define a dataset attribute's key department, its value could be marketing or finance.

Resource Type

Select Dataset to define an attribute for a dataset.

Type

Define the type of input a dataset owner may use for the value that pairs with the attribute's key:

Single select

Multi select

Checkbox

Text

Number

Date

Note that during a scan, Soda validates that the type of input for an attribute's value matches the expected type. For example, if your attribute's type is Number and the dataset owner enters a value of one instead of 1, the scan produces an error to indicate the incorrect attribute value.

Allowed Values

Applies only to Single select and Multi select. Provide a list of values that a check author may use when applying the attribute key:value pair to a check.

Description

(Optional) Provide details about the check attribute to offer guidance for your fellow Soda users.

Once created, you cannot change the type of your attribute. For example, you cannot change a checkbox attribute into a multi-select attribute.

Once created, you can change the display name of an attribute.

For a single- or multi-select attribute, you can remove, change, or add values to the list of available selections. However, if you remove or change values on such a list, you cannot search for the deleted or previous value in the dataset filter.

While only a Soda Cloud Admin can define or revise dataset attributes, any Admin, Manager, or Editor for a dataset can apply attributes to it.

Navigate to the Datasets dashboard, click the stacked dots next to a dataset, then select Edit Dataset. Use the attributes fields to apply the appropriate attributes to the dataset.

While editing a dataset, consider adding Tags to the dataset as well. Use tags to:

identify datasets that are associated with a particular marketing campaign

identify datasets that are relevant for a particular customer account

identify datasets whose quality is critical to business operations, or to categorize datasets according to their criticality in general, such as “high”, “medium”, and “low”.

identify datasets that populate a particular report or dashboard

After saving your changes and applying tags and attributes to multiple datasets, use the Filters in the Datasets dashboard to display the datasets that help narrow your study of data quality, then click Save Collection to name the custom filtered view.

In the future, use the dropdown in the Checks dashboard to quickly access your collection again.

Create alerts to notify your team of data quality issues.

Learn how to create and track data quality Incidents.

Need help? Join the .

The example check below compares the volume of rows in two datasets in the same data source. If the row count in the dim_department_group is not the same as in dim_customer, the check fails.

You can use cross checks to compare row counts between datasets in different data sources, as in the example below.

In the example, retail_customers is the name of the other dataset, and aws_postgres_retail is the name of the data source in which retail_customers exists.

If you wish to compare row counts of datasets in different data sources, you must have configured a connection to both data sources. Soda needs access to both data sources in order to execute a cross check between data sources.

The data sources do not need to be the same type; you can compare a dataset in a PostgreSQL data source to a dataset in a BigQuery data source.

✓

Define a name for a cross check; see .

✓

Add an identity to a check.

Define alert configurations to specify warn and fail alert conditions.

-

Apply an in-check filter to return results for a specific portion of the data in your dataset.

Learn more about SodaCL metrics and checks in general.

Learn more about Comparing data using SodaCL.

Use a schema check to discover missing or forbidden columns in a dataset.

Reference tips and best practices for SodaCL.

Need help? Join the .

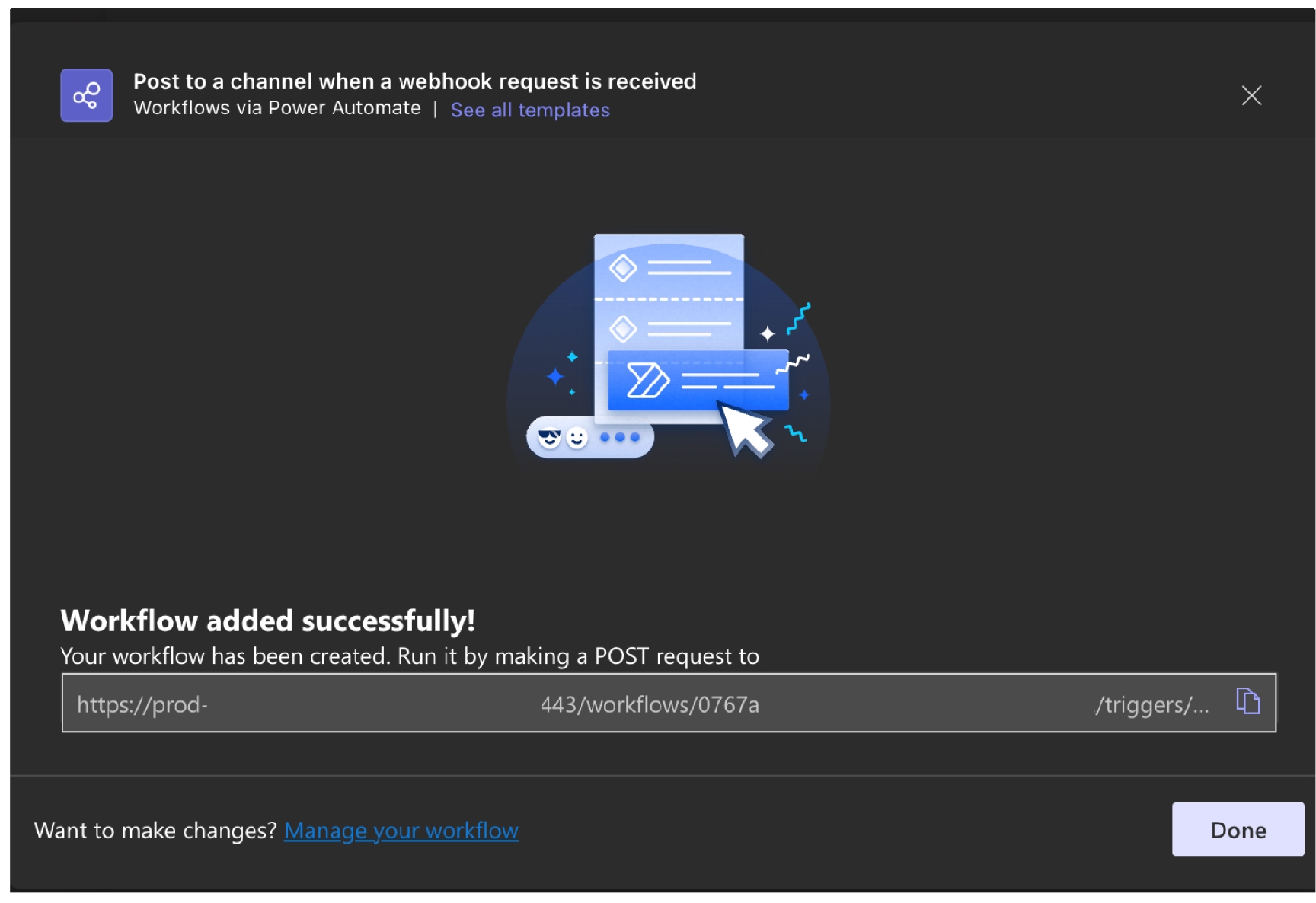

As a user with permission to do so, log in to your Soda Cloud account, navigate to your avatar > Organization Settings, then select the Integrations tab.

Click the + at the upper right of the table of integrations to add a new integration.

In the Add Integration dialog box, select Microsoft Teams.

In the first step of the guided integration workflow, follow the instructions to navigate to your MS Teams account to create a Workflow; see Microsoft's documentation for . Use the Workflow template to Post to a channel when a webhook request is received.

In the last step of the guided Workflow creation, copy the URL created after successfully adding the workflow.

Returning to Soda Cloud with the URL for Workflow, continue to follow the guided steps to complete the integration. Reference the following tables for guidance on the values to input in the guided steps.

Name

Provide a unique name for your integration in Soda Cloud.

URL

Input the Workflow URL you obtained from MS Teams.

Enable to send notifications to Microsoft Teams when a check result triggers an alert.

Check to allow users to select MS Teams as a destination for alert notifications when check results warn or fail.

Use Microsoft Teams to track and resolve incidents in Soda Cloud.

Check to automatically send incident information to an MS Teams channel.

Channel URL

Provide a channel identifier to which Soda Cloud sends all incident events.

Use Microsoft Teams to track discussions in Soda Cloud.

Check to automatically send notifications to an MS Teams channel when a user creates or modifies a discussion in Soda Cloud.

Use the Alert Notification scope to enable Soda Cloud to send alert notifications to an MS Teams channel to notify your team of warn and fail check results. With such an integration, Soda Cloud enables users to select MS Teams as the destination for an alert notification of an individual check or checks that form a part of an agreement, or multiple checks. To send notifications that apply to multiple checks, see Set notification rules.

Use the Incident scope to notify your team when a new incident has been created in Soda Cloud. With such a scope, Soda Cloud displays an external link to the MS Teams channel in the Incident Details. Soda Cloud sends all incident events to only one channel in MS Teams. As such, you must provide a separate link in the Channel URL field in the Define Scope tab. For example, https://teams.microsoft.com/mychannel. To obtain the channel link in MS Teams, right-click on the channel name in the overview sidebar. Refer to Incidents for more details about using incidents in Soda Cloud.

Use the Discussions scope to post to a channel when a user creates or modifies a Soda Cloud discussion. Soda Cloud sends all incident events to only one channel in MS Teams. As such, you must provide a separate link in the Channel URL field in the Define Scope tab. For example, https://teams.microsoft.com/mychannel. To obtain the channel link in MS Teams, right-click on the channel name in the overview sidebar. Refer to Begin a discussion and propose checks for more details about using incidents in Soda Cloud.

Problem: You encounter an error that reads, "Error encountered while rendering this message."

Solution: A fix is documented, the short version of which is as follows.

Restart MS Teams.

Clear your cache and cookies.

If you have not already done so, update to the latest version of MS Teams.

Learn more about general webhooks to integrate Soda Cloud with other third-party service providers.

Set notification rules that apply to multiple checks in your account.

Access a list of all integrations that Soda Cloud supports.

Need help? Join the .

Soda Library Soda Cloud

Use this guide to set up Soda to test before and after data migration between data sources.

Soda Library Soda Cloud

Use this guide to set up Soda Cloud to enable users across your organization to serve themselves when it comes to testing data quality.

Soda Cloud Soda Agent

Use this guide to set up Soda to test the quality of your data during your development lifecycle in a GitHub Workflow.

Soda Library Soda Cloud

Use this guide to set up Soda to automatically monitor data quality.

Soda Cloud Soda Agent

Use the following How tos for practical advice, examples, and instructions for using Soda.

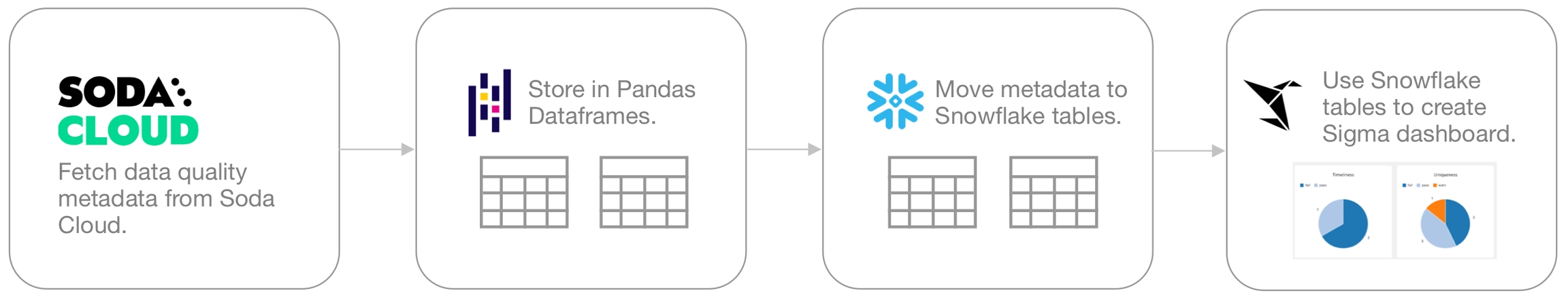

Learn how to build a customized data quality reporting dashboard in Sigma using the Soda Cloud API.

Soda Library Soda Cloud

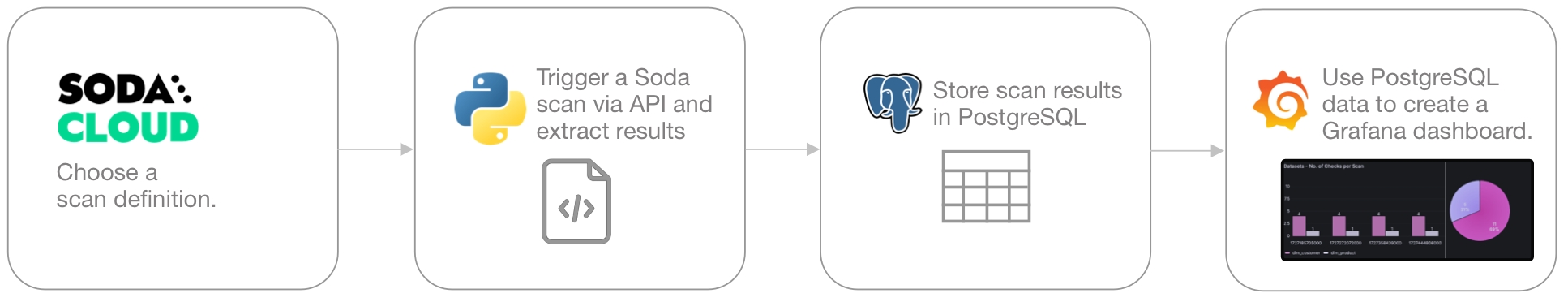

Learn how to build a customized data quality reporting dashboard in Grafana using the Soda Cloud API.

Soda Cloud

Learn how to invoke Soda data quality tests in a Databricks notebook.

Soda Library Soda Cloud

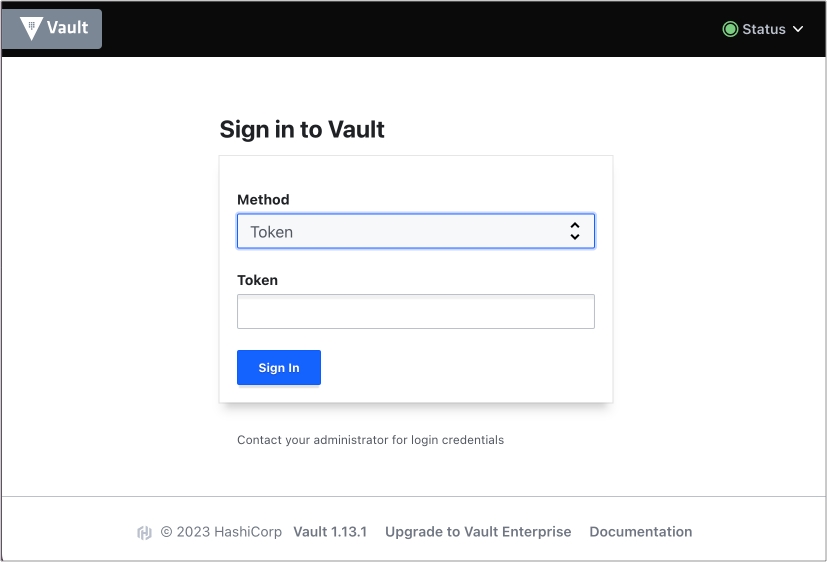

Learn how to set up a Soda Agent to use an External Secrets Manager to retrieve frequently-rotated data source passwords.

Soda Cloud Self-hosted Agent

Learn how to use Soda Cloud API keys to securely communicate with other entities such as Soda Library and self-hosted Soda Agents, and to provide secure access to Soda Cloud via API.

Soda Cloud

Need help? Join the Soda community on Slack.

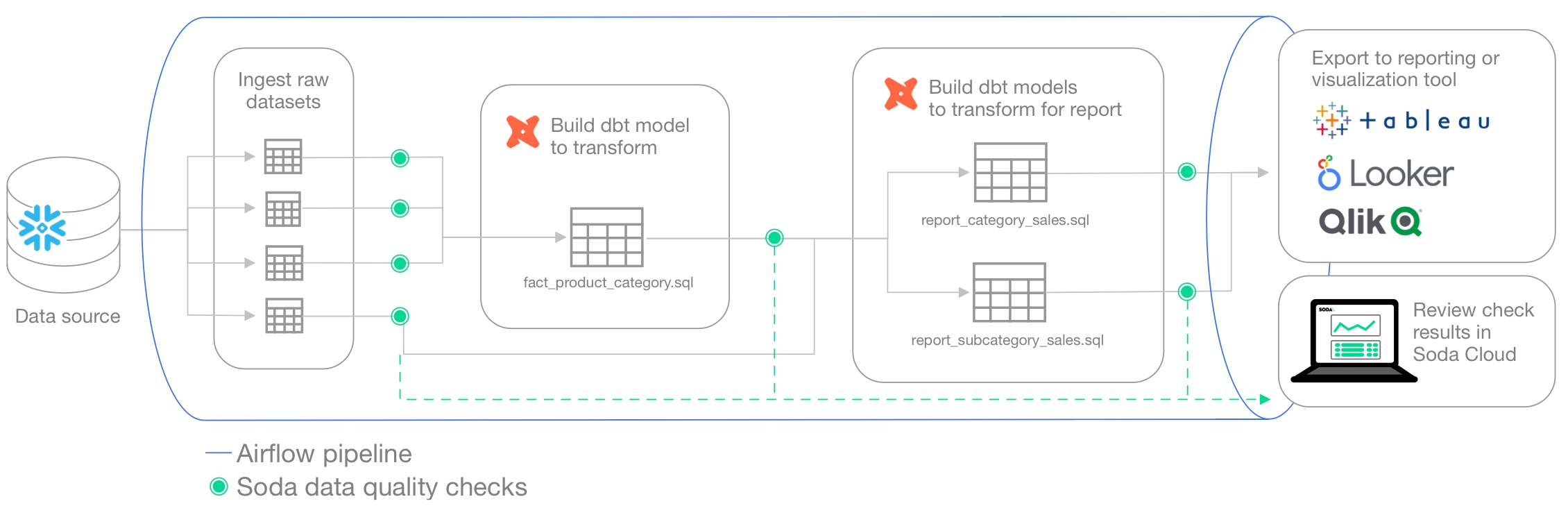

Use this guide as an example for how to set up Soda to test the quality of your data in an Airflow pipeline that uses dbt transformations.

Soda Library Soda Cloud

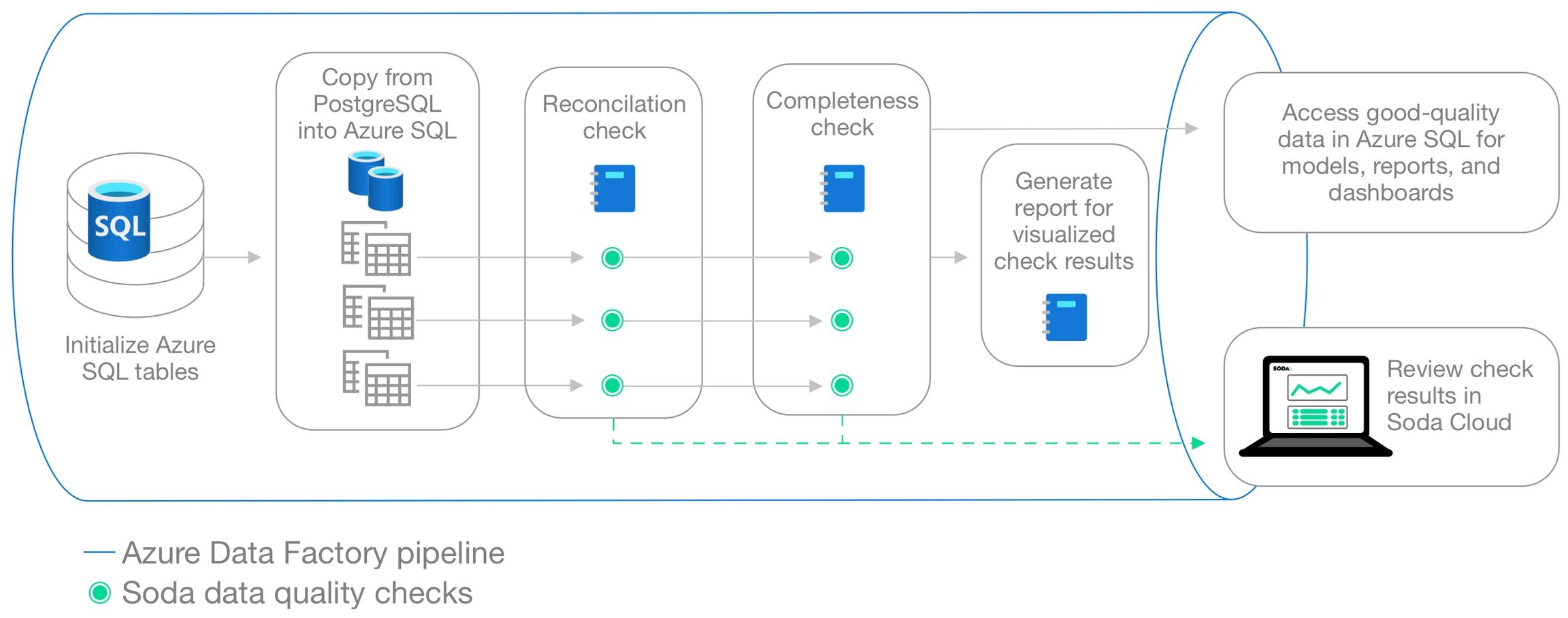

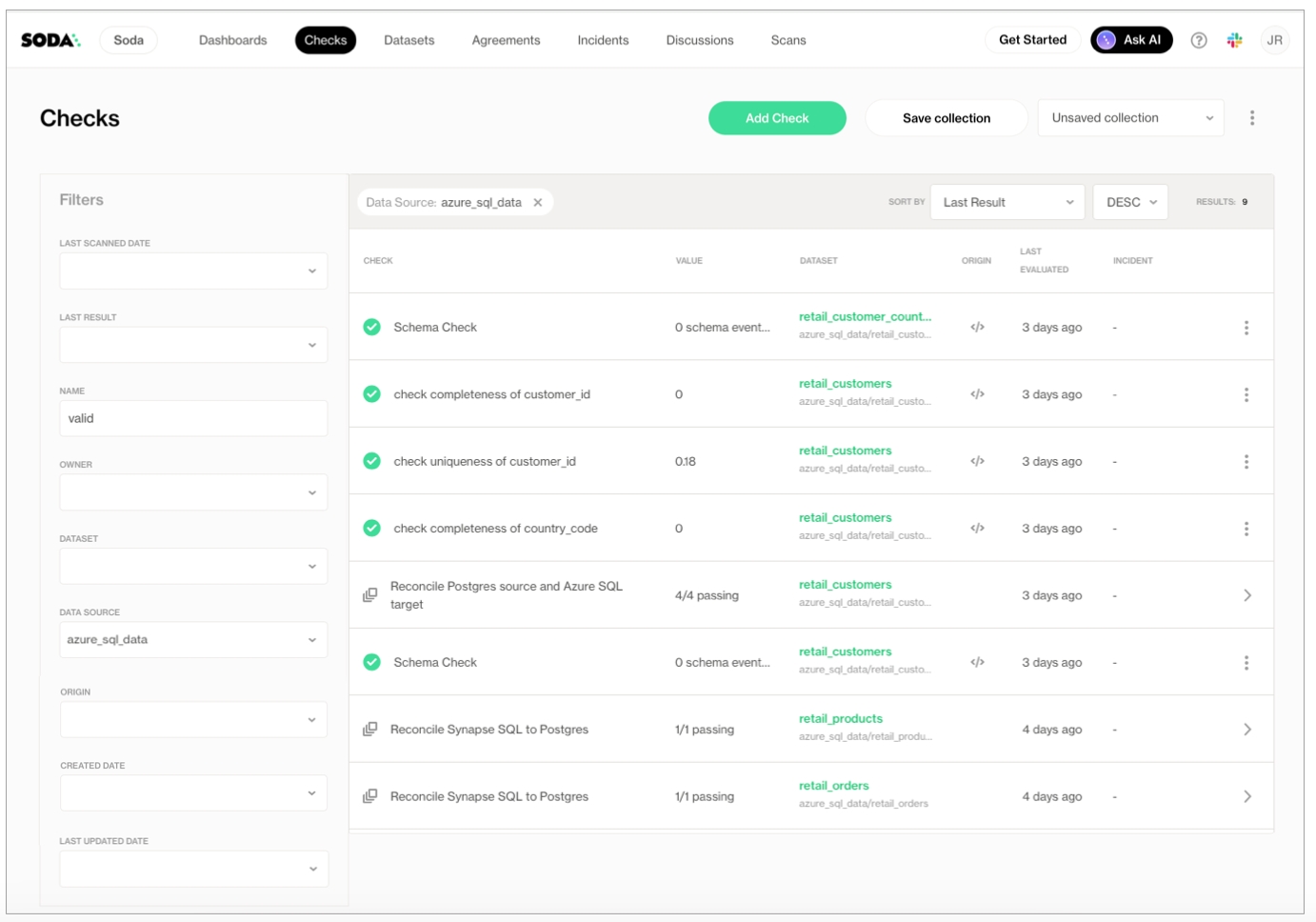

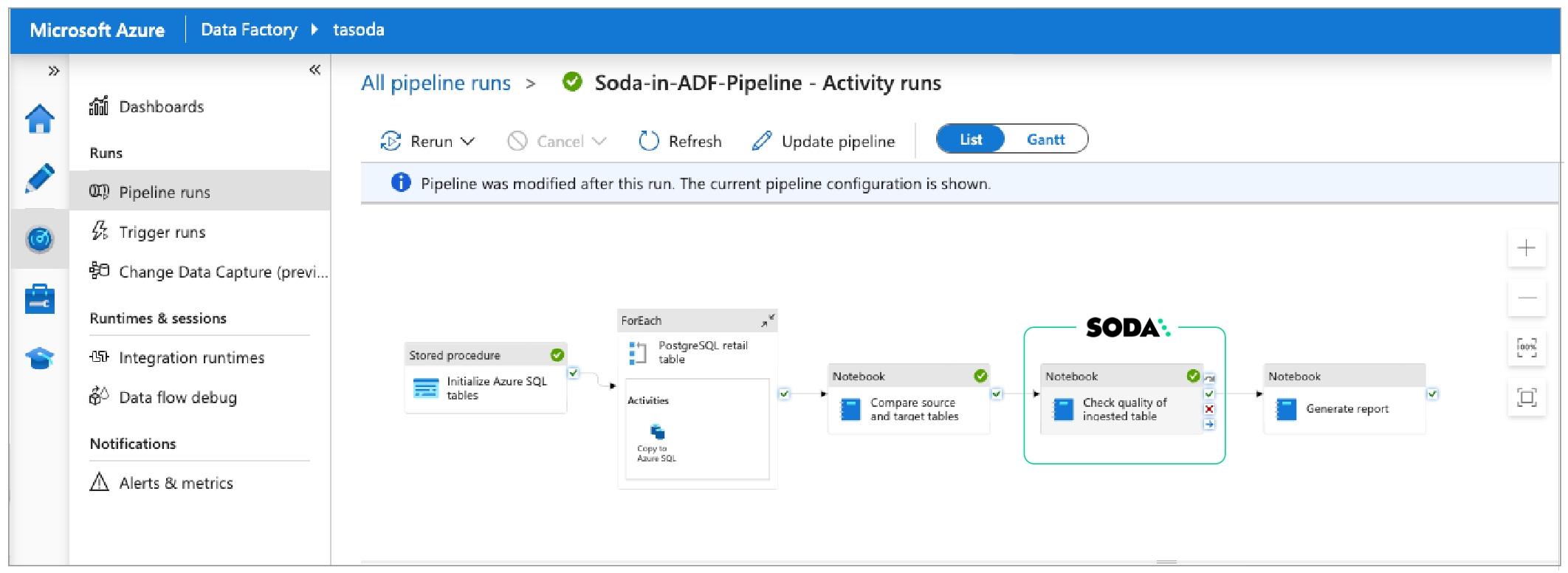

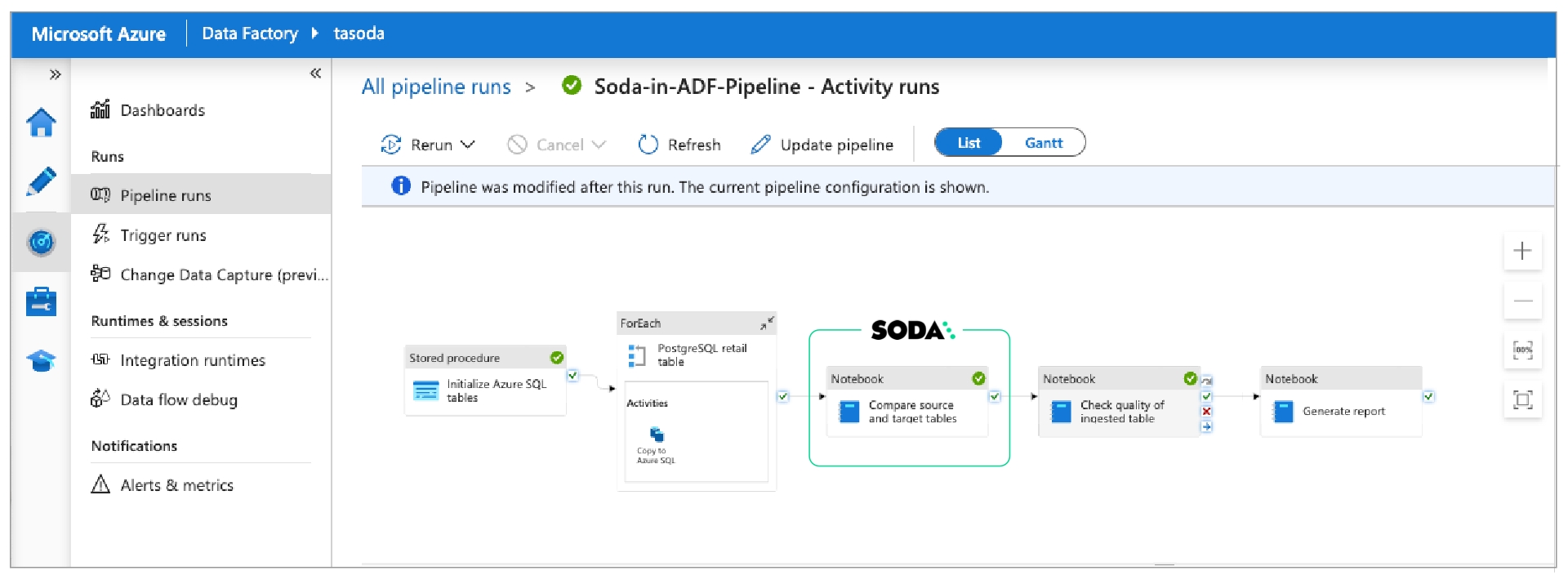

Learn how to invoke Soda data quality tests in an ETL pipeline in Azure Data Factory.

Soda Library Soda Cloud

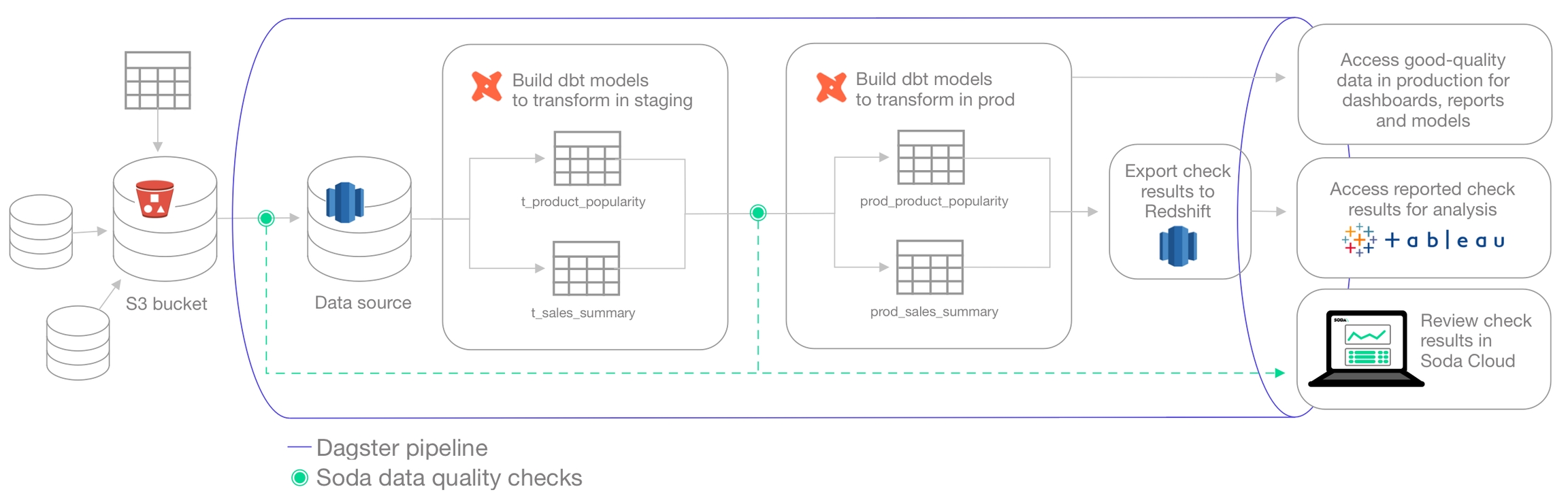

Learn how to invoke Soda data quality tests in a Dagster pipeline.

Soda Library Soda Cloud

Learn how to use Databricks notesbooks with Soda to test data quality before feeding a machine learning model.

password

required

Consider using system variables to retrieve this value securely.

database

required

Provide an identifier for your database.

schema

optional

Provide an identifier for the schema in which your dataset exists.

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

Send all check alerts to the Check Owner

Soda Cloud sends all check results that fail or warn to the Soda Cloud user who created or owns an individual check.

Send all check alerts to the Dataset Owner

Soda Cloud sends all check results that fail or warn to the Soda Cloud user who created or owns the dataset to which the checks are associated.

Name

Provide a unique identifier for your notification.

For

Select All Checks, or select Selected Checks to use conditions to identify specific checks to which you want the rule to apply. You can identify checks according to several attributes such as Data Source Name, Dataset Name, or Check Name.

Notify Recipient

Select the destination to which this rule sends its notifications. For example, you can send the rule’s notifications to a channel in Slack.

Notify About

Identify the notifications this rule sends based on the severity of the check result: warn, fail, or both.

Need help? Join the .

Need help? Join the .

type

required

Identify the type of data source for Soda.

username

required

Consider using system variables to retrieve this value securely.

password

required

Consider using system variables to retrieve this value securely.

host

required

Provide a host identifier.

port

optional

Provide a port identifier.

database

optional

Provide a virtual database (VDB) name.

connection_timeout

optional

Provide an integer value to represent seconds.

sslmode

optional

Provide a value to indicate the type of SSL support:

prefer

require

allow

diable

Default value is prefer.

text

CHARACTER VARYING, CHARACTER, CHAR, TEXT

number

SMALLINT, INTEGER, BIGINT, DECIMAL, NUMERIC, VARIABLE, REAL, DOUBLE PRECISION, SMALLSERIAL, SERIAL, BIGSERIAL

time

TIMESTAMP, DATE, TIME, TIMESTAMP WITH TIME ZONE, TIMESTAMP WITHOUT TIME ZONE, TIME WITH TIME ZONE, TIME WITHOUT TIME ZONE

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

type

required

Identify the type of data source for Soda.

host

required

Provide a host identifier.

port

required

Provide a port identifier.

username

required

Use system variables to retrieve this value securely.

password

required

Use system variables to retrieve this value securely.

database

required

Provide an identifier for your database.

text

CHAR, VARCHAR, TEXT

number

BIG INT, NUMERIC, BIT, SMALLINT, DECIMAL, SMALLMONEY, INT, TINYINT, MONEY, FLOAT, REAL

time

DATE, TIME, DATETIME, DATETIMEOFFSET

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

Need help? Join the .

For Soda to run quality scans on your data, you must configure it to connect to your data source. To learn how to set up Soda and configure it to connect to your data sources, see .

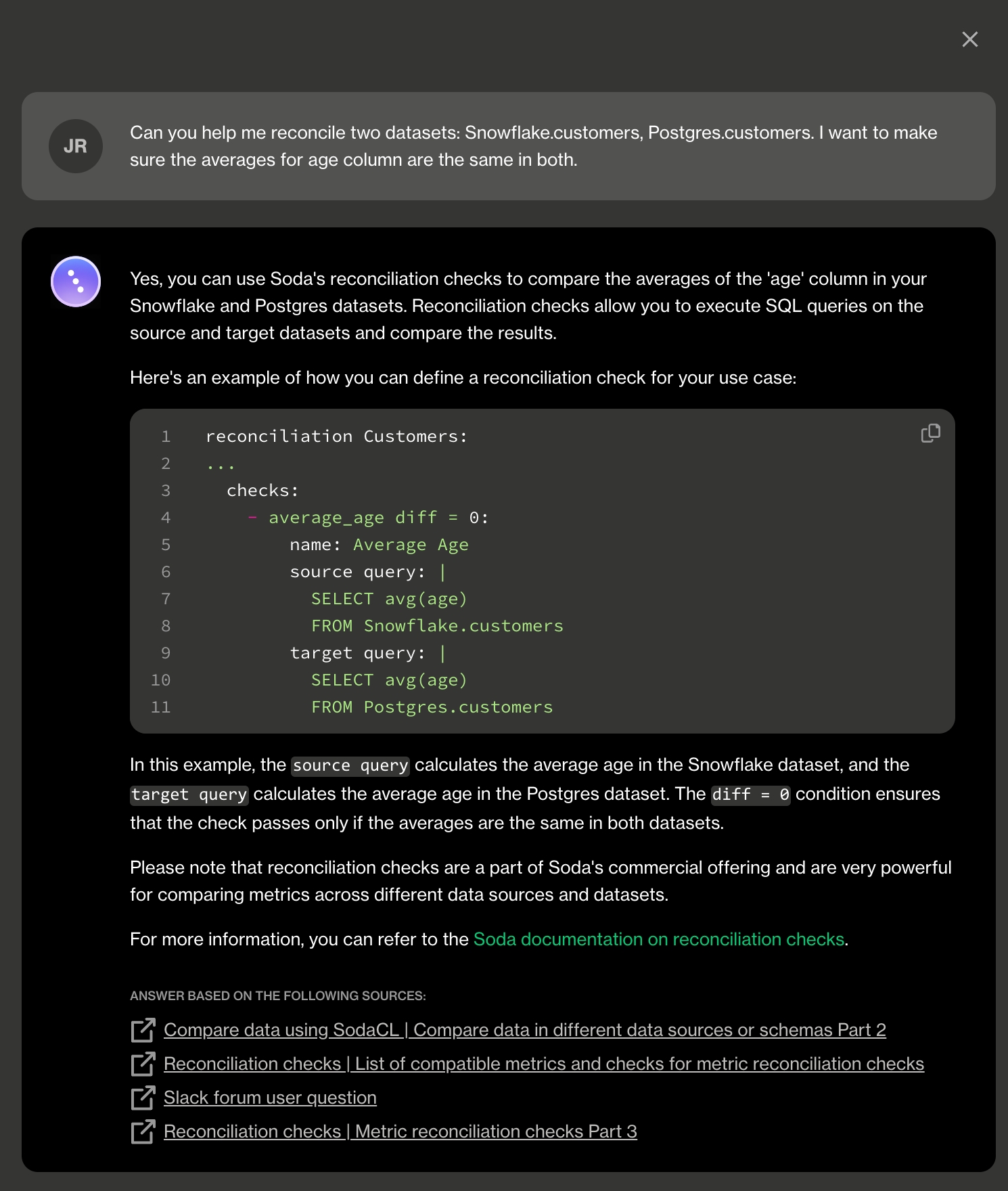

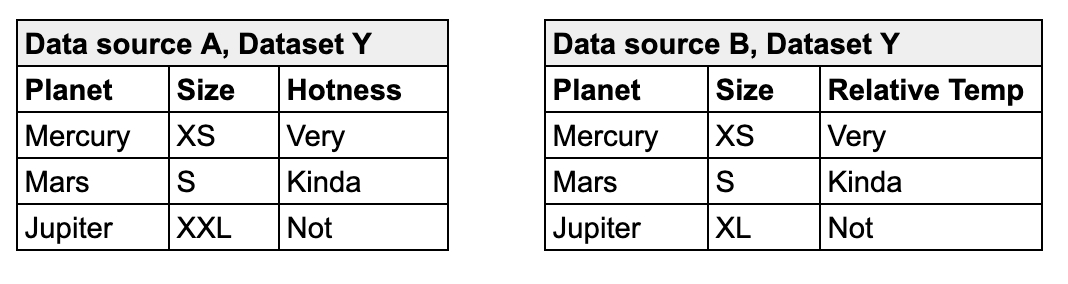

Learn how to use the Soda Checks Language to compare data across datasets in the same, or different, data sources.

There are several ways to use SodaCL metrics and checks to compare data across datasets and data sources. The following offers some advice about how and when to use different types of checks to obtain the comparison results you need.

See also: Reconciliation checks